Operating System 4

- 1. Chapter 4: Threads

- 2. What’s in a process? A process consists of (at least):
  - an address space
  - the code for the running program
  - the data for the running program
  - an execution stack and stack pointer (SP), which trace the state of procedure calls made
  - the program counter (PC), indicating the next instruction
  - a set of general-purpose processor registers and their values
  - a set of OS resources: open files, network connections, sound channels, …

- 3. Concurrency
  - Imagine a web server, which might like to handle multiple requests concurrently. While waiting for the credit card server to approve a purchase for one client, it could be retrieving the data requested by another client from disk and assembling the response for a third client from cached information.
  - Imagine a web client (browser), which might like to initiate multiple requests concurrently. The IT home page has 10 “src= …” HTML attributes, each of which involves a lot of waiting. Wouldn’t it be nice to be able to launch these requests concurrently?
  - Imagine a parallel program running on a multiprocessor, which might like to employ “physical concurrency”. For example, when multiplying large matrices, split the output matrix into k regions and compute the entries in each region concurrently using k processors.

- 4. What’s needed?
  - In each of these examples of concurrency (web server, web client, parallel program):
    - Everybody wants to run the same code
    - Everybody wants to access the same data
    - Everybody has the same privileges (most of the time)
    - Everybody uses the same resources (open files, network connections, etc.)
  - But you’d like to have multiple hardware execution states:
    - an execution stack and stack pointer (SP), which trace the state of procedure calls made
    - the program counter (PC), indicating the next instruction
    - a set of general-purpose processor registers and their values

- 5. How could we achieve this?
  - Given the process abstraction as we know it:
    - fork several processes
    - cause each to map to the same physical memory to share data
  - This is really inefficient:
    - space: PCB, page tables, etc.
    - time: creating OS structures, forking and copying the address space, etc.
  - So any support that the OS can give for multi-threaded programming is a win
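
The process-based approach described on this slide can be sketched as follows. This is only an illustration under stated assumptions: `share_via_fork` is a hypothetical helper name, and `MAP_ANONYMOUS` is a widely supported extension rather than part of base POSIX. The child writes into a shared mapping and the parent reads the value back; note how much machinery (a new process, a separate mapping, a wait) is needed just to share one integer.

```c
#define _DEFAULT_SOURCE          /* exposes MAP_ANONYMOUS on glibc */
#include <assert.h>
#include <sys/mman.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/* Hypothetical helper: returns the value the parent sees after the
 * child has written to the shared mapping, or -1 on error. */
int share_via_fork(void) {
    /* A shared anonymous mapping: parent and child see the same memory. */
    int *shared = mmap(NULL, sizeof *shared, PROT_READ | PROT_WRITE,
                       MAP_SHARED | MAP_ANONYMOUS, -1, 0);
    if (shared == MAP_FAILED)
        return -1;
    *shared = 0;

    pid_t pid = fork();          /* new PCB, new page tables, ... */
    if (pid == -1) {
        munmap(shared, sizeof *shared);
        return -1;
    }
    if (pid == 0) {              /* child process */
        *shared = 42;
        _exit(0);
    }

    int status = 0;
    if (waitpid(pid, &status, 0) == -1) {
        munmap(shared, sizeof *shared);
        return -1;
    }
    assert(WIFEXITED(status));   /* the child exits normally in this sketch */

    int seen = *shared;          /* the child's write is visible here */
    munmap(shared, sizeof *shared);
    return seen;
}
```

Calling `share_via_fork()` from `main` should return the value written by the child on systems that support shared anonymous mappings.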

- 6. Can we do better? Key idea: separate the concept of a process (address space, etc.) from that of a minimal “thread of control” (execution state: PC, etc.). This execution state is usually called a thread or, sometimes, a lightweight process.

- 7. Single-Threaded Example
  - Imagine the following C program: main() { ComputePI("pi.txt"); PrintClassList("clist.text"); }
  - What is the behavior here? The program would never print out the class list
  - Why? ComputePI would never finish, so PrintClassList is never reached

- 8. Use of Threads
  - Version of the program with threads: main() { CreateThread(ComputePI("pi.txt")); CreateThread(PrintClassList("clist.text")); }
  - What does “CreateThread” do? It starts an independent thread running a given procedure
  - What is the behavior here? Now you would actually see the class list
  - This should behave as if there are two separate CPUs
  - (Figure: CPU1 and CPU2 shown along a time axis.)
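
The slide's `CreateThread(ComputePI("pi.txt"))` is pseudocode: in real C that expression would call `ComputePI` first and pass its result. A minimal sketch with POSIX threads passes the procedure and its argument separately. The names `compute_pi`, `print_class_list`, and `run_both` are stand-ins invented for this example, and the pi computation is deliberately truncated so that the sketch terminates.

```c
#include <assert.h>
#include <pthread.h>
#include <stdio.h>

double pi_estimate = 0.0;      /* written by the ComputePI stand-in */
int class_list_printed = 0;    /* set by the PrintClassList stand-in */

/* Stand-in for ComputePI: a truncated Leibniz series so the sketch ends. */
static void *compute_pi(void *arg) {
    (void)arg;                 /* the file name is unused in this sketch */
    double sum = 0.0;
    for (long k = 0; k < 1000000; k++)
        sum += (k % 2 == 0 ? 4.0 : -4.0) / (2.0 * k + 1.0);
    pi_estimate = sum;
    return NULL;
}

/* Stand-in for PrintClassList: prints one line instead of a real list. */
static void *print_class_list(void *arg) {
    printf("printing class list from %s\n", (const char *)arg);
    class_list_printed = 1;
    return NULL;
}

/* Start both procedures as independent threads and wait for them.
 * Returns 0 on success, -1 if a thread could not be created. */
int run_both(void) {
    pthread_t pi_thread, list_thread;

    if (pthread_create(&pi_thread, NULL, compute_pi, "pi.txt") != 0)
        return -1;
    if (pthread_create(&list_thread, NULL, print_class_list,
                       "clist.text") != 0) {
        pthread_join(pi_thread, NULL);
        return -1;
    }

    pthread_join(pi_thread, NULL);
    pthread_join(list_thread, NULL);

    /* After the joins, both procedures have run to completion. */
    assert(class_list_printed == 1);
    return 0;
}
```

Because the two threads are scheduled independently, the class list can be printed while the pi computation is still running.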

- 9. Threads and processes
  - Most modern OSes (NT, modern UNIX, etc.) therefore support two entities:
    - the process, which defines the address space and general process attributes (such as open files, etc.)
    - the thread, which defines a sequential execution stream within a process
  - A thread is bound to a single process / address space
    - address spaces, however, can have multiple threads executing within them
    - sharing data between threads is cheap: all see the same address space
    - creating threads is cheap too!
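
To illustrate why sharing data between threads is cheap, here is a small sketch with POSIX threads. The names `shared_value`, `writer`, and `thread_shares_memory` are made up for this example. No mapping or copying is set up: the new thread simply receives a pointer, because the same addresses are valid in every thread of the process.

```c
#include <assert.h>
#include <pthread.h>

int shared_value = 0;          /* lives in the process's data segment */

static void *writer(void *arg) {
    int *p = arg;              /* the same address is valid in every thread */
    *p = 42;
    return NULL;
}

/* Returns the value seen by the creating thread after the writer
 * thread has finished, or -1 if the thread could not be created. */
int thread_shares_memory(void) {
    pthread_t t;
    shared_value = 0;

    if (pthread_create(&t, NULL, writer, &shared_value) != 0)
        return -1;

    /* Joining also orders the writer's store before our read. */
    int rc = pthread_join(t, NULL);
    assert(rc == 0);
    (void)rc;

    return shared_value;
}
```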

- 10. Single and Multithreaded Processes

- 11. Benefits
  - Responsiveness: interactive applications can perform two tasks at the same time (rendering, spell checking)
  - Resource sharing: sharing resources between threads is easy
  - Economy: resource allocation between threads is fast (no protection issues)
  - Utilization of MP architectures: seamlessly assign multiple threads to multiple processors (if available). The future appears to be multi-core anyway.

- 12. Thread Design Space (address spaces vs. threads)
  - one thread/process, one process: MS/DOS
  - many threads/process, one process: Java
  - one thread/process, many processes: older UNIXes
  - many threads/process, many processes: Mach, NT, Chorus, Linux, …

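- 13. (old) Process address space (figure: the address space runs from 0x00000000 to 0x7FFFFFFF and contains code (text segment), static data (data segment), heap (dynamically allocated memory), and stack (dynamically allocated memory); the PC points into the code and the SP into the stack)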

- 14. (new) Address space with threads (figure: the address space runs from 0x00000000 to 0x7FFFFFFF and contains code (text segment), static data (data segment), heap (dynamically allocated memory), and a separate stack for each of threads 1, 2, and 3; each thread has its own PC (T1, T2, T3) into the shared code and its own SP (T1, T2, T3) into its own stack)

- 15. Types of Threads

- 16. Thread types
  - User threads: thread management is done by a user-level threads library. The kernel does not know about these threads.
  - Kernel threads: supported directly by the kernel, and so carry more overhead than user threads. Examples: Windows XP/2000, Solaris, Linux, Mac OS X
  - User threads map onto kernel threads

- 17. Multithreading Models
  - Many-to-One: many user-level threads are mapped to a single kernel thread
  - If one thread blocks inside the kernel, none of the other threads can run
  - Examples: Solaris Green Threads, GNU Portable Threads

- 18. Multithreading Models One-to-One: Each user-level thread maps to kernel thread

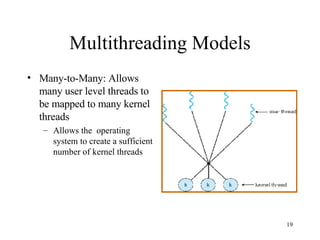

- 19. Multithreading Models
  - Many-to-Many: allows many user-level threads to be mapped to many kernel threads
  - Allows the operating system to create a sufficient number of kernel threads

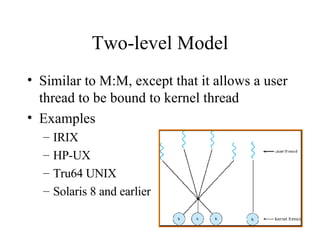

- 20. Two-level Model
  - Similar to M:M, except that it also allows a user thread to be bound to a kernel thread
  - Examples: IRIX, HP-UX, Tru64 UNIX, Solaris 8 and earlier

- 21. Threads Implementation
  - Two ways:
    - Provide a library entirely in user space with no kernel support. Invoking a function in the API -> local function call
    - Kernel-level library supported by the OS. Invoking a function in the API -> system call
  - Three primary thread libraries:
    - POSIX Pthreads (may be kernel-level (KL) or user-level (UL))
    - Win32 threads (KL)
    - Java threads (UL)

- 22. Threading Issues

- 23. Threading Issues
  - Semantics of fork() and exec() system calls
  - Thread cancellation
  - Signal handling
  - Thread pools
  - Thread-specific data
  - Scheduler activations

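- 24. Semantics of fork() and exec()
  - Does fork() duplicate only the calling thread or all threads?
  - In POSIX, fork() duplicates only the calling thread; exec() replaces the whole process, including all of its threads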

- 25. Thread Cancellation
  - Terminating a thread before it has finished
  - Two general approaches:
    - Asynchronous cancellation terminates the target thread immediately
    - Deferred cancellation allows the target thread to periodically check whether it should be cancelled
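
Deferred cancellation can be sketched with POSIX threads, where it is the default cancellation type. In this illustration (the names `worker` and `cancel_worker` are hypothetical), the worker checks for a pending cancellation request once per loop iteration by calling `pthread_testcancel()`.

```c
#include <assert.h>
#include <pthread.h>

static void *worker(void *arg) {
    (void)arg;
    for (;;) {
        /* ... one unit of work would go here ... */

        /* Explicit cancellation point: the thread exits here if a
         * cancellation request is pending, otherwise it continues. */
        pthread_testcancel();
    }
    return NULL;
}

/* Returns 0 if the worker terminated because it was cancelled,
 * 1 if it ended some other way, -1 on error. */
int cancel_worker(void) {
    pthread_t t;
    void *status = NULL;

    if (pthread_create(&t, NULL, worker, NULL) != 0)
        return -1;
    if (pthread_cancel(t) != 0)        /* only requests cancellation */
        return -1;

    int rc = pthread_join(t, &status); /* wait until the worker acts on it */
    assert(rc == 0);
    (void)rc;

    return status == PTHREAD_CANCELED ? 0 : 1;
}
```

The request from `pthread_cancel` takes effect only when the worker reaches a cancellation point, which gives the worker a chance to leave shared data in a consistent state first.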

- 26. Signal Handling
  - Signals are used in UNIX systems to notify a process that a particular event has occurred
  - A signal handler is used to process signals:
    - A signal is generated by a particular event
    - The signal is delivered to a process
    - The signal is handled
  - Every signal may be handled by either:
    - A default signal handler
    - A user-defined signal handler

- 27. Signal Handling
  - In multi-threaded programs, we have the following options:
    - Deliver the signal to the thread to which the signal applies (e.g. synchronous signals)
    - Deliver the signal to every thread in the process (e.g. terminate a process)
    - Deliver the signal to certain threads in the process
    - Assign a specific thread to receive all signals for the process
  - In *nix: kill -signal pid (for a process), pthread_kill(tid, signal) (for a thread)
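
The option of assigning a specific thread to receive signals can be sketched with POSIX calls. This is one possible arrangement, not the only one: the signal is blocked before the thread is created, so the new thread inherits the mask, and the dedicated thread collects the signal synchronously with `sigwait`. The names `signal_thread`, `received_signal`, and `deliver_to_dedicated_thread` are invented for the example, and `SIGUSR1` is an arbitrary choice.

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <pthread.h>
#include <signal.h>

int received_signal = 0;       /* set by the dedicated thread */

static void *signal_thread(void *arg) {
    const sigset_t *set = arg;
    int sig = 0;
    /* Wait synchronously for one of the signals in the set. */
    if (sigwait(set, &sig) == 0)
        received_signal = sig;
    return NULL;
}

/* Returns the signal number collected by the dedicated thread,
 * or -1 on error. */
int deliver_to_dedicated_thread(void) {
    sigset_t set, old;
    pthread_t t;

    sigemptyset(&set);
    sigaddset(&set, SIGUSR1);

    /* Block SIGUSR1 here; threads created afterwards inherit the mask. */
    if (pthread_sigmask(SIG_BLOCK, &set, &old) != 0)
        return -1;
    if (pthread_create(&t, NULL, signal_thread, &set) != 0)
        return -1;

    /* Direct the signal at one thread rather than at the process. */
    if (pthread_kill(t, SIGUSR1) != 0)
        return -1;

    int rc = pthread_join(t, NULL);
    assert(rc == 0);
    (void)rc;

    pthread_sigmask(SIG_SETMASK, &old, NULL);  /* restore the caller's mask */
    return received_signal;
}
```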

- 28. Thread Pools
  - Do you remember the multithreading scenario in a web server? It has two problems:
    - Time required to create the thread
    - No bound on the number of threads

- 29. Thread Pools
  - Create a number of threads in a pool where they await work
  - Advantages:
    - Usually slightly faster to service a request with an existing thread than to create a new thread
    - Allows the number of threads in the application(s) to be bounded by the size of the pool
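
A minimal thread pool might look like the sketch below, assuming POSIX threads. Everything here (`pool_t`, `pool_init`, `pool_submit`, `pool_shutdown`, `pool_demo`, and the sizes `POOL_SIZE` and `QUEUE_CAP`) is a hypothetical design chosen for brevity. A fixed set of workers waits on a condition variable and takes tasks from a bounded queue, so both problems from the previous slide are addressed: no thread is created per request, and the thread count is bounded.

```c
#include <assert.h>
#include <pthread.h>

#define POOL_SIZE 4            /* fixed number of worker threads */
#define QUEUE_CAP 64           /* maximum number of queued tasks */

typedef void (*task_fn)(void *);
typedef struct { task_fn fn; void *arg; } task_t;

typedef struct {
    pthread_t workers[POOL_SIZE];
    task_t queue[QUEUE_CAP];   /* circular buffer of pending tasks */
    int head, count, shutting_down;
    pthread_mutex_t lock;
    pthread_cond_t not_empty;
} pool_t;

static void *worker_main(void *arg) {
    pool_t *p = arg;
    for (;;) {
        pthread_mutex_lock(&p->lock);
        while (p->count == 0 && !p->shutting_down)
            pthread_cond_wait(&p->not_empty, &p->lock);
        if (p->count == 0) {   /* shutting down and the queue is drained */
            pthread_mutex_unlock(&p->lock);
            return NULL;
        }
        task_t t = p->queue[p->head];
        p->head = (p->head + 1) % QUEUE_CAP;
        p->count--;
        pthread_mutex_unlock(&p->lock);
        t.fn(t.arg);           /* run the task outside the lock */
    }
}

void pool_init(pool_t *p) {
    p->head = p->count = p->shutting_down = 0;
    pthread_mutex_init(&p->lock, NULL);
    pthread_cond_init(&p->not_empty, NULL);
    for (int i = 0; i < POOL_SIZE; i++) {
        int rc = pthread_create(&p->workers[i], NULL, worker_main, p);
        assert(rc == 0);       /* a sketch: real code would report the error */
        (void)rc;
    }
}

/* Returns 0 if the task was queued, -1 if the queue is full or closing. */
int pool_submit(pool_t *p, task_fn fn, void *arg) {
    int rc = -1;
    pthread_mutex_lock(&p->lock);
    if (p->count < QUEUE_CAP && !p->shutting_down) {
        p->queue[(p->head + p->count) % QUEUE_CAP] = (task_t){ fn, arg };
        p->count++;
        pthread_cond_signal(&p->not_empty);
        rc = 0;
    }
    pthread_mutex_unlock(&p->lock);
    return rc;
}

/* Lets the workers finish the queued tasks, then joins them. */
void pool_shutdown(pool_t *p) {
    pthread_mutex_lock(&p->lock);
    p->shutting_down = 1;
    pthread_cond_broadcast(&p->not_empty);
    pthread_mutex_unlock(&p->lock);
    for (int i = 0; i < POOL_SIZE; i++)
        pthread_join(p->workers[i], NULL);
    pthread_mutex_destroy(&p->lock);
    pthread_cond_destroy(&p->not_empty);
}

/* Demo: count how many of n submitted tasks were executed. */
static int done = 0;
static pthread_mutex_t done_lock = PTHREAD_MUTEX_INITIALIZER;

static void count_task(void *arg) {
    (void)arg;
    pthread_mutex_lock(&done_lock);
    done++;
    pthread_mutex_unlock(&done_lock);
}

int pool_demo(int n) {
    pool_t p;
    done = 0;
    pool_init(&p);
    for (int i = 0; i < n; i++)
        if (pool_submit(&p, count_task, NULL) != 0)
            break;             /* queue full: stop submitting */
    pool_shutdown(&p);
    return done;
}
```

In this design a full queue makes `pool_submit` fail rather than block, which is one simple way to push back on callers; a server could instead wait for space or reject the request.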

- 30. Thread-Specific Data
  - Allows each thread to have its own copy of data
  - Useful when you do not have control over the thread creation process (e.g., when using a thread pool)
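
With POSIX threads, thread-specific data can be sketched using a key created once and a per-thread value attached to it. The names `request_key`, `make_key`, `handle`, and `tsd_demo` are hypothetical. Each thread stores its own heap-allocated integer under the same key and reads back only its own copy.

```c
#include <assert.h>
#include <pthread.h>
#include <stdlib.h>

static pthread_key_t request_key;
static pthread_once_t key_once = PTHREAD_ONCE_INIT;

/* Create the key once; free() runs on each thread's value at thread exit. */
static void make_key(void) {
    int rc = pthread_key_create(&request_key, free);
    assert(rc == 0);
    (void)rc;
}

static void *handle(void *arg) {
    int *in_out = arg;

    pthread_once(&key_once, make_key);

    int *slot = malloc(sizeof *slot);
    if (slot == NULL)
        return NULL;
    *slot = *in_out;
    pthread_setspecific(request_key, slot);   /* this thread's private copy */

    /* Code deeper in the call chain can fetch the value without it
     * being passed as a parameter. */
    int *mine = pthread_getspecific(request_key);
    *in_out = *mine * 10;                     /* report a result back */
    return NULL;
}

/* Returns 1 if each thread saw only its own value, 0 otherwise,
 * -1 if a thread could not be created. */
int tsd_demo(void) {
    int a = 1, b = 2;
    pthread_t ta, tb;

    if (pthread_create(&ta, NULL, handle, &a) != 0)
        return -1;
    if (pthread_create(&tb, NULL, handle, &b) != 0) {
        pthread_join(ta, NULL);
        return -1;
    }
    pthread_join(ta, NULL);
    pthread_join(tb, NULL);

    return a == 10 && b == 20;
}
```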

- 31. Scheduler Activations
  - Both the M:M and two-level models require communication to maintain the appropriate number of kernel threads allocated to the application
  - Use an intermediate data structure called an LWP (lightweight process)
    - CPU-bound -> one LWP
    - I/O-bound -> multiple LWPs

- 32. Scheduler Activations
  - Scheduler activations provide upcalls, a communication mechanism from the kernel to the thread library
  - This communication allows an application to maintain the correct number of kernel threads

- 33. OS Examples

- 34. Windows XP Threads
  - Implements the one-to-one mapping
  - Each thread contains:
    - A thread id
    - A register set
    - Separate user and kernel stacks
    - A private data storage area

- 35. Linux Threads
  - Linux refers to them as tasks rather than threads
  - Thread creation is done through the clone() system call
  - clone() allows a child task to share the address space of the parent task (process)
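
A Linux-only sketch of `clone()` with address-space sharing follows, using the glibc wrapper. The helper names `child_fn` and `clone_demo`, the 64 KiB stack size, and the particular flag combination (`CLONE_VM | SIGCHLD`) are assumptions made for this illustration; real thread libraries pass many more flags. Because of `CLONE_VM`, the child's write to a global variable is visible to the parent.

```c
#define _GNU_SOURCE            /* required for the glibc clone() wrapper */
#include <assert.h>
#include <sched.h>
#include <signal.h>
#include <stdlib.h>
#include <sys/wait.h>

static int shared = 0;

/* Runs in the child task, inside the parent's address space. */
static int child_fn(void *arg) {
    (void)arg;
    shared = 7;
    return 0;
}

/* Returns the value of `shared` seen by the parent afterwards,
 * or -1 on error. */
int clone_demo(void) {
    const size_t stack_size = 64 * 1024;
    char *stack = malloc(stack_size);
    if (stack == NULL)
        return -1;

    shared = 0;
    /* The stack grows downwards on most architectures, so pass its top.
     * CLONE_VM shares the address space; SIGCHLD lets waitpid() see
     * the child's exit. */
    pid_t pid = clone(child_fn, stack + stack_size, CLONE_VM | SIGCHLD, NULL);
    if (pid == -1) {
        free(stack);
        return -1;
    }

    int status = 0;
    if (waitpid(pid, &status, 0) == -1) {
        free(stack);
        return -1;
    }
    assert(WIFEXITED(status)); /* the child returns normally in this sketch */

    free(stack);
    return shared;             /* written by the child, visible here */
}
```

Without `CLONE_VM` the child would get its own copy of the address space, as with `fork()`, and the parent would still see 0.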

- 36. Conclusion
  - Kernel threads:
    - More robust than user-level threads
    - Allow impersonation
    - Easier to tune the OS CPU scheduler to handle multiple threads in a process
    - A thread waiting on a kernel resource (like I/O) does not stop the process from running
  - User-level threads:
    - A lot faster if programmed correctly
    - Can be better tuned for the exact application
  - Note that user-level threads can be done on any OS

- 37. Conclusion
  - Each thread shares the address space with all the other threads in the process (only its registers, PC, and stack pointer are its own)
  - They can read/write the exact same variables, so they need to synchronize themselves
  - They can access each other’s runtime stacks, so be very careful if you communicate using runtime stack variables
  - Each thread should be able to retrieve a unique thread id that it can use to access thread-local storage
  - Multi-threading is great, but use it wisely
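
The need for threads to synchronize when they write the same variables can be illustrated with a mutex-protected counter. This is a sketch assuming POSIX threads; `counter`, `bump`, `synchronized_count`, and the constants are names chosen for the example. Without the lock, the increments from different threads could interleave and some updates could be lost.

```c
#include <assert.h>
#include <pthread.h>

#define NTHREADS   4
#define INCREMENTS 100000

static long counter = 0;       /* shared by all threads */
static pthread_mutex_t counter_lock = PTHREAD_MUTEX_INITIALIZER;

static void *bump(void *arg) {
    (void)arg;
    for (int i = 0; i < INCREMENTS; i++) {
        pthread_mutex_lock(&counter_lock);
        counter++;             /* read-modify-write, done under the lock */
        pthread_mutex_unlock(&counter_lock);
    }
    return NULL;
}

/* Runs NTHREADS threads that each add INCREMENTS to the counter and
 * returns the final total. */
long synchronized_count(void) {
    pthread_t threads[NTHREADS];
    counter = 0;

    for (int i = 0; i < NTHREADS; i++) {
        int rc = pthread_create(&threads[i], NULL, bump, NULL);
        assert(rc == 0);       /* a sketch: real code would report the error */
        (void)rc;
    }
    for (int i = 0; i < NTHREADS; i++)
        pthread_join(threads[i], NULL);

    return counter;
}
```

With the lock in place, the total equals `NTHREADS * INCREMENTS` on every run.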