Speaking on the record: Combining interviews with search log analysis in user research.

- 1. Knoxville • ALISE • 24 September 2019 Speaking on the Record: Combining Interviews with Search Log Analysis in User Research Lynn Silipigni Connaway, Ph.D., Director of Library Trends and User Research, OCLC • Peggy Gallagher, MLS, MMC, Market Analysis Manager, OCLC • Brittany Brannon, MLIS, MA, Research Support Specialist, OCLC • Christopher Cyr, Ph.D., Associate Research Scientist, OCLC

- 2. Lynn Silipigni Connaway Director of Library Trends and User Research connawal@oclc.org @LynnConnaway Peggy Gallagher Market Analysis Manager gallaghp@oclc.org @PeggyGal1 Christopher Cyr Associate Research Scientist cyrc@oclc.org @ChrisCyr19 Erin Hood Research Support Specialist hoode@oclc.org @ErinMHood1 Brittany Brannon Research Support Specialist brannonb@oclc.org Research team

- 3. Theoretical Background • Log analysis to collect large amounts of unbiased user data (Jansen 2006, Connaway and Radford 2017) • Logs used to study how people use online systems • Catalog Search failure rates • Behavior of digital library users • Use of e-journals • User experience with video and music streaming services (Hunter 1991; Jamali, Nicholas, and Huntington 2005; Lamkhede and Das 2019; Nouvellet, et al. 2019)

- 4. Theoretical Background • Problems with log analysis • Ambiguity of log events • Actions not captured in logs • Combining log analysis with user interviews • Asked questions about search and analyzed transaction logs (Connaway, Budd, and Kochtanek 1995) • No indication that combining search logs with individual interviews has been used since

- 5. Discovery and Access Project: How do academic library users navigate the path from discovery to access? • What do academic users do when searches don't result in fulfillment? • What differentiates searches that lead to access from searches that don’t? • What demographic characteristics influence the access of users? • How does access correlate with success?

- 6. WORLDCAT DISCOVERY SEARCH LOG ANALYSIS “Log analysis is everything that a lab study is not.” (Jansen 2017, 349)

- 7. What do the raw logs tell us? 1. Did a keyword search but mistyped it - Had 0 results 2. Redid keyword search with correct spelling - Had 759,902 results 3. Began typing in additional keyword 4. Selected one of the autosuggested keyword phrases - Had 1,761 results

- 8. Ways of evolving a search • Corrected search: shows greater than 90% similarity with the previous search string • Refined search: shows 80–90% similarity with the previous search string, or has the first string contained in the second, or an index change • New search: shows less than 80% similarity with the previous search string

- 9. Summary of results • Average of 5 minutes per session • Average of 2.2 searches per session • Average of 5.1 words per search • 12% of sessions had search refinements • 33% of sessions had multiple searches n=282,307 sessions

- 10. Types of Requests • Search results: The user made a request for search results. This could include a new search, refinement of an existing search, or the addition of limiters. • Online access attempt: The user clicked an item or made a request to digitally access the full text of the item. • Attempt to save: The user attempted to export or otherwise save the citation. • Physical access attempt: The user clicked an item or made a request to place a hold on a physical copy of the item. • Physical access option: Some users left the system after looking at holdings, where they were able to identify the physical item call number and/or location. These users were categorized as having the option to physically access the item.

- 11. All click events vs. Last requests by type of request [Bar chart comparing last requests (n=274,346 requests) with all click events (n=1,961,168 events); legend: Search results, Physical access option, Online access attempt. Data labels (last requests / all click events): 39% / 54% for search results; the remaining pairs are 20% / 19%, 30% / 16%, 5% / 6%, and 2% / 2%.] While search results account for over half (54%) of all click events, they account for just over a third (39%) of last requests

- 12. Probability of fulfillment

  | Predictor | Example 1 | Example 2 | Example 3 |
  | --- | --- | --- | --- |
  | Number of searches | 2 | 2 | 2 |
  | Number of search refinements | 0 | 0 | 0 |
  | Words per search | 2 | 7 | 2 |
  | Results per search | 1000 | 1000 | 1000 |
  | Keyword limiter (1 if yes, 0 if no) | 1 | 1 | 1 |
  | Author limiter (1 if yes, 0 if no) | 0 | 0 | 1 |
  | Title limiter (1 if yes, 0 if no) | 0 | 0 | 0 |
  | Chance of Fulfillment | 69.09% | 70.32% | 84.76% |
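The three examples on slide 12 differ in only one input at a time, so their fulfillment chances can be compared as odds. The sketch below is plain arithmetic on the three percentages shown on the slide. The function and variable names are hypothetical, and the slide does not say what kind of model produced these probabilities, so nothing here should be read as the study's own calculation.

```python
def odds(p: float) -> float:
    """Convert a probability between 0 and 1 into odds (p : 1 - p)."""
    return p / (1.0 - p)


def odds_ratio(p_base: float, p_other: float) -> float:
    """How many times larger the odds are under p_other than under p_base."""
    return odds(p_other) / odds(p_base)


# Chances of fulfillment as printed on the slide.
BASELINE = 0.6909      # 2 words per search, keyword limiter only
LONGER_QUERY = 0.7032  # 7 words per search, keyword limiter only
WITH_AUTHOR = 0.8476   # 2 words per search, keyword and author limiters

if __name__ == "__main__":
    print(f"7 words vs. 2 words: {odds_ratio(BASELINE, LONGER_QUERY):.2f}")
    print(f"author limiter added: {odds_ratio(BASELINE, WITH_AUTHOR):.2f}")
```

On these figures, five extra words raise the odds of fulfillment by about 6%, while adding the author limiter multiplies them roughly 2.5 times.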

- 13. USER INTERVIEWS “User interviews can help capture search and discovery behavior as the user understands it, rather than as a computer system understands it.” (Connaway, Cyr, Brannon, Gallagher, and Hood 2019)

- 14. Example questions • “Please tell us what you were looking for and why you decided to do an online search.” • “Did the item you were searching for come up in your search results? In other words, did you find it?” • “I’d like to understand how you felt about your search experience overall. Would you say you were delighted with your search experience?”

- 15. What do the interviews tell us? What the logs told us: • Began keyword search but mistyped it o Had 0 results • Redid keyword search with correct spelling o Had 759,902 results • Began typing in additional keyword • Selected one of the autosuggested phrases o Had 1,761 results

- 16. What the logs told us: • Began keyword search but mistyped it o Had 0 results • Redid keyword search with correct spelling o Had 759,902 results • Began typing in additional keyword • Selected one of the autosuggested phrases o Had 1,761 results What do the interviews tell us? • Just starting work on a paper on a broad topic; didn’t yet have a direction for the paper • Was overwhelmed with number of search results • Abandoned “library search” to do “Google searching” to better determine a direction for the paper • Later came back to the library search and found it useful • Also received help from student workers in the library • Felt “prepared” to use the library search due to 1st-year library instruction

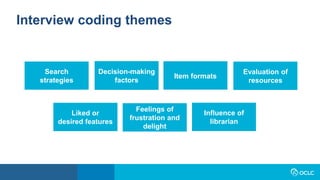

- 17. Interview coding themes Item formats Search strategies Decision-making factors Liked or desired features Evaluation of resources Feelings of frustration and delight Influence of librarian

- 18. METHODOLOGY CHALLENGES AND BENEFITS “The methodology used for this study also could be extended beyond discovery systems. Other computerized activities that leave digital traces could be studied using interview protocols based on log analysis.” (Connaway, Cyr, Brannon, Gallagher, and Hood 2019)

- 19. Challenges of methodology (Tandem use of log data and user interviews) • Resource intensive: time consuming, multiple team members, multiple IRBs • High level of expertise required

- 20. Benefits of methodology (Tandem use of log data and user interviews) • Provide context for quantitative data • Clarify qualitative data • Most effective when digital traces are present • Inform development of literacy instruction

- 21. Impact of Study • Collaborate internally in new ways • Identify why and what users did during the search and when acquiring resources • Develop a new methodology for studying user behaviors • Influence product and system development

- 22. ACKNOWLEDGEMENTS We would like to thank the librarians and library users who participated in this research. We also thank additional OCLC staff who assisted with the project: Jay Holloway and Ralph LeVan for assisting with data collection and analysis and Nick Spence for his assistance in preparing this presentation.

- 23. References
  - Connaway, Lynn Silipigni, John M. Budd, and Thomas R. Kochtanek. 1995. “An Investigation of the Use of an Online Catalog: User Characteristics and Transaction Log Analysis.” Library Resources and Technical Services 39, no. 2: 142–152.
  - Connaway, Lynn Silipigni, Chris Cyr, Brittany Brannon, Peggy Gallagher, and Erin Hood. 2019. “Speaking on the Record: Combining Interviews with Search Log Analysis in User Research.” ALISE/ProQuest Methodology Paper Competition Award Winner.
  - Connaway, Lynn Silipigni, and Marie L. Radford. 2017. Research Methods in Library and Information Science, 6th ed. Santa Barbara, CA: Libraries Unlimited.
  - Connaway, Lynn Silipigni, and Marie L. Radford. 2018. Survey Research. Webinar presented by ASIS&T, January 23. https://www.slideshare.net/LynnConnaway/survey-research-methods-with-lynn-silipigni-connaway and https://www.youtube.com/watch?v=4dlpAT7MXh0.
  - Hunter, Rhonda N. 1991. “Successes and Failures of Patrons Searching the Online Catalog at a Large Academic Library: A Transaction Log Analysis.” RQ 30, no. 3: 395–402. https://www.jstor.org/stable/25828813.
  - Jamali, Hamid R., David Nicholas, and Paul Huntington. 2005. “The Use and Users of Scholarly E-Journals: A Review of Log Analysis Studies.” Aslib Proceedings 57, no. 5: 554–571. https://doi.org/10.1108/00012530510634271.
  - Jansen, Bernard J. 2006. “Search Log Analysis: What It Is, What’s Been Done, How to Do It.” Library and Information Science Research 28, no. 3: 407–432.
  - Jansen, Bernard J. 2017. “Log Analysis.” In Research Methods in Library and Information Science, 6th ed., edited by Lynn Silipigni Connaway and Marie L. Radford, 348–349. Santa Barbara, CA: Libraries Unlimited.
  - Lamkhede, Sudarshan, and Sudeep Das. 2019. “Challenges in Search on Streaming Services: Netflix Case Study.” In the Proceedings of SIGIR ’19, July 21–25, 2019, Paris, France. https://arxiv.org/pdf/1903.04638.pdf.
  - Nouvellet, Adrien, Florence D’Alché-Buc, Valérie Baudouin, Christophe Prieur, and François Roueff. 2019. “A Quantitative Analysis of Digital Library User Behaviour Based on Access Logs.” Qualitative and Quantitative Methods in Libraries 7, no. 1: 1–13.

- 24. Questions & Discussion Lynn Silipigni Connaway, PhD connawal@oclc.org @LynnConnaway Peggy Gallagher, MLS, MMC gallaghp@oclc.org @PeggyGallagher Christopher Cyr, PhD cyrc@oclc.org @ChrisCyr19 Brittany Brannon brannonb@oclc.org Erin M. Hood, MLIS hoode@oclc.org @ErinMHood1

Editor's Notes

- Lynn

- Lynn

- Lynn

- Brittany We started by analyzing the data that was already available to us: search logs from WorldCat Discovery. Benefit: “One gets real behaviors from real users using real systems interacting with real information. Log analysis is everything that a lab study is not.” (Jansen 2017, 349) Caveat: Log data is trace data, meaning it is a trace left behind of what a user did, and it must be interpreted.

- Brittany One of the first things that we had to establish was what the search logs could tell us about user behavior, so we looked manually at some logs to determine what type of information we could glean. The logs could tell us the type of search (index); the search terms or string; the elements of the system interacted with, in this case the autosuggest feature; and the evolution of searches in a search session. Part of this process was working with libraries to recruit participants so we could connect logs with the users who created them. Logs in the system are anonymous: they can’t be tied to the user who generated them. The participants we recruited gave us the ID from their search, which we then used to pull all of the log events associated with that search. On the left-hand side of this slide you can (maybe) see the search log for this participant, CBU09. What we found in interpreting the logs was that this participant started with a mistyped keyword search that returned 0 results. They corrected their spelling and got over 700,000 results. They then began typing an additional word into their keyword search and selected one of the autosuggested keyword phrases, which returned just under 2,000 results. Once we had interpreted the participant logs, we used that information to build an interview protocol. Peggy will talk about the interview process a little later. CBU09 - Female, 19-25, undergraduate, humanities, Christian Brothers University

- Brittany One thing that CBU09’s search session demonstrates is the different ways in which users can evolve a search during a single session. To perform bulk analysis of the search logs, which we wanted to do to provide context for our interview data, we needed to be able to distinguish those different techniques quantitatively. Based on quantitative analysis that was manually reviewed, we established the three categories you see here. A corrected search was defined as being more than 90% similar to the previous search string. That primarily includes misspellings, as seen in step 2 of CBU09’s search. A refined search was defined as 80–90% similarity, but in manually reviewing, we also determined the need to include any instance in which the first search string was contained in the second search string, or in which the search string remained the same but the index was changed. You saw this in steps 3 and 4 of CBU09’s search. The final category we established was a new search, which showed less than 80% similarity with the previous search string. We want to emphasize that this does not necessarily mean that they began searching for a different topic or type of material (although it certainly could). Instead, it represents a search where they changed tactics and began searching using a new or meaningfully different search string.
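The three categories described above can be sketched as a small classifier. This is an illustration under stated assumptions, not the study's code: the notes do not name the similarity measure, so the standard-library `difflib.SequenceMatcher` ratio stands in for it. The function name `classify_search`, the index labels, and the decision to check the containment and index-change rules before the percentage thresholds are all hypothetical.

```python
from difflib import SequenceMatcher


def classify_search(prev_query: str, prev_index: str,
                    query: str, index: str) -> str:
    """Label a search as 'corrected', 'refined', or 'new' relative to the previous one."""
    prev_norm = prev_query.strip().lower()
    norm = query.strip().lower()

    # Same string, different index (e.g. keyword -> title) counts as a refinement.
    if prev_norm == norm and prev_index != index:
        return "refined"

    # The earlier string is contained in the later one: the user added terms.
    if prev_norm and prev_norm != norm and prev_norm in norm:
        return "refined"

    # Assumption: SequenceMatcher.ratio() stands in for the unspecified similarity score.
    score = SequenceMatcher(None, prev_norm, norm).ratio()
    if score > 0.90:
        return "corrected"
    if score >= 0.80:
        return "refined"
    return "new"


if __name__ == "__main__":
    print(classify_search("climte change", "kw", "climate change", "kw"))
    print(classify_search("climate change", "kw", "climate change policy", "kw"))
    print(classify_search("climate change", "kw", "medieval poetry", "kw"))
```

Checking containment first means that a query such as "climate" followed by "climate change policy" is labeled a refinement even though its string similarity is low, which matches the manual-review rule described in the notes.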

- Brittany Once we had established those categories, we could begin looking at the overall patterns in our search logs. For the bulk log analysis, we looked at all of the logs in the Discovery system for April of 2018. We found that on average, a search session lasted 5 minutes and contained just over two searches, with an average of about 5 words per search. Twelve percent of sessions showed search refinements and 33% of sessions showed multiple searches.
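The session-level figures above are simple aggregates, and a minimal sketch of how they could be computed follows. The `Session` record and its fields are hypothetical; the real WorldCat Discovery log format is not described in this talk.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Session:
    """Hypothetical per-session record derived from raw log events."""
    duration_minutes: float
    searches: list[str]   # search strings, in the order they were issued
    refinements: int      # number of searches labeled as refinements


def summarize(sessions: list[Session]) -> dict[str, float]:
    """Compute the five summary statistics reported for the bulk log analysis."""
    all_searches = [q for s in sessions for q in s.searches]
    n = len(sessions)
    return {
        "avg_minutes_per_session": mean(s.duration_minutes for s in sessions),
        "avg_searches_per_session": mean(len(s.searches) for s in sessions),
        "avg_words_per_search": mean(len(q.split()) for q in all_searches),
        "pct_sessions_with_refinements": 100 * sum(s.refinements > 0 for s in sessions) / n,
        "pct_sessions_with_multiple_searches": 100 * sum(len(s.searches) > 1 for s in sessions) / n,
    }


if __name__ == "__main__":
    demo = [
        Session(4.0, ["climte change", "climate change", "climate change policy"], 1),
        Session(6.0, ["medieval poetry"], 0),
    ]
    for name, value in summarize(demo).items():
        print(f"{name}: {value:.2f}")
```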

- Christopher Log events for last requests and for the full sessions were categorized in the following way: Online Access Attempt: The user clicked an item or made a request to digitally access the full text of the item. Physical Access Attempt: The user clicked an item or made a request to place a hold on a physical copy of the item. Physical Access Option: Some users left the system after looking at holdings. In these cases, users were in a place in the system where they were able to identify the physical item call number and/or location. The interviews revealed that at least some of the participants looked for the item on the library shelf at this point. Since logs cannot reveal what users did after leaving the system, these users were categorized as having the option to physically access the item. Attempt to Save: The user made an attempt to export or otherwise save the citation. Search Results: The user made a request for search results. This could include a new search, refinement of an existing search, or the addition of limiters. Other: These requests and click events did not fit cleanly into any category, and there were no overarching themes to them.

- Christopher While 54% of the total click events were to generate search results, only 39% of users left on a search results page. More than 50% of users left the system with a request to access an item online, an attempt to access an item physically, or an option to find its physical location. This discrepancy between the categories of all requests and the categories of last requests suggests that users were more likely to leave the system on a successful note. However, the logs cannot show whether the access attempts were successful, so conclusions must be drawn cautiously.
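The comparison between all click events and last requests can be sketched as two tallies over the same sessions. The category labels and the list-of-lists input are hypothetical stand-ins for the categorized log events, and this is not the project's own analysis code.

```python
from collections import Counter


def percent_shares(counts: Counter) -> dict[str, float]:
    """Turn raw category counts into percentages of the total."""
    total = sum(counts.values())
    if total == 0:
        return {}
    return {category: 100 * n / total for category, n in counts.items()}


def compare_all_vs_last(sessions: list[list[str]]) -> tuple[dict[str, float], dict[str, float]]:
    """Return (shares across all events, shares across each session's last request)."""
    all_events = Counter(cat for events in sessions for cat in events)
    # Only the final categorized event of each non-empty session counts as its last request.
    last_requests = Counter(events[-1] for events in sessions if events)
    return percent_shares(all_events), percent_shares(last_requests)


if __name__ == "__main__":
    demo = [
        ["search_results", "search_results", "online_access_attempt"],
        ["search_results"],
    ]
    all_shares, last_shares = compare_all_vs_last(demo)
    print("all events:", all_shares)
    print("last requests:", last_shares)
```

In the toy data, search results make up a larger share of all events than of last requests, which is the same direction of difference the slide reports.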

- Christopher

- Peggy As mentioned earlier, our methodology consisted of both bulk log analysis and user interviews. We conducted semi-structured interviews, using the critical incident technique. How many of you are familiar with the critical incident technique? For those of you who aren't familiar with it, the critical incident technique is a methodology in which a subject is asked to reflect on a specific incident and discuss the cause, description, and outcome of the incident, as well as their feelings about and perceptions of the situation. Sources: Connaway, Cyr, Brannon, Gallagher, and Hood 2019; Connaway and Radford 2018.

- Peggy We developed specific protocols for each of our interviewees based on what we learned from their search logs. The protocols included some standard questions, such as what they were looking for and why, whether they were successful (for example, if they were doing a known-item search, whether the item appeared in their search results), and whether they were delighted and/or frustrated with their search experience.

- Peggy Looking again at the same interviewee that Brittany spoke of, CBU09, we knew from the search log what she did: she misspelled her search term, corrected the spelling, had over half a million results, refined her search by adding another search phrase, had nearly 2,000 results, and then that was it. She appears to have abandoned the search. CBU09 - Female, 19-25, undergraduate, humanities, Christian Brothers University

- Peggy What we learned from her interview, however, was that she was just getting started searching for resources for a paper and hadn't yet narrowed down her topic. She was overwhelmed by how many search results she got, and so she did indeed abandon the library search. However, she came back to the library search tool at a later time, after she had done some Googling and homed in on her topic. She told us that she found the library search tool very helpful once she knew what she wanted to write about, and that she appreciated the help she received from some student workers. She also mentioned that because of the library instruction she had received in her first year, she felt confident in using the library. CBU09 - Female, 19-25, undergraduate, humanities, Christian Brothers University

- Peggy So, once we had conducted all of our interviews, we reviewed the interview transcripts, developed a code book, and then had two different team members code each of the interviews. The themes that emerged from the interviews tended to fall into seven different buckets. Interviewees talked about the various search strategies they used and the factors that went into their decisions about which resources they wanted to access. As we've learned in several other studies over the years, convenience and ease of access are major factors. They talked about the formats they were most interested in, with PDFs being the most mentioned. They talked about how they evaluate resources, such as considering the type of study reported in a particular article and whether the article was peer-reviewed. They mentioned features they like about the current library search tool as well as features they wished it had. Many of them talked about being satisfied with their search experience but wouldn't go as far as saying they were delighted by it, and they spoke of some of their frustrations, the top one being too many search results. And many of them spoke favorably about the impact that working with a librarian or receiving library instruction had on their confidence in their information-seeking abilities. So, that's a quick review of what we did and how we did it. I'm going to turn things back over to Lynn, who's going to talk about some of the challenges and benefits of the methodology we used for this study.

- Lynn

- Lynn

- Lynn It’s changing the way we think, the way we work together. Research goes beyond the academic… influencing product development. We earn the right to share our insights by listening.