Tailoring machine learning practices to support prescriptive analytics

- 1. Tailoring Machine Learning Practices to Support Prescriptive Analytics
  - Anthony Melson
  - (Title-slide diagram terms: Data, Optimization, Decision Science, Induction OR Deduction, Statistics, What-If, Business Processes, Cost/Benefit, NLP, Classification, Regression)

- 2. Narrowing the Scope
  - Subject Matter
    - Models: Classifiers
    - Problems: Decision (Yes/No) Goals
    - Probabilistic and Label Outputs
    - Deterministic and Non-Deterministic Decision-Making
  - Strategy 1: Design Classifiers for Both Types of Decisions
  - Strategy 2: Incorporate Knowledge of Prescriptive Use into the Machine Learning Pipeline

- 3. A Closer Look at Classifiers

- 4. Traditional Classifier Pipeline
  - Abstract
  - Accuracy
  - Indifference
  - Class Labels
  - Little Postprocessing

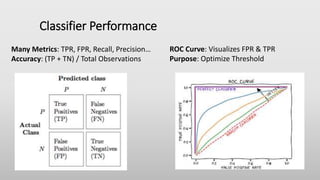

- 5. Classifier Performance
  - Many Metrics: TPR (Recall), FPR, Precision…
  - Accuracy: (TP + TN) / Total Observations
  - ROC Curve: Plots TPR Against FPR Across Decision Thresholds
  - Purpose: Optimize Threshold
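
To make the metrics on this slide concrete, here is a minimal pure-Python sketch, assuming binary labels coded 1 = positive and 0 = negative. It computes accuracy, TPR, FPR and precision from predicted labels, and traces ROC points by sweeping the threshold over every distinct score. The function names are illustrative and not taken from any library.

```python
def confusion_counts(y_true, y_pred):
    """Return (TP, FP, TN, FN) for binary labels coded 1 = positive, 0 = negative."""
    tp = fp = tn = fn = 0
    for t, p in zip(y_true, y_pred):
        if p == 1 and t == 1:
            tp += 1
        elif p == 1:
            fp += 1
        elif t == 0:
            tn += 1
        else:
            fn += 1
    return tp, fp, tn, fn


def basic_metrics(y_true, y_pred):
    """Accuracy, TPR (recall), FPR and precision; a rate with an empty denominator is reported as 0.0."""
    tp, fp, tn, fn = confusion_counts(y_true, y_pred)
    return {
        "accuracy": (tp + tn) / (tp + fp + tn + fn),
        "tpr": tp / (tp + fn) if tp + fn else 0.0,
        "fpr": fp / (fp + tn) if fp + tn else 0.0,
        "precision": tp / (tp + fp) if tp + fp else 0.0,
    }


def roc_points(y_true, scores):
    """(threshold, FPR, TPR) for each candidate threshold, predicting positive when score >= threshold."""
    thresholds = [float("inf")] + sorted(set(scores), reverse=True)
    points = []
    for th in thresholds:
        m = basic_metrics(y_true, [1 if s >= th else 0 for s in scores])
        points.append((th, m["fpr"], m["tpr"]))
    return points


# Hypothetical scores for five validation examples.
y_true = [1, 0, 1, 0, 1]
scores = [0.9, 0.8, 0.7, 0.3, 0.2]
print(basic_metrics(y_true, [1 if s >= 0.5 else 0 for s in scores]))
for th, fpr, tpr in roc_points(y_true, scores):
    print(f"threshold={th}: FPR={fpr:.2f}, TPR={tpr:.2f}")
```

Each ROC point corresponds to one threshold, which is why the curve is a tool for choosing where to put the threshold rather than a single score.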

- 6. Two Types of Classifiers
  - Label Classifier
    - Predicts Label
    - Doesn’t Account for Uncertainty
    - Label Is a Decision
    - Modifiable Thresholds
  - Probabilistic Classifier
    - Predicts Probability of Labels
    - Accounts for Uncertainty (Risk Scores)
    - Decisions Require Additional Steps
  - (Diagram: "Creditworthy" vs. "Not CW" separated by a class boundary, with an uncertain space where ~0 < x < ~1)

- 7. A Look From Above (diagram panels: Label Output; Probability Output)

- 8. What Changes When We Consider Prescription

- 9. Real-World Complexity
  - Stakeholders: Risk Attitudes, Outcomes
  - Organizations: Business Objectives
  - Weighted Outcomes: FP vs. FN; TP vs. TN
  - How Does ML Fit In?

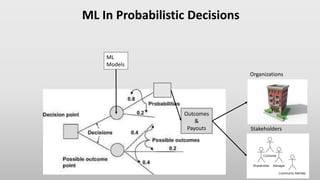

- 10. ML in Probabilistic Decisions (diagram elements: ML Models; Outcomes & Payouts; Organizations; Stakeholders)

- 11. ML in Deterministic Decisions
  - (Diagram elements: ML Models; Outcomes & Payouts; Organizations; Stakeholders)
  - (Example: an uncertain decision between "Crew Works on Interior" and "Crew Works on Exterior", depending on whether it Will Rain or Will Not)
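
The crew example can be sketched as an expected-value comparison. The payoffs below are hypothetical placeholders, since the slides give no numbers: interior work is assumed to be worth 60 whatever the weather, while exterior work is assumed to be worth 100 on a dry day and -50 on a rainy one. A probabilistic model supplies `p_rain`, and the deterministic choice is whichever action has the higher expected payoff.

```python
# Hypothetical payoffs for one day of work; the slides do not give numbers.
PAYOFFS = {
    "interior": {"rain": 60.0, "no_rain": 60.0},    # indoor work is unaffected by weather
    "exterior": {"rain": -50.0, "no_rain": 100.0},  # outdoor work is lost if it rains
}


def expected_payoff(action, p_rain):
    """Expected payoff of an action given the model's probability of rain."""
    outcome = PAYOFFS[action]
    return p_rain * outcome["rain"] + (1 - p_rain) * outcome["no_rain"]


def choose_action(p_rain):
    """Deterministic decision: the action with the highest expected payoff."""
    return max(PAYOFFS, key=lambda action: expected_payoff(action, p_rain))


for p in (0.1, 0.3, 0.5):
    print(p, choose_action(p), round(expected_payoff("exterior", p), 1))
```

Under these assumed payoffs, exterior work wins only when `p_rain` < 40/150 ≈ 0.27, so a label classifier using the default 0.5 threshold would send the crew outside on days this rule keeps them indoors.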

- 12. Strategy 1: How Can ML Experts Respond to These Challenges?

|  | Label Output | Probability Output |
| --- | --- | --- |
| Modifications | Move Decision Threshold | Pass Probabilities to Utility Functions |
| Organizations | Align Threshold with Objectives | Account for Objectives as Utilities |
| Weighted Outcomes | Trade Off FP, TP, FN, TN Accordingly | Risk Mitigation (Hedging) |
| Stakeholders | Account for Risk Attitudes | Deliberation |

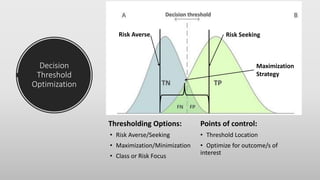

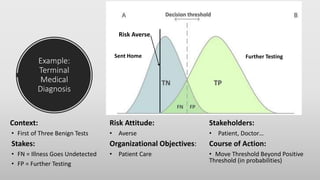

- 13. Decision Threshold Optimization
  - Thresholding Options:
    - Risk Averse/Seeking
    - Maximization/Minimization
    - Class or Risk Focus
  - Points of Control:
    - Threshold Location
    - Optimize for Outcome(s) of Interest
  - (Diagram panels: Risk Averse; Risk Seeking; Maximization Strategy)
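
One way to act on these points of control is to pick the threshold that maximizes total value on validation data instead of accuracy. This is a minimal sketch, assuming a value can be assigned to each outcome (TP, FP, TN, FN); the data and cost figures in the usage example are hypothetical. Costs are entered as negative values, so minimizing cost and maximizing value become the same search.

```python
def total_value(y_true, y_prob, threshold, value):
    """Sum of outcome values when predicting positive for y_prob >= threshold."""
    total = 0.0
    for t, p in zip(y_true, y_prob):
        if p >= threshold:
            total += value["tp"] if t == 1 else value["fp"]
        else:
            total += value["fn"] if t == 1 else value["tn"]
    return total


def best_threshold(y_true, y_prob, value, candidates=None):
    """Candidate threshold with the highest total value (the first one wins ties)."""
    if candidates is None:
        candidates = [i / 100 for i in range(101)]
    return max(candidates, key=lambda th: total_value(y_true, y_prob, th, value))


# Hypothetical validation data and costs: a missed positive costs 10, a false alarm costs 1.
y_true = [1, 0, 1, 0, 0]
y_prob = [0.35, 0.3, 0.8, 0.1, 0.6]
value = {"tp": 0.0, "tn": 0.0, "fp": -1.0, "fn": -10.0}

th = best_threshold(y_true, y_prob, value)
print(th, total_value(y_true, y_prob, th, value))    # value-optimal threshold
print(0.5, total_value(y_true, y_prob, 0.5, value))  # default threshold
```

A risk-averse stance toward missed positives shows up here as a large negative `fn` value, which pulls the chosen threshold down; swapping the magnitudes would push it up.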

- 14. Example: Terminal Medical Diagnosis
  - Context: First of Three Benign Tests
  - Stakes:
    - FN = Illness Goes Undetected
    - FP = Further Testing
  - Risk Attitude: Averse
  - Organizational Objectives: Patient Care
  - Stakeholders: Patient, Doctor…
  - Course of Action: Move the Threshold (in Probabilities) So That Borderline Cases Go to Further Testing Rather Than Home
  - (Diagram: a risk-averse threshold separating "Sent Home" from "Further Testing")
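
The risk-averse threshold in this example can be made explicit with a standard cost-sensitive argument. Assume the model's output $p = P(\text{ill} \mid x)$ is calibrated, correct decisions cost nothing, and $C_{FN}$ and $C_{FP}$ are the costs of a missed illness and of an unnecessary further test. Sending the patient home then has expected cost $p\,C_{FN}$ and further testing has expected cost $(1-p)\,C_{FP}$, so further testing is the better choice when

$$
p\,C_{FN} > (1 - p)\,C_{FP}
\quad\Longleftrightarrow\quad
p > t^{*} = \frac{C_{FP}}{C_{FP} + C_{FN}}
$$

With hypothetical costs in which a missed illness is 19 times as costly as an extra test ($C_{FN} = 19\,C_{FP}$), this gives $t^{*} = 1/20 = 0.05$, far below the default threshold of 0.5.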

- 15. Example: Terminal Medical Diagnosis (Variation)
  - Context: Only One Test
  - Stakes:
    - FN = Illness Goes Undetected
    - FP = High-Risk Surgery
  - Risk Attitude: ?
  - Organizational Objectives: Patient Care
  - Stakeholders: Patient, Doctor…
  - Course of Action: ?
  - (Diagram: a threshold "?" separating "Sent Home" from "High-Risk Surgery")

- 16. Things to Think About
  - Can Be a Maximization/Minimization Tool
    - Threshold for Utility/EMV (Expected Monetary Value)
    - Minimize Risk
  - Order/Cost of Information
    - Sequence
    - Price/Risk
  - Label Can Be Used in Deterministic Systems
    - Business Processes
    - Great for Automated Decisions

- 17. Connection With Utility Function
  - Advantages:
    - Hedge Decisions
    - Maximize Utility
    - Account for Risks of Multiple Decisions
    - Combine Outputs from Multiple Models
    - Individual or Batches
    - Assess Risk
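
Passing probabilities to a utility function can be sketched as follows, assuming an exponential (constant absolute risk aversion) utility $u(x) = 1 - e^{-x/R}$ with risk tolerance $R$. This functional form and the approve/decline numbers in the usage example are assumptions for illustration, not taken from the slides. The point is that the same classifier probability can lead to different decisions depending on the stakeholder's risk attitude.

```python
import math


def exp_utility(x, risk_tolerance):
    """Exponential (CARA) utility; a larger risk_tolerance behaves closer to risk-neutral."""
    return 1.0 - math.exp(-x / risk_tolerance)


def expected_utility(outcomes, risk_tolerance):
    """outcomes: list of (probability, monetary payoff) pairs for one option."""
    return sum(p * exp_utility(x, risk_tolerance) for p, x in outcomes)


def decide(p_good, gain, loss, risk_tolerance):
    """Approve or decline, given the classifier's probability that the case is good."""
    options = {
        "approve": [(p_good, gain), (1.0 - p_good, -loss)],
        "decline": [(1.0, 0.0)],
    }
    return max(options, key=lambda name: expected_utility(options[name], risk_tolerance))


# Hypothetical case: the model says 90% good; approving gains 100 or loses 500.
print(decide(0.9, 100, 500, risk_tolerance=1e6))  # nearly risk-neutral
print(decide(0.9, 100, 500, risk_tolerance=200))  # risk-averse
```

Here the expected monetary value of approving is 0.9 × 100 - 0.1 × 500 = 40, so the nearly risk-neutral decider approves, while the risk-averse one declines because the concave utility penalizes the possible loss of 500 more heavily.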

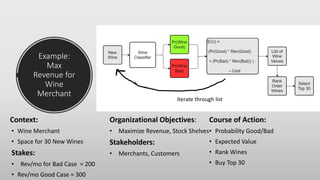

- 18. Example: Max Revenue for Wine Merchant
  - Context: Wine Merchant; Space for 30 New Wines
  - Stakes:
    - Rev/mo for Bad Case = 200
    - Rev/mo for Good Case = 300
  - Organizational Objectives: Maximize Revenue, Stock Shelves
  - Stakeholders: Merchants, Customers
  - Course of Action:
    - Probability Good/Bad
    - Expected Value
    - Rank Wines
    - Buy Top 30
    - Iterate Through List
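
The course of action on this slide fits in a few lines, assuming the model supplies the probability that each candidate wine is a good case and that the slide's revenue figures (300/mo good, 200/mo bad) apply to every wine. The wine names and probabilities in the usage example are hypothetical.

```python
def expected_revenue(p_good, rev_good=300.0, rev_bad=200.0):
    """Expected monthly revenue of one wine, using the slide's good/bad revenue figures."""
    return p_good * rev_good + (1.0 - p_good) * rev_bad


def pick_wines(p_good_by_wine, shelf_slots=30):
    """Rank candidate wines by expected revenue and keep the top `shelf_slots`."""
    ranked = sorted(
        p_good_by_wine,
        key=lambda wine: expected_revenue(p_good_by_wine[wine]),
        reverse=True,
    )
    return ranked[:shelf_slots]


# Hypothetical model outputs: probability that each wine is a "good case".
candidates = {"wine_a": 0.9, "wine_b": 0.2, "wine_c": 0.6}
print(pick_wines(candidates, shelf_slots=2))
```

With identical payoffs for every wine, ranking by expected value gives the same order as ranking by probability; the expected-value step starts to matter once payoffs differ per wine or other objectives are folded in.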

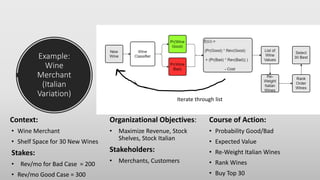

- 19. Example: Wine Merchant (Italian Variation)
  - Context: Wine Merchant; Shelf Space for 30 New Wines
  - Stakes:
    - Rev/mo for Bad Case = 200
    - Rev/mo for Good Case = 300
  - Organizational Objectives: Maximize Revenue, Stock Shelves, Stock Italian Wines
  - Stakeholders: Merchants, Customers
  - Course of Action:
    - Probability Good/Bad
    - Expected Value
    - Re-Weight Italian Wines
    - Rank Wines
    - Buy Top 30
    - Iterate Through List
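
One way to express "Re-Weight Italian Wines" is to multiply the expected revenue of Italian wines by a preference weight before ranking. The multiplicative scheme and the default weight of 1.1 are assumptions for illustration; choosing the weight is a business decision, and a hard quota on Italian wines would be another option.

```python
def expected_revenue(p_good, rev_good=300.0, rev_bad=200.0):
    """Expected monthly revenue of one wine, using the slide's good/bad revenue figures."""
    return p_good * rev_good + (1.0 - p_good) * rev_bad


def pick_wines_weighted(wines, shelf_slots=30, italian_weight=1.1):
    """wines maps name -> (p_good, is_italian); Italian wines get their score multiplied by italian_weight."""
    def score(name):
        p_good, is_italian = wines[name]
        ev = expected_revenue(p_good)
        return ev * italian_weight if is_italian else ev

    return sorted(wines, key=score, reverse=True)[:shelf_slots]


# Hypothetical candidates: (probability of a good case, is the wine Italian?)
wines = {"barolo": (0.5, True), "rioja": (0.6, False), "merlot": (0.3, False)}
print(pick_wines_weighted(wines, shelf_slots=1))                      # with the preference weight
print(pick_wines_weighted(wines, shelf_slots=1, italian_weight=1.0))  # plain expected value
```

Note that the weighted score is no longer revenue: it mixes revenue with the organization's preference, which is exactly the trade-off the weight is meant to encode.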

- 20. Things to Think About
  - Batch vs. Individual
    - Calibration (Especially for People)
    - Difference in Risk Attitudes
  - Utilities Other Than Money
    - Ethics, Laws, Norms
    - Predictability
    - Health
    - Anything Hard to Put a Monetary Value On
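
Expected-value reasoning treats model scores as probabilities, so it is worth checking calibration before relying on them, whether decisions are made in batches or one at a time. This is a minimal sketch of a reliability table (mean predicted probability vs. observed frequency per bin) and the Brier score; the equal-width binning and the bin count are assumptions, and the data in the usage example is hypothetical.

```python
def reliability_table(y_true, y_prob, n_bins=5):
    """Per non-empty equal-width bin: (bin index, count, mean predicted probability, observed positive rate)."""
    bins = [[] for _ in range(n_bins)]
    for t, p in zip(y_true, y_prob):
        idx = min(int(p * n_bins), n_bins - 1)  # p == 1.0 falls in the last bin
        bins[idx].append((t, p))
    table = []
    for idx, members in enumerate(bins):
        if members:
            mean_pred = sum(p for _, p in members) / len(members)
            observed = sum(t for t, _ in members) / len(members)
            table.append((idx, len(members), mean_pred, observed))
    return table


def brier_score(y_true, y_prob):
    """Mean squared difference between predicted probability and the 0/1 outcome (lower is better)."""
    return sum((p - t) ** 2 for t, p in zip(y_true, y_prob)) / len(y_true)


y_true = [0, 1, 1, 0]
y_prob = [0.1, 0.9, 0.8, 0.2]
for row in reliability_table(y_true, y_prob, n_bins=2):
    print(row)
print(brier_score(y_true, y_prob))
```

For a well-calibrated model, the mean predicted probability and the observed rate in each bin should be close; large gaps mean expected values computed from the scores will be off.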

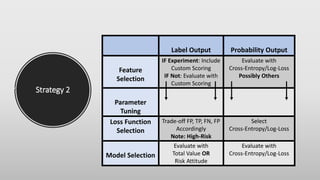

- 21. Strategy 2

|  | Label Output | Probability Output |
| --- | --- | --- |
| Feature Selection / Parameter Tuning | If Experimenting: Include Custom Scoring; If Not: Evaluate with Custom Scoring | Evaluate with Cross-Entropy/Log-Loss, Possibly Others |
| Loss Function Selection | Trade Off FP, TP, FN, TN Accordingly (Note: High-Risk) | Select Cross-Entropy/Log-Loss |
| Model Selection | Evaluate with Total Value OR Risk Attitude | Evaluate with Cross-Entropy/Log-Loss |

- 22. Decisions in the ML Pipeline
  - Feature Selection
  - Algorithm Selection
  - Loss Function
  - Parameter Tuning

- 23. How Do We Make These Decisions?
  - In the Abstract:
    - Evaluation Metrics (Usually Accuracy)
    - Previous Experience
  - In a Business Context:
    - Based on Outcomes

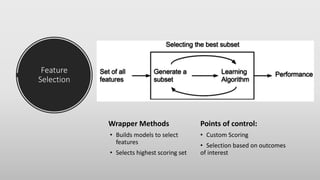

- 24. Feature Selection
  - Wrapper Methods:
    - Build Models to Select Features
    - Select the Highest-Scoring Set
  - Points of Control:
    - Custom Scoring
    - Selection Based on Outcomes of Interest
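
A wrapper method with custom scoring can be sketched as greedy forward selection in which the caller supplies the evaluation function. In practice `evaluate` would fit a model on the candidate feature subset and return a business score, such as total value on validation data; in the usage example it is a hypothetical stand-in with made-up per-feature gains and a fixed cost per feature, so no model is actually trained.

```python
def forward_select(features, evaluate, max_features):
    """Greedy forward selection (a wrapper method).

    evaluate(subset) should fit a model on the feature subset and return a score
    where higher is better, e.g. total value on validation data instead of accuracy.
    """
    selected, best_score = [], float("-inf")
    remaining = list(features)
    while remaining and len(selected) < max_features:
        # Score every one-feature extension of the current subset.
        scored = [(evaluate(selected + [f]), f) for f in remaining]
        score, feature = max(scored, key=lambda pair: pair[0])
        if score <= best_score:  # stop when no extension improves the score
            break
        selected.append(feature)
        remaining.remove(feature)
        best_score = score
    return selected, best_score


# Hypothetical stand-in for "fit a model and compute business value":
# made-up per-feature gains minus a fixed cost of 2 per feature used.
GAINS = {"income": 5.0, "age": 1.0, "debt": 4.0}


def toy_evaluate(subset):
    return sum(GAINS[f] for f in subset) - 2.0 * len(subset)


print(forward_select(GAINS, toy_evaluate, max_features=3))
```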

- 25. Hyperparameter Tuning
  - Search Types:
    - Grid Search
    - Random Search
    - Many Others
  - Points of Control:
    - Custom Scoring
    - Selection Based on Outcomes of Interest
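
Grid search and random search differ only in which parameter combinations they try; the point of control is the scoring callback. This sketch assumes `evaluate(params)` returns a business score where higher is better. The parameter names and the scoring function in the usage example are hypothetical; in practice `evaluate` would train a model with those settings and return its value on validation data.

```python
import itertools
import random


def grid_search(param_grid, evaluate):
    """Try every combination in param_grid (name -> list of values); return the best (params, score)."""
    names = sorted(param_grid)
    best_params, best_score = None, float("-inf")
    for values in itertools.product(*(param_grid[name] for name in names)):
        params = dict(zip(names, values))
        score = evaluate(params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score


def random_search(param_grid, evaluate, n_iter, seed=0):
    """Try n_iter randomly sampled combinations (with replacement); return the best (params, score)."""
    rng = random.Random(seed)
    names = sorted(param_grid)
    best_params, best_score = None, float("-inf")
    for _ in range(n_iter):
        params = {name: rng.choice(param_grid[name]) for name in names}
        score = evaluate(params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score


# Hypothetical search space and scoring function standing in for "train, then compute value".
GRID = {"max_depth": [1, 2, 3, 4], "threshold": [0.1, 0.3, 0.5]}


def toy_evaluate(params):
    return -(params["max_depth"] - 3) ** 2 - (params["threshold"] - 0.3) ** 2


print(grid_search(GRID, toy_evaluate))
print(random_search(GRID, toy_evaluate, n_iter=5))
```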

- 26. Loss Function
  - Loss Functions:
    - Cross-Entropy
    - Hinge Loss
    - Many Others
  - Points of Control:
    - Selection Based on Use Case
    - Selection Based on Outcomes
    - Generation Based on Outcomes
    - Note: Risky to Modify
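
For reference, here is a minimal sketch of binary cross-entropy (log-loss), a class-weighted variant, and hinge loss. The weighted version is one assumed illustration of generating a loss based on outcomes, and it also shows why modifying the loss is risky: with weights $w_{pos}$ and $w_{neg}$, the expected loss is minimized by the shifted probability $w_{pos} q / (w_{pos} q + w_{neg}(1 - q))$ rather than the true positive-class frequency $q$, so the outputs stop being calibrated probabilities. The weights and inputs in the usage example are hypothetical.

```python
import math


def log_loss(y_true, y_prob, eps=1e-15):
    """Mean binary cross-entropy; probabilities are clipped to avoid log(0)."""
    total = 0.0
    for t, p in zip(y_true, y_prob):
        p = min(max(p, eps), 1.0 - eps)
        total -= t * math.log(p) + (1 - t) * math.log(1.0 - p)
    return total / len(y_true)


def weighted_log_loss(y_true, y_prob, w_pos, w_neg, eps=1e-15):
    """Cross-entropy with separate weights for positive and negative examples (averaged over examples)."""
    total = 0.0
    for t, p in zip(y_true, y_prob):
        p = min(max(p, eps), 1.0 - eps)
        total -= w_pos * t * math.log(p) + w_neg * (1 - t) * math.log(1.0 - p)
    return total / len(y_true)


def hinge_loss(y_true, margins):
    """Mean hinge loss; labels 0/1 are mapped to -1/+1, margins are raw decision scores."""
    return sum(max(0.0, 1.0 - (2 * t - 1) * m) for t, m in zip(y_true, margins)) / len(y_true)


y = [1, 0, 1]
probs = [0.8, 0.3, 0.4]
print(log_loss(y, probs))
print(weighted_log_loss(y, probs, w_pos=5.0, w_neg=1.0))  # hypothetical 5:1 weighting
print(hinge_loss(y, [2.0, -0.5, 0.2]))
```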

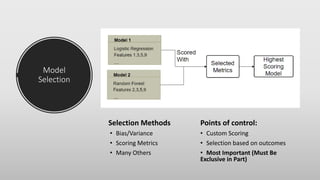

- 27. Model Selection
  - Selection Methods:
    - Bias/Variance
    - Scoring Metrics
    - Many Others
  - Points of Control:
    - Custom Scoring
    - Selection Based on Outcomes
    - Most Important (Must Be Exclusive in Part)
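
Model selection with custom scoring can be sketched as choosing the candidate with the best score on held-out data. The validation labels, predictions and outcome values below are hypothetical, chosen to show that accuracy and total value can favor different models.

```python
def accuracy(y_true, y_pred):
    """Share of predictions that match the true labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def total_value(y_true, y_pred, value):
    """Sum of outcome values; value maps 'tp', 'fp', 'tn', 'fn' to a number."""
    outcome = {(1, 1): "tp", (0, 1): "fp", (0, 0): "tn", (1, 0): "fn"}
    return sum(value[outcome[(t, p)]] for t, p in zip(y_true, y_pred))


def select_model(predictions, y_true, score):
    """Name of the candidate whose held-out predictions score highest under score(y_true, y_pred)."""
    return max(predictions, key=lambda name: score(y_true, predictions[name]))


# Hypothetical held-out labels, two candidate models' predicted labels, and outcome values.
Y_VAL = [1, 1, 0, 0, 0, 0]
PREDS = {"model_a": [1, 0, 0, 0, 0, 0], "model_b": [1, 1, 1, 1, 0, 0]}
VALUE = {"tp": 0.0, "tn": 0.0, "fp": -1.0, "fn": -10.0}

print(select_model(PREDS, Y_VAL, accuracy))                                   # favors model_a
print(select_model(PREDS, Y_VAL, lambda yt, yp: total_value(yt, yp, VALUE)))  # favors model_b
```

`model_a` is more accurate (5/6 vs. 4/6) but misses a positive, which the assumed costs make far more expensive than `model_b`'s two false alarms.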

- 29. Conclusions
  - Don’t Over-Focus on Accuracy
    - Outcomes
    - Context
    - Stakeholders
    - Organizations
  - Keep the Use Case in the Process
    - Choose the Right Classifier
    - Make Decisions Based on Application
  - Work With Domain Experts and Prescriptive Analysts
    - Model Consumption/Utilization
    - Get Utilities and Risk Attitudes