Testing

- 1. SOFTWARE TESTING. Sonali Chauhan, SYBSC IT, UDIT. Reference: Software Engineering: A Practitioner's Approach, R.S. Pressman & Associates, Inc.

- 2. INTRODUCTION: Testing is a critical element of software quality assurance. Software must be tested to uncover as many errors as possible before delivery to the customer. This is where software testing techniques come into the picture.

- 3. Testing techniques provide systematic guidance for designing tests that: (1) exercise the internal logic of software components; (2) exercise the input and output domains of the program to uncover errors in program function, behavior, and performance. Software is tested from two perspectives: the internal logic of software components is exercised using “white-box” test case design techniques, and software requirements are exercised using “black-box” test case design techniques.

- 4. In both cases, the intent is to find the maximum number of errors with the minimum amount of effort and time.

- 5. WHO TESTS THE SOFTWARE? The developer understands the system but will test “gently”, and is driven by “delivery”. The independent tester must learn about the system but will attempt to break it, and is driven by quality.

- 6. TESTABILITY FEATURES: operability, observability, controllability, decomposability, simplicity, stability, understandability.

- 7. TESTING OBJECTIVES: Testing is the process of executing a program with the intent of finding an error. A good test case is one that has a high probability of finding an as-yet-undiscovered error. A successful test is one that uncovers an as-yet-undiscovered error. Our objective is to design tests that systematically uncover different classes of errors with a minimum amount of time and effort. If testing is conducted successfully, it will uncover errors in the software.

- 8. PRINCIPLES OF TESTING: Traceability: all tests should be traceable to customer requirements. Planning: tests should be planned long before testing begins. Execution: testing should begin “in the small” and progress toward testing “in the large”.

- 9. Pareto: the Pareto principle implies that 80% of all errors uncovered during testing will likely be traceable to 20% of all program components. Exhaustive testing: exhaustive testing is not possible. Moderation: to be most effective, testing should be conducted by an independent third party.

- 10. TEST CASE DESIGN: Designing tests is difficult. Recall that tests should find the maximum number of errors with the minimum effort and time.

- 11. White-box testing: knowing the internal workings of a product, tests can be conducted to ensure that “all gears mesh”, i.e., that internal operations are adequately exercised. Black-box testing: knowing the specified function that a product has been designed to perform, tests can be conducted that demonstrate each function is fully operational.

- 12. WHITE-BOX TESTING: Close examination of procedural detail. The goal is to ensure that all conditions and statements, including those inside loops, have been executed at least once. It is also known as “glass-box testing”.

- 13. Software engineers can derive test cases that: guarantee that all independent paths within a module have been exercised at least once; exercise all logical decisions on their true and false sides; execute all loops at their boundaries and within their operational bounds; exercise internal data structures to ensure their validity.

- 14. WHY WHITE-BOX TESTING? Logic errors and incorrect assumptions are inversely proportional to a path's execution probability. We often believe that a logical path is not likely to be executed; in fact, it may be executed on a regular basis. Typographical errors are random; it is likely that untested paths will contain some.

- 15. I. BASIS PATH TESTING: Basis path testing is a white-box testing technique. It enables the test case designer to derive a logical complexity measure of a procedural design and use it to define a basis set of execution paths. It involves: flow graph notation, cyclomatic complexity, and deriving test cases.

- 16. 1. Flow Graph Notation: depicts the logical control flow of a program.

- 17. 2. CYCLOMATIC COMPLEXITY: The number of regions of the flow graph corresponds to the cyclomatic complexity. It can also be computed as V(G) = E - N + 2, where E is the number of flow graph edges and N is the number of flow graph nodes, or as V(G) = P + 1, where P is the number of predicate nodes.
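The two formulas for V(G) can be checked against each other on a small example. The sketch below assumes a made-up flow graph (the node numbers and edges are illustrative, not taken from the slides) in which every predicate node has exactly two outgoing edges.

```python
# Hypothetical flow graph: node -> list of successor nodes.
# Node 1 models an if/else decision and node 4 models a loop test.
flow_graph = {
    1: [2, 3],
    2: [4],
    3: [4],
    4: [5, 6],
    5: [4],
    6: [],  # exit node
}


def complexity_from_edges(graph):
    """V(G) = E - N + 2 for a flow graph with a single entry and exit."""
    num_nodes = len(graph)
    num_edges = sum(len(successors) for successors in graph.values())
    return num_edges - num_nodes + 2


def complexity_from_predicates(graph):
    """V(G) = P + 1, assuming each predicate node has two outgoing edges."""
    num_predicates = sum(1 for successors in graph.values() if len(successors) == 2)
    return num_predicates + 1


print(complexity_from_edges(flow_graph))       # 7 edges, 6 nodes
print(complexity_from_predicates(flow_graph))  # 2 predicate nodes
```

Both functions give 3 for this graph, so a basis set for it would contain three independent paths.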

- 18. 3. DERIVING TEST CASES: (1) Draw the flow graph. (2) Determine the cyclomatic complexity. (3) Determine a basis set of linearly independent paths. (4) Prepare test cases that will execute each path in the basis set.

- 19. II. GRAPH MATRICES: A graph matrix is a square matrix whose size (i.e., number of rows and columns) is equal to the number of nodes on a flow graph. Each row and column corresponds to an identified node, and matrix entries correspond to connections (edges) between nodes. By adding a link weight to each matrix entry, the graph matrix can become a powerful tool for evaluating program control structure during testing.
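As a rough illustration, a graph matrix can be built from an edge list using the simplest link weight: 1 where a connection exists and 0 otherwise. The edge list below is a hypothetical six-node flow graph, and the helper names are invented for this sketch. With that weighting, each row containing two or more entries marks a predicate node, and summing (entries - 1) over the non-empty rows and adding 1 gives the cyclomatic complexity.

```python
# Hypothetical flow graph given as (source, destination) node pairs.
edges = [(1, 2), (1, 3), (2, 4), (3, 4), (4, 5), (4, 6), (5, 4)]
num_nodes = 6


def build_graph_matrix(edge_list, size):
    """Square matrix: entry [i][j] is 1 if an edge runs from node i+1 to node j+1."""
    matrix = [[0] * size for _ in range(size)]
    for src, dst in edge_list:
        matrix[src - 1][dst - 1] = 1  # link weight 1 = "a connection exists"
    return matrix


def complexity_from_matrix(matrix):
    """Sum (connections - 1) over rows that have connections, then add 1."""
    row_totals = [sum(row) for row in matrix]
    return sum(total - 1 for total in row_totals if total > 0) + 1


graph_matrix = build_graph_matrix(edges, num_nodes)
for row in graph_matrix:
    print(row)
print(complexity_from_matrix(graph_matrix))
```

Other link weights (for example execution probability or processing time) could be stored in the same structure in place of the 0/1 entries.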

- 20. III. CONTROL STRUCTURE TESTING. Condition testing is a test case design method that exercises the logical conditions contained in a program module. Data flow testing selects test paths of a program according to the locations of definitions and uses of variables in the program.

- 21. IV. LOOP TESTING: simple loops, nested loops, concatenated loops, unstructured loops.

- 22. LOOP TESTING: SIMPLE LOOPS. Minimum conditions for a simple loop, where n is the maximum number of allowable passes: (1) skip the loop entirely; (2) only one pass through the loop; (3) two passes through the loop; (4) m passes through the loop, where m < n; (5) (n-1), n, and (n+1) passes through the loop.
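The simple-loop conditions translate directly into a list of pass counts to try. The sketch below is one way to generate them; `take_up_to` is a hypothetical function under test, and choosing m as n // 2 is an assumption (any typical value below n would serve).

```python
def simple_loop_pass_counts(n, m=None):
    """Pass counts to try for a simple loop that allows at most n passes."""
    if n < 1:
        raise ValueError("n must be at least 1")
    if m is None:
        m = n // 2  # assumed "typical" value; any m < n would do
    candidates = [0, 1, 2, m, n - 1, n, n + 1]
    # For small n some counts coincide, so drop duplicates.
    return sorted(set(candidates))


def take_up_to(items, limit):
    """Hypothetical function under test: copy at most `limit` items."""
    taken = []
    for item in items:
        if len(taken) >= limit:
            break
        taken.append(item)
    return taken


n = 10
for passes in simple_loop_pass_counts(n):
    result = take_up_to(list(range(passes)), n)
    # The n+1 case checks that the loop refuses to exceed its maximum.
    assert len(result) == min(passes, n)
    print(passes, len(result))
```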

- 23. LOOP TESTING: NESTED LOOPS. (1) Start at the innermost loop. (2) Set all outer loops to their minimum iteration parameter values. (3) Test the min+1, typical, max-1, and max values for the innermost loop while holding the outer loops at their minimum values. (4) Move out one loop and set it up as in step 2, holding all other loops at typical values. (5) Continue until the outermost loop has been tested.

- 24. CONCATENATED LOOPS: If the loops are independent of one another, treat each as a simple loop; otherwise, treat them as nested loops.

- 25. BLACK-BOX TESTING: Also called behavioral testing. It focuses on the functional requirements of the software. It is complementary to white-box testing and is likely to uncover a different class of errors.

- 26. Black-box testing attempts to find errors in the following categories: incorrect or missing functions; interface errors; errors in data structures or external database access; behavior or performance errors; initialization and termination errors.

- 27. Black-box tests are designed to answer: How is functional validity tested? How are system behavior and performance tested? What classes of input will make good test cases? Is the system particularly sensitive to certain input values? How are the boundaries of a data class isolated? What data rates and data volume can the system tolerate? What effect will specific combinations of data have on system operation?

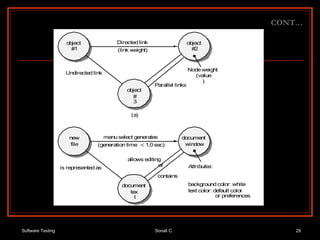

- 28. GRAPH-BASED TESTING METHOD: The first step is to understand the objects that are modeled in software and the relationships that connect these objects. Testing begins by creating a graph of the objects and the relationships among them in order to uncover errors. A graph is a collection of nodes and links.


- 30. EQUIVALENCE PARTITIONING: Focuses on test cases for input conditions. If an input condition specifies a range, one valid and two invalid equivalence classes are defined. If an input condition requires a specific value, one valid and two invalid equivalence classes are defined. If an input condition specifies a member of a set, one valid and one invalid equivalence class are defined. If an input condition is Boolean, one valid and one invalid class are defined.
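For a range condition, the rule above yields three classes: one valid and two invalid. The sketch below picks one representative value per class and runs it against a hypothetical function under test; the age range 18 to 65 and all names are assumptions made for illustration.

```python
LOW, HIGH = 18, 65  # hypothetical valid range for the input condition


def is_valid_age(age):
    """Hypothetical function under test."""
    return LOW <= age <= HIGH


# One representative value per equivalence class of the range condition.
representatives = {
    "invalid_below": LOW - 10,    # any value below the range
    "valid": (LOW + HIGH) // 2,   # any value inside the range
    "invalid_above": HIGH + 10,   # any value above the range
}
expected = {"invalid_below": False, "valid": True, "invalid_above": False}


def run_partition_tests():
    """Return, per class, whether the function behaved as expected."""
    return {
        name: is_valid_age(value) == expected[name]
        for name, value in representatives.items()
    }


print(run_partition_tests())
```

The idea is that any other value from the same class should behave the same way, so one test per class stands in for the whole class.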

- 31. BOUNDARY VALUE ANALYSIS: Selects test cases at the edges of each equivalence class. It focuses on the output domain as well as the input domain. Guidelines for BVA are:

- 32. (1) If an input condition specifies a range bounded by values a and b, test cases should be designed with the values a and b and with values just above and just below a and b. (2) If an input condition specifies a number of values, test cases should exercise the minimum and maximum numbers. (3) Apply guidelines 1 and 2 to the output domain. (4) If internal program data structures have prescribed boundaries, design test cases that exercise the data structure at its boundaries.
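A small helper can generate boundary values for a range [a, b]. This sketch assumes the common convention of testing a and b themselves plus the values one step on either side of each; the order-quantity rule (1 to 100) and the function names are hypothetical.

```python
def boundary_values(a, b, step=1):
    """Values at and immediately around both ends of the range [a, b]."""
    if a >= b:
        raise ValueError("expected a < b")
    return [a - step, a, a + step, b - step, b, b + step]


def accepts_quantity(quantity):
    """Hypothetical function under test: valid order quantity is 1 to 100."""
    return 1 <= quantity <= 100


# Pair each boundary value with the observed result.
cases = [(value, accepts_quantity(value)) for value in boundary_values(1, 100)]
for value, accepted in cases:
    print(value, accepted)
```

Only the two values just outside the range should be rejected; an off-by-one mistake in the comparison operators would show up as a wrong result at a or b.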

- 33. COMPARISON TESTING: Used only in situations in which the reliability of software is absolutely critical (e.g., human-rated systems). Separate software engineering teams develop independent versions of an application using the same specification. Each version can be tested with the same test data to ensure that all provide identical output. Then all versions are executed in parallel with real-time comparison of results to ensure consistency.
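The comparison idea can be sketched with two independently written versions of the same specification, here "return the integer square root of a non-negative integer". The choice of function and the names below are illustrative assumptions; a real setup would compare separately developed applications rather than two small functions.

```python
import math


def isqrt_version_a(n):
    """Version A: relies on the standard library."""
    return math.isqrt(n)


def isqrt_version_b(n):
    """Version B: independent implementation using binary search."""
    low, high = 0, n
    while low < high:
        mid = (low + high + 1) // 2
        if mid * mid <= n:
            low = mid
        else:
            high = mid - 1
    return low


def compare_versions(versions, test_data):
    """Run every version on the same inputs and collect any disagreements."""
    disagreements = []
    for value in test_data:
        outputs = [version(value) for version in versions]
        if len(set(outputs)) > 1:
            disagreements.append((value, outputs))
    return disagreements


print(compare_versions([isqrt_version_a, isqrt_version_b], range(1000)))
```

An empty list means the versions agreed on every input. Agreement does not prove correctness, since both versions could share the same misreading of the specification.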

- 34. ORTHOGONAL ARRAY TESTING: Used when the number of input parameters is small and the values that each of the parameters may take are clearly bounded.

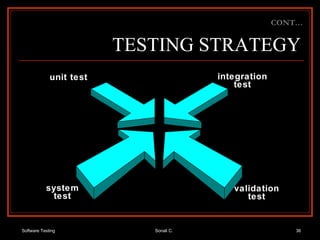

- 35. TESTING STRATEGY: We begin by “testing in the small” and move toward “testing in the large”. For conventional software, the module (component) is our initial focus, and integration of modules follows. For OO software, our focus when “testing in the small” changes from an individual module (the conventional view) to an OO class that encompasses attributes and operations and implies communication and collaboration.

- 36. TESTING STRATEGY: unit test, integration test, validation test, system test.

- 37. VERIFICATION AND VALIDATION: Software testing is one element of a broader topic known as verification and validation (V&V). Verification refers to the set of activities that ensure that the software correctly implements a specific function. Validation refers to a different set of activities that ensure that the software that has been built is traceable to customer requirements.

- 38. UNIT TESTING (figure): the software engineer applies test cases to the module to be tested and examines the results.

- 39. UNIT TESTING: Unit testing concentrates verification on the smallest element of the program, the module. Control paths are tested to uncover errors within the boundary of the module. It is white-box oriented.

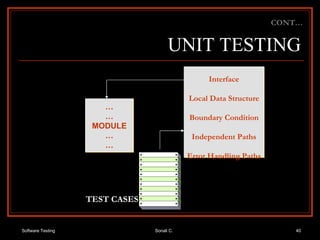

- 40. UNIT TESTING (figure): test cases are applied to the module and target its interface, local data structures, boundary conditions, independent paths, and error-handling paths.

- 41. UNIT TESTING: The module interface is tested to ensure that information properly flows into and out of the program unit being tested. The local data structure is tested to ensure that data stored temporarily maintains its integrity for all stages in an algorithm’s execution. Boundary conditions are tested to ensure that the module performs correctly at boundaries created to limit or restrict processing. All independent paths through the control structure are exercised to ensure that all statements in the module have been executed at least once. Finally, all error-handling paths are examined.

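- 42. UNIT TESTING ENVIRONMENT (figure): a driver applies test cases to the module under test, stubs stand in for its subordinate modules, and results are collected. The test cases target the interface, local data structures, boundary conditions, independent paths, and error-handling paths.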

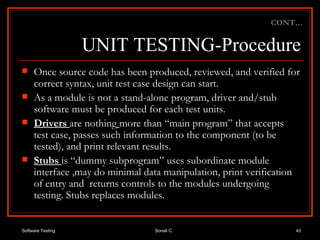

- 43. UNIT TESTING PROCEDURE: Once source code has been produced, reviewed, and verified for correct syntax, unit test case design can start. Because a module is not a stand-alone program, driver and/or stub software must be produced for each unit under test. A driver is nothing more than a “main program” that accepts test case data, passes it to the component to be tested, and prints relevant results. A stub is a “dummy subprogram” that uses the subordinate module’s interface, may do minimal data manipulation, prints verification of entry, and returns control to the module undergoing testing. Stubs replace the modules that are subordinate to the component under test.
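A minimal sketch of a driver and a stub, under invented names: `compute_invoice_total` is a hypothetical module under test that depends on a subordinate tax-lookup module, which the stub replaces. Amounts are integer cents to keep the arithmetic exact.

```python
def compute_invoice_total(items, tax_lookup):
    """Hypothetical module under test; calls a subordinate tax-lookup module."""
    subtotal = sum(price_cents * quantity for price_cents, quantity in items)
    tax_percent = tax_lookup("default")
    return subtotal + subtotal * tax_percent // 100


def tax_lookup_stub(region):
    """Stub: same interface as the real module, minimal behavior."""
    print(f"stub entered: tax_lookup({region!r})")  # verification of entry
    return 10  # fixed 10 percent, an assumed value for testing


def driver(test_cases):
    """Driver: feeds test cases to the module and prints the results."""
    outcomes = []
    for items, expected in test_cases:
        actual = compute_invoice_total(items, tax_lookup_stub)
        print(f"items={items} expected={expected} actual={actual}")
        outcomes.append(actual == expected)
    return outcomes


test_cases = [
    ([(1000, 2), (500, 1)], 2750),  # subtotal 2500 plus 10 percent tax
    ([], 0),                        # boundary case: empty invoice
]
print(driver(test_cases))
```

Passing the subordinate module in as a parameter is one design choice that makes stub substitution easy; other mechanisms, such as patching, would work as well.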

- 44. INTEGRATION TESTING: Once all the modules have been unit tested, integration testing is performed. The next problem is interfacing: data may be lost across an interface, one module may have an inadvertent adverse effect on another, and a function may not perform correctly when combined with others. Integration testing is a systematic technique for constructing the program while conducting tests to uncover errors associated with interfacing.

- 45. INTEGRATION TESTING: There are two options. The “big bang” approach tests the entire program as a whole, which creates problems because errors are hard to isolate. The alternative is an incremental construction strategy.

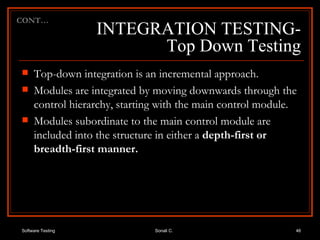

- 46. INTEGRATION TESTING (TOP-DOWN): Top-down integration is an incremental approach. Modules are integrated by moving downward through the control hierarchy, starting with the main control module. Modules subordinate to the main control module are incorporated into the structure in either a depth-first or breadth-first manner.

- 47. INTEGRATION TESTING (TOP-DOWN): For instance, in depth-first integration, selecting the left-hand path, modules M1, M2, and M5 would be integrated first. Next, M8 or M6 would be integrated. Then the central and right-hand control paths are built. Breadth-first integration incorporates all modules directly subordinate at each level, moving across the structure horizontally. From the figure, modules M2, M3, and M4 would be integrated first. The next control level (M5, M6, etc.) follows.

- 48. INTEGRATION TESTING (TOP-DOWN; figure: module hierarchy M1 to M9): The top module is tested with stubs. Stubs are replaced one at a time, “depth first”. As new modules are integrated, some subset of tests is re-run.

- 49. INTEGRATION TESTING (TOP-DOWN): The integration process is performed in a series of five steps: (1) The main control module is used as a test driver, and stubs are substituted for all modules directly subordinate to the main control module. (2) Depending on the integration technique chosen (depth-first or breadth-first), subordinate stubs are replaced one at a time with actual modules. (3) Tests are conducted as each module is integrated. (4) On completion of each set of tests, another stub is replaced with the real module. (5) Regression testing may be performed to ensure that new errors have not been introduced.

- 50. INTEGRATION TESTING (TOP-DOWN): Problem with top-down integration: processing at low levels in the hierarchy is required to adequately test upper levels. Possible solutions and their drawbacks: (1) delay tests until stubs are replaced with actual modules, which makes it difficult to determine the cause of errors; (2) develop stubs that perform limited functions to simulate the actual modules, which adds overhead as stubs become more complex; (3) integrate the software from the bottom of the hierarchy upward.

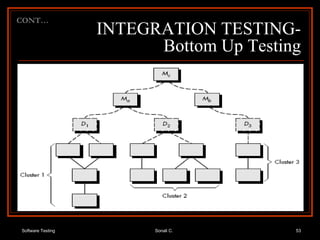

- 51. INTEGRATION TESTING (BOTTOM-UP): Begins testing with the modules at the lowest level (atomic modules). Because modules are integrated from the bottom up, the processing required for modules subordinate to a given level is always available, and the need for stubs is eliminated.

- 52. INTEGRATION TESTING (BOTTOM-UP): A bottom-up integration strategy may be implemented with the following steps: (1) Low-level modules are combined into clusters that perform a particular software subfunction. (2) A driver is written to coordinate test case input and output. (3) The cluster is tested. (4) Drivers are removed and clusters are combined, moving upward in the program structure.
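The first three bottom-up steps can be sketched with two hypothetical low-level modules combined into a cluster and exercised through a driver. All names and the record format (`name, quantity`) are assumptions made for illustration.

```python
def parse_record(line):
    """Low-level module 1: parse a 'name, quantity' text line."""
    name, quantity = line.split(",")
    return {"name": name.strip(), "qty": int(quantity)}


def validate_record(record):
    """Low-level module 2: a record needs a name and a non-negative quantity."""
    return bool(record["name"]) and record["qty"] >= 0


def cluster_driver(lines):
    """Driver: coordinates test case input and output for the cluster."""
    outcomes = []
    for line in lines:
        record = parse_record(line)
        outcomes.append((record, validate_record(record)))
    return outcomes


for record, is_valid in cluster_driver(["bolt, 3", "nut, -1"]):
    print(record, is_valid)
```

In the final step the driver would be discarded and the real higher-level module would call `parse_record` and `validate_record` directly, so no stubs are needed at any point.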


- 54. REGRESSION TESTING: Any time changes are made at any level, all previous testing must be considered invalid. This means tests must be re-run to ensure the software still passes; re-running previous tests is regression testing. Regression testing can be done at the unit, integration, and system levels. It is particularly problematic for user interface software.

- 55. SMOKE TESTING: A common approach for creating “daily builds” for product software. Smoke testing steps:

- 56. SMOKE TESTING: (1) Software components that have been translated into code are integrated into a “build”. A build includes all data files, libraries, reusable modules, and engineered components that are required to implement one or more product functions. (2) A series of tests is designed to expose errors that will keep the build from properly performing its function. The intent should be to uncover “show stopper” errors that have the highest likelihood of throwing the software project behind schedule. (3) The build is integrated with other builds, and the entire product (in its current form) is smoke tested daily. The integration approach may be top-down or bottom-up.

- 57. VALIDATION TESTING: Once the software is completely assembled as a package and interfacing errors have been identified and corrected, a final series of software tests, validation testing, begins. Validation can be defined in various ways, but a basic definition is that validation succeeds when the software functions in a manner that can reasonably be expected by the customer.

- 58. VALIDATION TESTING: validation test criteria; configuration review; alpha and beta testing.

- 59. SYSTEM TESTING: System testing is a series of different tests whose main aim is to fully exercise the computer-based system. Although each test has a different purpose, all work to verify that all system elements have been properly integrated and perform their allocated functions. Below we consider various system tests for computer-based systems.

- 60. SYSTEM TESTING: Recovery testing is a system test that forces the software to fail in various ways and verifies that recovery is performed correctly. Security testing tries to verify that the protection mechanisms built into a system will protect it from improper penetration. Stress testing executes a system in a manner that demands resources in abnormal quantity, frequency, or volume.

- 61. EXAMPLE OF STRESS TESTING: Most systems have requirements for performance under load, e.g.: 100 hits per second; 500 ambulances dispatched per day; all data processed using 70% processor capacity while operating in flight mode with all sensors live. Systems with load requirements must be tested under load. Simulated load scenarios must be designed and supported. This frequently requires significant test code and equipment.

- 62. PERFORMANCE TESTING: Tests the run-time performance of the software within the context of an integrated system.
