The Future of AI (September 2019)

- 1. The Future of AI. Julien Simon, Global Technical Evangelist, AI & Machine Learning, Amazon Web Services. @julsimon. ©2019, Amazon Web Services, Inc. or its affiliates. All rights reserved.

- 2. Does AI have a massive future? Sure! Please insert another coin. Do we (the builders) have a clear idea how to get there? Hmmmm.

- 3. « If you want to know the future, look at the past » Albert Einstein. What’s our collective track record on understanding and implementing disruptive technologies?

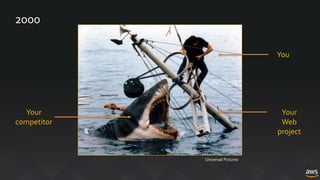

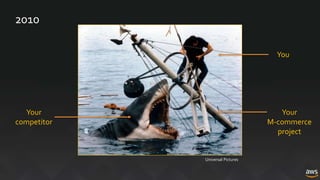

- 8. The terrifying truth about tech projects (image: Universal Pictures)
  - Delusional stakeholders
  - Business pressure
  - Unprepared team
  - Inadequate tools
  - Improvised tactics
  - Random acts of bravery

- 9. « It’s different this time! The AI revolution is here! Blah blah blah » You know who

- 11. « Insanity is doing the same thing over and over again and expecting different results » Whoever said it first

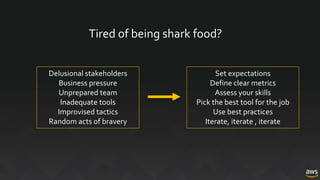

- 12. Tired of being shark food?
  - Delusional stakeholders → Set expectations
  - Business pressure → Define clear metrics
  - Unprepared team → Assess your skills
  - Inadequate tools → Pick the best tool for the job
  - Improvised tactics → Use best practices
  - Random acts of bravery → Iterate, iterate, iterate

- 13. 1 - Set expectations
  - What is the business question you’re trying to answer?
    - One sentence on the whiteboard
    - Must be quantifiable
  - Do you have (enough) data that could help?
  - Involve everyone and come to a common understanding
    - Business, IT, Data Engineering, Data Science, Ops, etc.
  - « We want to see what this technology can do for us »
  - « We have tons of relational data, surely we can do something with it »
  - « I read this cool article about FooBar ML, we ought to try it »

- 14. 2 - Define clear metrics
  - What is the business metric showing success?
  - What’s the baseline (human and IT)?
  - What would be a significant and reasonable improvement?
  - What would be reasonable further improvements?
  - « The confusion matrix for our support ticket classifier has significantly improved ». Huh?
  - « P90 time-to-resolution is now under 24 hours ». Err…
  - « Misclassified emails have gone down 5.3% using the latest model ». So?
  - « The latest survey shows that ‘very happy’ customers are up 9.2% ». Woohoo!
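A metric only means something next to its baseline. The sketch below is one way to check a "P90 time-to-resolution under 24 hours" style of claim: it computes a nearest-rank 90th percentile before and after a change and reports the relative improvement. The sample values, the 24-hour target, and the function names are all hypothetical and chosen purely for illustration.

```python
import math


def percentile(values, pct):
    """Nearest-rank percentile: the smallest value with at least pct% of the data at or below it."""
    if not values:
        raise ValueError("values must not be empty")
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def relative_improvement(baseline, current):
    """Fractional reduction versus the baseline (positive is better when lower is better)."""
    return (baseline - current) / baseline


# Hypothetical time-to-resolution samples in hours (made-up numbers, not real data).
baseline_hours = [4, 6, 9, 12, 15, 20, 26, 30, 41, 55]
current_hours = [3, 4, 6, 8, 10, 12, 15, 18, 22, 30]

TARGET_P90_HOURS = 24  # assumed business target

baseline_p90 = percentile(baseline_hours, 90)
current_p90 = percentile(current_hours, 90)
target_met = current_p90 < TARGET_P90_HOURS

print(f"P90 baseline: {baseline_p90}h, current: {current_p90}h")
print(f"Relative improvement: {relative_improvement(baseline_p90, current_p90):.1%}")
print(f"Target met: {target_met}")
```

Reporting the baseline, the current value, and the target together is what lets a stakeholder judge whether the change matters.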

- 15. 3 - Assess your skills
  - Can you build a data set describing the problem?
  - Do you know how to clean and curate it?
  - Can you write and tweak ML algorithms?
  - Can you manage ML infrastructure?
  - …
  - Or do you only want to call an API and get the job done?
  - 100% DIY or fully managed?

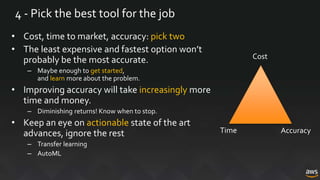

- 16. 4 - Pick the best tool for the job
  - Cost, time to market, accuracy: pick two
  - The least expensive and fastest option probably won’t be the most accurate.
    - Maybe enough to get started, and learn more about the problem.
  - Improving accuracy will take increasingly more time and money.
    - Diminishing returns! Know when to stop.
  - Keep an eye on actionable state-of-the-art advances, ignore the rest
    - Transfer learning
    - AutoML
  - Diagram labels: Cost, Accuracy, Time
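"Diminishing returns, know when to stop" can be turned into an explicit rule. As a minimal sketch, assume each candidate approach has an estimated cost (in arbitrary units) and an accuracy in percentage points. Walk the options from cheapest to most expensive and stop as soon as the next step buys fewer accuracy points per extra cost unit than you are willing to pay for. The options, their numbers, and the threshold are invented for illustration.

```python
def pick_option(options, min_points_per_cost):
    """Walk options from cheapest to most expensive and stop when the next
    step yields fewer accuracy points per extra cost unit than the threshold."""
    ordered = sorted(options, key=lambda o: o["cost"])
    chosen = ordered[0]
    for candidate in ordered[1:]:
        extra_cost = candidate["cost"] - chosen["cost"]
        extra_points = candidate["accuracy_pct"] - chosen["accuracy_pct"]
        # Same cost, or too little gain for the money: stop here.
        if extra_cost <= 0 or extra_points / extra_cost < min_points_per_cost:
            break
        chosen = candidate
    return chosen


# Hypothetical candidates: cost in arbitrary units, accuracy in percentage points.
options = [
    {"name": "option A", "cost": 1, "accuracy_pct": 80},
    {"name": "option B", "cost": 5, "accuracy_pct": 88},
    {"name": "option C", "cost": 10, "accuracy_pct": 91},
    {"name": "option D", "cost": 50, "accuracy_pct": 93},
]

best = pick_option(options, min_points_per_cost=0.5)
print(best["name"])
```

The threshold is a business decision, not a technical one: it states how much an extra accuracy point is worth to you.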

- 17. 5 - Use best practices (image: Universal Pictures)
  - No, things are not different this time.
  - AI / ML is software engineering
    - Dev, test, QA, documentation, Agile, versioning, etc.
    - Involve all teams
  - Sandbox tests are nice, but truth is in production
    - Get there fast, as often as needed
    - CI / CD and automation are required
    - DevOps for ML

- 18. 6 - Iterate, iterate, iterate, aka Boyd’s Law (1960)
  - Start small
  - Try the simple things first
  - Go to production quickly
  - Observe prediction errors
  - Act: fix data set? Add more data? Tweak the algo? Try another algo?
  - Repeat until accuracy gains become irrelevant
  - Move to the next project
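"Observe prediction errors" is easier to act on when the errors are grouped. The sketch below counts misclassifications per (true label, predicted label) pair, so the most confused pair points at what to fix first. It then checks whether the accuracy gain since the previous iteration is still worth another round. The labels, the predictions, the previous accuracy, and the 1-point threshold are hypothetical.

```python
from collections import Counter


def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true label."""
    correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    return correct / len(y_true)


def error_breakdown(y_true, y_pred):
    """Count misclassifications per (true label, predicted label) pair."""
    return Counter((t, p) for t, p in zip(y_true, y_pred) if t != p)


def worth_another_iteration(previous_acc, current_acc, min_gain=0.01):
    """Keep iterating only while the last round gained at least min_gain."""
    return (current_acc - previous_acc) >= min_gain


# Hypothetical support ticket labels and model predictions (made-up data).
y_true = ["billing", "billing", "technical", "technical",
          "account", "account", "billing", "technical"]
y_pred = ["billing", "account", "technical", "technical",
          "account", "billing", "account", "technical"]

current_acc = accuracy(y_true, y_pred)
errors = error_breakdown(y_true, y_pred)
worst_pair, worst_count = errors.most_common(1)[0]

print(f"Accuracy: {current_acc:.3f}")
print(f"Most confused: {worst_pair} ({worst_count} errors)")
print(f"Iterate again: {worth_another_iteration(0.5, current_acc)}")
```

Here the dominant error is billing tickets predicted as account tickets, which suggests looking at how those two classes are labeled or represented before reaching for another algorithm.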

- 19. « Does this work? » Everyone in this room

- 20. 10,000+ active customers – all sizes, all verticals (FINRA, Expedia Group)

- 21. https://ml.aws. Julien Simon, Global Technical Evangelist, AI & Machine Learning, Amazon Web Services. @julsimon, https://medium.com/@julsimon. ©2019, Amazon Web Services, Inc. or its affiliates. All rights reserved.