Time series database by Harshil Ambagade

- 1. Time Series Database - Harshil Ambagade

- 2. Outline ² Time Series DataBase ² Storage Problems ² Solution ² Query Optimization

- 3. Time Series Database A Time-Series Database is a database that contains data for each point in time Examples: Weather Data Stock Prices Bangalore weather chart

- 4. Storage Problem in Databases Ø Storage as an important aspect of Time- series database Goal is to minimize IO by reading minimum of records/files as possible

- 5. Storage Problem in Databases Producing a lot of data is easy Producing a lot of derived data is even easier. Solution: Compress all the things! Quota Limit Reached !!!Instrument all the things

- 6. CPU Performance Scanning a lot of data is easy ... but not necessarily fast. Waiting is not productive. We want faster turnaround. Compression but not at the cost of reading speed.

- 8. Apache Parquet Apache Parquet is a columnar storage format available to any project in Hadoop ecosystem, regardless of the choice of data processing framework, data model or programming language

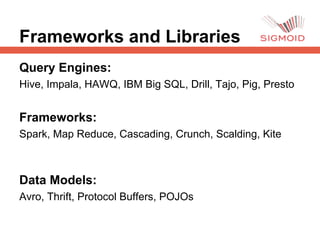

- 9. Frameworks and Libraries Query Engines: Hive, Impala, HAWQ, IBM Big SQL, Drill, Tajo, Pig, Presto Frameworks: Spark, Map Reduce, Cascading, Crunch, Scalding, Kite Data Models: Avro, Thrift, Protocol Buffers, POJOs

- 10. Columnar Storage

- 11. Advantages of Columnar Storage Ø Organizing by column allows for better compression, as data is more homogenous. The space savings are very noticeable at the scale of a Hadoop cluster. Ø I/O will be reduced as we can efficiently scan only a subset of the columns while reading the data. Better compression also reduces the bandwidth required to read the input. Ø As we store data of the same type in each column, we can use encodings better suited for that datatype

- 13. Parquet Terminology u Block (HDFS block): Block in HDFS u Row group: A logical horizontal partitioning of the data into rows. A row group consists of a column chunk for each column in the dataset. u Column chunk: A chunk of the data for a particular column. These live in a particular row group and is guaranteed to be contiguous in the file. u Page: Column chunks are divided up into pages. A page is conceptually an indivisible unit (in terms of compression and encoding). There can be multiple page types which is interleaved in a column chunk. Hierarchically, a file consists of one or more row groups. A row group contains exactly one column chunk per column. Column chunks contain one or more pages

- 14. Encodings v Dictionary Encoding Small 60K set of values v Prefix coding When dictionary encoding does not work v Run Length Encoding Repetitive data v Delta Encodings For sorted datasets where the variation is less important than the absolute value

- 15. Encodings Encoding Techniques borrowed from Google’s Dremel Paper Read More: https://blog.twitter.com/2013/dremel-made-simple- with-parquet

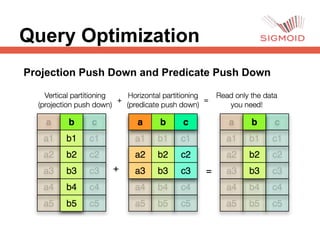

- 16. Query Optimization Projection Push Down and Predicate Push Down

- 17. Query Optimization Choose Parquet Block Size carefully as compared to HDFS Block Size Parquet Block Size should be smaller than equal to HDFS block size to prevent transfer of data between slave nodes.

- 18. Query Optimization Avoid data ingestion processes that produce too many small files Small files may not lead to better compression and thus may lead to significant I/O time

- 19. Query Optimization Better compression with Sorted or almost sorted dataset Dataset with similar values together leads to better compression and better query performance