Transparent Latent GAN

- 2. TL-GAN in one sec: matching feature axes to the latent space, without NN fine-tuning!

- 3. Why do we need TL-GAN? Matching feature axes to the latent space: matching the options (the renderer's) to the feature vector (the director's).

- 4. GAN? Matching the latent vector to the distribution of the given dataset. http://jaejunyoo.blogspot.com/2017/01/generative-adversarial-nets-2.html

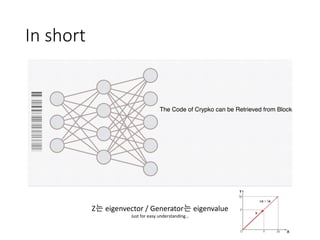

- 5. In short: Z plays the role of an eigenvector and the Generator that of an eigenvalue. A loose analogy, just for easy understanding…

- 6. Generating with condition
  - Style-transfer networks (Pix2Pix, CycleGAN, StarGAN)
    - Require labels and images
    - Hard to control the volume of each condition
  - Conditional generator (conditional GAN)
    - Requires labels for training
    - Needs retraining

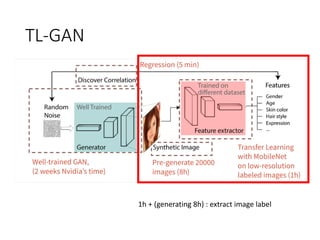

- 8. TL-GAN

- 9. TL-GAN: 1h + (generating 8h): extract image labels

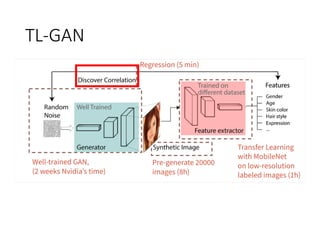

- 10. TL-GAN

- 11. TL-GAN: Discover Correlation 1. Obtain the (2D) coefficients between Z and the labels via multivariate multiple linear regression, then normalize each coefficient vector. https://github.com/SummitKwan/transparent_latent_gan/blob/04439c24ec5b9d2da458bb933c6a7296d6bed9dd/src/tl_gan/script_label_regression.py

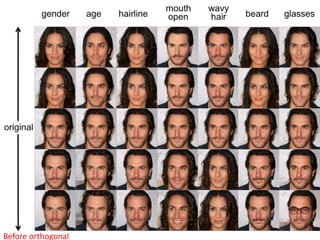

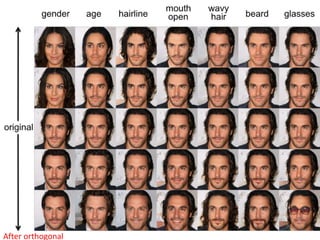
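The regression step on slide 11 can be sketched as follows. This is a minimal illustration, not a reproduction of the linked script: it assumes NumPy least squares, synthetic latent vectors and labels, and a hypothetical helper name `find_feature_axes`. Each column of the coefficient matrix is treated as one feature axis in latent space and scaled to unit length.

```python
import numpy as np


def find_feature_axes(z, y):
    """Fit y ~ z @ W + b by least squares and return unit-norm columns of W.

    z: latent vectors, shape (n_samples, n_latent)
    y: labels or attribute scores, shape (n_samples, n_features)
    Returns an array of shape (n_latent, n_features); column k is the
    latent-space direction associated with feature k.
    """
    # Append a column of ones so the fit includes an intercept term.
    z_aug = np.hstack([z, np.ones((z.shape[0], 1))])
    coef, *_ = np.linalg.lstsq(z_aug, y, rcond=None)
    w = coef[:-1]  # drop the intercept row
    # Normalize each feature axis (column) to unit length.
    return w / np.linalg.norm(w, axis=0, keepdims=True)


# Synthetic stand-in for (latent vector, predicted label) pairs.
rng = np.random.default_rng(0)
n_samples, n_latent, n_features = 2000, 16, 3
z = rng.standard_normal((n_samples, n_latent))
true_w = rng.standard_normal((n_latent, n_features))
y = z @ true_w + 0.01 * rng.standard_normal((n_samples, n_features))

axes = find_feature_axes(z, y)
```

In a real pipeline, `z` would be the latent vectors fed to the generator and `y` the labels extracted from the generated images (slide 9). The synthetic data only serves to make the sketch runnable.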

- 12. TL-GAN: Discover Correlation 2. Adjust the vectors so that they are orthogonal.

- 14. After orthogonalization
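One way to make the feature axes orthogonal is Gram-Schmidt, sketched below. The slides do not say which procedure is used, so this is an assumption. The helper name `orthogonalize_axes` and the random axes are hypothetical. The result depends on column order, because each axis loses its components along the axes before it.

```python
import numpy as np


def orthogonalize_axes(axes):
    """Gram-Schmidt over the columns of `axes`, shape (n_latent, n_features).

    Column i has its projections onto columns 0..i-1 removed and is then
    rescaled to unit length, so the output columns are orthonormal.
    """
    out = np.zeros_like(axes, dtype=float)
    for i in range(axes.shape[1]):
        v = axes[:, i].astype(float)
        for j in range(i):
            # Remove the component of v along the already-fixed axis j.
            v = v - (v @ out[:, j]) * out[:, j]
        out[:, i] = v / np.linalg.norm(v)
    return out


rng = np.random.default_rng(1)
axes = rng.standard_normal((16, 3))   # stand-in for correlated feature axes
ortho = orthogonalize_axes(axes)
gram = ortho.T @ ortho                # close to the identity matrix

# Editing a latent vector along one axis leaves its projection
# onto the other (orthogonal) axes unchanged.
z = rng.standard_normal(16)
z_edit = z + 2.0 * ortho[:, 0]
```

After this step, moving `z` along one feature axis no longer shifts its coordinate along the others. This matters when several features are meant to be controlled independently.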

- 15. How were vectors with different properties matched? The (considerable) effort that goes into attaching labels to unlabeled data.

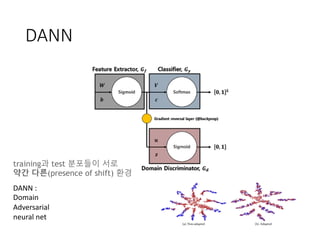

- 16. DANN: Domain-Adversarial Neural Network. For settings where the training and test distributions differ slightly (presence of shift).

- 17. DANN

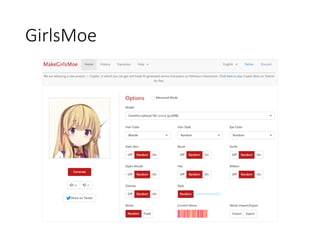

- 18. GirlsMoe

- 19. GirlsMoe: a similar approach to TL-GAN. Label ALL the training data with a pre-trained model (illustration2vec), then train a C-GAN.