Abstract

Executing quantum algorithms on error-corrected logical qubits is a critical step for scalable quantum computing, but the requisite numbers of qubits and physical error rates are demanding for current experimental hardware. Recently, the development of error correcting codes tailored to particular physical noise models has helped relax these requirements. In this work, we propose a qubit encoding and gate protocol for 171Yb neutral atom qubits that converts the dominant physical errors into erasures, that is, errors in known locations. The key idea is to encode qubits in a metastable electronic level, such that gate errors predominantly result in transitions to disjoint subspaces whose populations can be continuously monitored via fluorescence. We estimate that 98% of errors can be converted into erasures. We quantify the benefit of this approach via circuit-level simulations of the surface code, finding a threshold increase from 0.937% to 4.15%. We also observe a larger code distance near the threshold, leading to a faster decrease in the logical error rate for the same number of physical qubits, which is important for near-term implementations. Erasure conversion should benefit any error correcting code, and may also be applied to design new gates and encodings in other qubit platforms.


Introduction

Scalable, universal quantum computers have the potential to outperform classical computers for a range of tasks1. However, the inherent fragility of quantum states and the finite fidelity of physical qubit operations make errors unavoidable in any quantum computation. Quantum error correction2,3,4 allows multiple physical qubits to represent a single logical qubit, such that the correct logical state can be recovered even in the presence of errors on the underlying physical qubits and gate operations.

If the logical qubit operations are implemented in a fault-tolerant manner that prevents the proliferation of correlated errors, the logical error rate can be suppressed arbitrarily so long as the error probability during each operation is below a threshold5,6. Fault-tolerant protocols for error correction and logical qubit manipulation have recently been experimentally demonstrated in several platforms7,8,9,10.

The threshold error rate depends on the choice of error correcting code and the nature of the noise in the physical qubit. While many codes have been studied in the context of the abstract model of depolarizing noise arising from the action of random Pauli operators on the qubit, the realistic error model for a given qubit platform is often more complex, which presents both opportunities and challenges. For example, qubits encoded in cat-codes in superconducting resonators can have strongly biased noise11, leading to significantly higher thresholds12,13 given suitable bias-preserving gate operations for fault-tolerant syndrome extraction14. The realization of biased noise models and bias-preserving gates for Rydberg atom arrays has also been discussed15. On the other hand, many qubits also exhibit some level of leakage outside of the computational space6,16, which requires extra gates in the form of leakage-reducing units, decreasing the threshold17.

Another type of error is an erasure, or detectable leakage, which denotes an error at a known location. Erasures are significantly easier to correct than depolarizing errors in both classical18 and quantum3,19 settings. For example, a four-qubit quantum code is sufficient to correct a single erasure error19, and the surface code threshold under the erasure channel approaches 50% (with perfect syndrome measurements), saturating the bound imposed by the no-cloning theorem20. Erasure errors arise naturally in photonic qubits: if a qubit is encoded in the polarization, or path, of a single photon, then the absence of a photon detection signals an erasure, allowing efficient error correction for quantum communication21 and linear optics quantum computing22,23. However, techniques for detecting the locations of errors in matter-based qubits have not been extensively studied.

In this work, we present an approach to fault-tolerant quantum computing in Rydberg atom arrays24,25,26 based on converting a large fraction of naturally occurring errors into erasures. Our work has two key components. First, we present a physical model of qubits encoded in a particular atomic species, 171Yb27,28,29, that enables erasure conversion without additional gates or ancilla qubits. When qubits are encoded in the hyperfine states of a metastable electronic level, the vast majority of errors (i.e., decays from the Rydberg state that is used to implement two-qubit gates) result in transitions out of the computational subspace into levels whose population can be continuously monitored using cycling transitions that do not disturb the qubit levels (the use of a metastable state to certify the absence of certain errors was also recently proposed for trapped ion qubits30). As a result, the locations of these errors are revealed, converting them into erasures. We estimate that a fraction Re = 0.98 of all errors can be detected this way. Second, we quantify the benefit of erasure conversion at the circuit level, using simulations of the surface code. We find that the predicted level of erasure conversion results in a significantly higher threshold, pth = 4.15%, compared to the case of pure depolarizing errors (pth = 0.937%). We also find a faster reduction in the logical error rate immediately below the threshold.

Results

Erasure conversion in 171Yb qubits

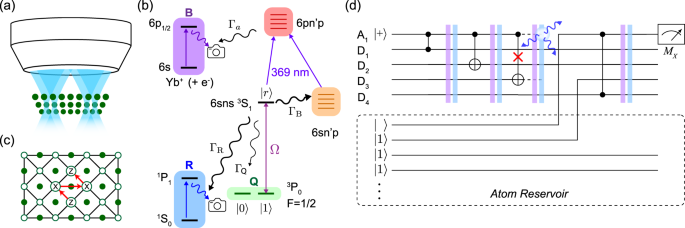

In a neutral atom quantum computer, an array of atomic qubits is trapped, manipulated, and detected using light projected through a microscope objective (Fig. 1a). A variety of atomic species have been explored, but in this work, we consider 171Yb28,29, with the qubit encoded in the F = 1/2 6s6p 3P0 level (Fig. 1b). This level is commonly used as the upper level of optical atomic clocks31, and is metastable with a lifetime of τ ≈ 20 s. We define the qubit states as \(\left|1\right\rangle \equiv \left|{m}_{F}=1/2\right\rangle\) and \(\left|0\right\rangle \equiv \left|{m}_{F}={-}1/2\right\rangle\). State preparation, measurement, and single-qubit rotations can be performed in a manner similar to existing neutral atom qubits; a detailed prescription is presented in Supplementary Note 1.

Fig. 1. a Schematic of a neutral atom quantum computer, with a plane of atoms under a microscope objective used to image fluorescence and project trapping and control fields. b The physical qubits are individual 171Yb atoms. The qubit states are encoded in the metastable 6s6p 3P0 F = 1/2 level (subspace Q), and two-qubit gates are performed via the Rydberg state \(\left|r\right\rangle\), which is accessed through a single-photon transition (λ = 302 nm) with Rabi frequency Ω. The dominant errors during gates are decays from \(\left|r\right\rangle\) with a total rate Γ = ΓB + ΓR + ΓQ. Only a small fraction ΓQ/Γ ≈ 0.05 return to the qubit subspace, while the remaining decays are either blackbody (BBR) transitions to nearby Rydberg states (ΓB/Γ ≈ 0.61) or radiative decay to the ground state 6s2 1S0 (ΓR/Γ ≈ 0.34). At the end of a gate, these events can be detected and converted into erasure errors by detecting fluorescence from ground state atoms (subspace R), or by ionizing any remaining Rydberg population via autoionization and collecting fluorescence on the Yb+ transition (subspace B). c A patch of the XZZX surface code studied in this work, showing data qubits (open circles), ancilla qubits (filled circles), and stabilizer operations, performed in the order indicated by the arrows. d Quantum circuit representing a measurement of a stabilizer on data qubits D1 to D4 using ancilla A1, with interleaved erasure conversion steps. Erasure detection is applied after each gate, and erased atoms are replaced from a reservoir as needed using a movable optical tweezer. Strictly, only the atom that was detected to have left the subspace needs to be replaced, but replacing both protects against the possibility of undetected leakage on the second atom.

To perform two-qubit gates, the state \(\left|1\right\rangle\) is coupled to a Rydberg state \(\left|r\right\rangle\) with Rabi frequency Ω. For concreteness, we consider the 6s75s 3S1 state with \(\left|F,{m}_{F}\right\rangle=\left|3/2,\,3/2\right\rangle\)32. Selective coupling of \(\left|1\right\rangle\) to \(\left|r\right\rangle\) can be achieved by using a circularly polarized laser and a large magnetic field to detune the transition from \(\left|0\right\rangle\) to the mF = 1/2 Rydberg state28.

The resulting three-level system \(\{\left|0\right\rangle,\;\left|1\right\rangle,\;\left|r\right\rangle \}\) is analogous to hyperfine qubits encoded in alkali atoms, for which numerous gate protocols have been proposed and demonstrated24,25,33,34,35,36,37. These gates are based on the Rydberg blockade: the van der Waals interaction Vrr(x) = C6/x⁶ between a pair of Rydberg atoms separated by x prevents their simultaneous excitation to \(\left|r\right\rangle\) if Vrr(x) ≫ Ω. The gate duration is of order tg ≈ 2π/Ω ≫ 2π/Vrr, and during this time, the Rydberg state can decay with probability \(p=\left\langle {P}_{r}\right\rangle {{\Gamma }}{t}_{g}\), where \(\left\langle {P}_{r}\right\rangle \;\approx\; 1/2\) is the average population in \(\left|r\right\rangle\) during the gate, and Γ is the total decay rate from \(\left|r\right\rangle\). This is the fundamental limitation to the fidelity of Rydberg gates26. It can be suppressed by increasing Ω (up to the limit imposed by Vrr), but in practice, Ω is often constrained by the available laser power. We note that the Yb 3S1 series has similar interaction strength28,38 and lifetime32 to Rydberg series in alkali atoms.
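As a rough numerical illustration of \(p=\left\langle P_r\right\rangle \Gamma t_g\), the sketch below takes ⟨Pr⟩ ≈ 1/2 and tg ≈ 2π/Ω as stated above, so that p ≈ πΓ/Ω. The lifetime and Rabi frequency in the usage lines are hypothetical placeholders and are not values from this work. The function name is also illustrative.

```python
import math


def rydberg_decay_probability(gamma, omega, mean_rydberg_population=0.5):
    """Estimate p = <P_r> * Gamma * t_g, taking t_g ~ 2*pi/Omega.

    gamma: total Rydberg decay rate Gamma, in 1/s.
    omega: Rabi frequency Omega, in rad/s.
    The gate time is only an order-of-magnitude estimate of a real protocol.
    """
    gate_time = 2 * math.pi / omega  # t_g ~ 2*pi/Omega
    return mean_rydberg_population * gamma * gate_time


# Hypothetical inputs (not taken from this work): a 100 us Rydberg lifetime
# and Omega = 2*pi x 5 MHz.
gamma = 1 / 100e-6
omega = 2 * math.pi * 5e6
p = rydberg_decay_probability(gamma, omega)
print(f"p = {p:.2e}")  # p = 1.00e-03
```

Doubling Ω halves p in this estimate. This is why faster driving helps until Ω approaches the blockade strength Vrr.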

The state \(\left|r\right\rangle\) can decay via radiative decay to low-lying states (RD), or via blackbody-induced transitions to nearby Rydberg states (BBR)26. Crucially, a large fraction of RD events do not reach the metastable qubit subspace Q, but instead go to the true atomic ground state 6s2 1S0 (with suitable repumping of the other metastable state, 6s6p 3P2). For an n = 75 3S1 Rydberg state, we estimate that 61% of decays are BBR, 34% are RD to the ground state, and only 5% are RD to the qubit subspace (see Supplementary Note 2). Therefore, a total of 95% of all decays leave the qubit in disjoint subspaces, whose population can be detected efficiently, converting these errors into erasures. The remaining 5% can only cause errors in the computational space: there is no possibility for leakage, as the Q subspace has only two sublevels.
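The 95% figure above is a simple sum over the per-decay branching fractions quoted in the Fig. 1b caption. The bookkeeping sketch below treats a single decay in isolation. The dictionary labels are illustrative and follow the subspace names Q, R, and B.

```python
# Per-decay branching fractions for the n = 75 3S1 state (Fig. 1b caption).
BRANCHING = {
    "B": 0.61,  # blackbody transitions to nearby Rydberg states
    "R": 0.34,  # radiative decay to the ground state 6s2 1S0
    "Q": 0.05,  # radiative decay back into the qubit subspace
}


def erasure_fraction(branching):
    """Fraction of single decays that end outside Q and are thus detectable."""
    total = sum(branching.values())
    outside_q = sum(frac for level, frac in branching.items() if level != "Q")
    return outside_q / total


print(f"{erasure_fraction(BRANCHING):.2f}")  # 0.95
```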

Decays to states outside of Q can be detected using fluorescence on closed cycling transitions that do not disturb atoms in Q. Population in the 1S0 level can be efficiently detected using fluorescence on the 1P1 transition at 399 nm39,40 (subspace R in Fig. 1b). This transition is highly cyclic, with a branching ratio of ≈1 × 10⁻⁷ back into Q41. Population remaining in Rydberg states at the end of a gate can be converted into Yb+ ions by autoionization on the 6s → 6p1/2 Yb+ transition at 369 nm38. The resulting slow-moving Yb+ ions can be detected using fluorescence on the same Yb+ transition, as has been previously demonstrated for ensembles of Sr+ ions in ultracold strontium gases42 (subspace B in Fig. 1b). As the ions can be removed after each erasure detection round with a small electric field, this approach also eliminates correlated errors from leakage to long-lived Rydberg states43. We estimate that site-resolved detection of atoms in 1S0 with a fidelity F > 0.999 (ref. 44), and of Yb+ ions with a fidelity F > 0.99, can be achieved in a 10 μs imaging period (see Supplementary Note 3). We note that two nearby ions created in the same cycle will likely not be detected because of mutual repulsion, but this occurs with a very small probability relative to other errors, as discussed below.

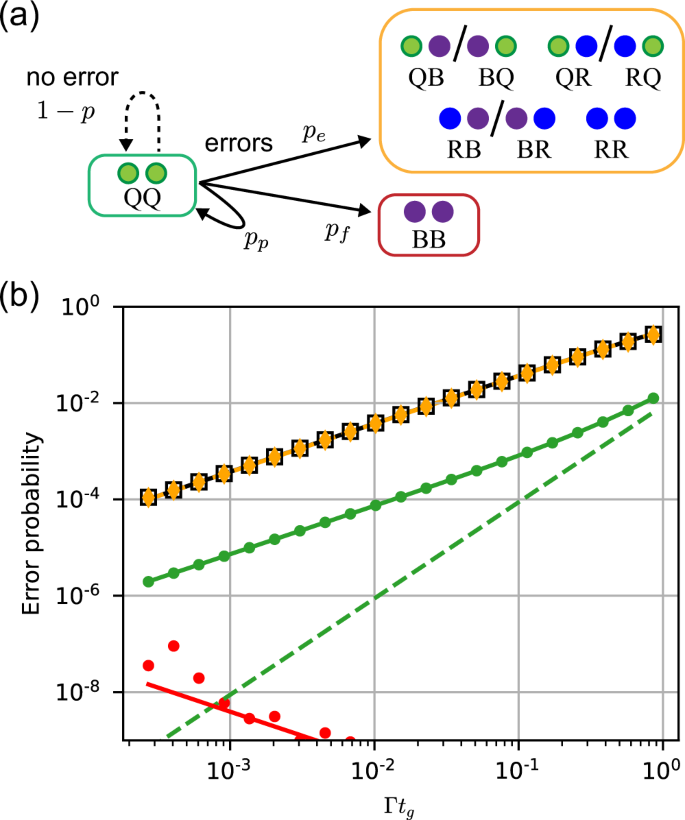

We divide the total spontaneous emission probability, p, into three classes depending on the final state of the atoms (Fig. 2a). The first class comprises the configurations corresponding to detectable erasures (BQ/QB, RQ/QR, RB/BR, and RR), which occur with probability pe. The second is the creation of two ions (BB), which cannot be detected, occurring with probability pf. The third is a return to the qubit subspace (QQ), with probability pp, which results in an error on the qubits within the computational space.
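The three classes can be written as a lookup over the nine possible two-atom final configurations. The sketch below enumerates them and reproduces the grouping of Fig. 2a: seven erasure configurations, one undetectable (BB), and one computational (QQ). The names are illustrative only.

```python
from itertools import product

# Final state of each atom: Q (qubit subspace), R (ground state 1S0),
# B (Rydberg population, later ionized).
SUBSPACES = ("Q", "R", "B")


def classify(atom_1, atom_2):
    """Map a two-atom final configuration to one of the three error classes."""
    if atom_1 == "Q" and atom_2 == "Q":
        return "computational"  # QQ: any error stays inside the qubit space
    if atom_1 == "B" and atom_2 == "B":
        return "undetectable"  # BB: two ions repel and are likely missed
    return "erasure"  # at least one atom is detectably outside Q


for a, b in product(SUBSPACES, repeat=2):
    print(a + b, classify(a, b))
```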

Fig. 2. a Possible atomic states at the end of a two-qubit gate. The configurations grouped in the yellow box are detectable erasure errors; red, undetectable errors; and green, the computational space. b Gate error as a function of the gate duration tg. The average gate infidelity \(1-\mathcal{F}\) (black squares) is dominated by detectable erasures with probability pe (orange points). The infidelity conditioned on not detecting an erasure, \(1-\mathcal{F}_{\bar{e}}\) (green points), is about 50 times smaller. This reflects decays to Q with probability pp, and a no-jump evolution contribution (green dashed line). The probability pf of undetectable leakage (red points) is very small. The lines are analytic estimates of each quantity, while the symbols are numerical simulations. Both assume Vrr/Γ = 10⁶, and Ω is varied along the horizontal axis.

The value of p and its decomposition depend on the specific Rydberg gate protocol. We study a particular example, the symmetric CZ gate from ref. 35, using a combination of analytic and numerical techniques, detailed in Supplementary Note 4 and summarized in Fig. 2b. The probability of a detectable erasure, pe, is almost identical to the average gate infidelity \(1-\mathcal{F}\), indicating that the vast majority of errors are of this type. We infer pp from the fidelity conditioned on not detecting an erasure, \(\mathcal{F}_{\bar{e}}\), as \({p}_{p}=1-\mathcal{F}_{\bar{e}}\), and find pp ≈ pe/50. Non-detectable leakage (BB) is strongly suppressed by the Rydberg blockade, and we find pf < 10⁻⁴ × pe over the relevant parameter range. Since decays occur preferentially from \(\left|1\right\rangle\), continuously monitoring for erasures introduces an additional probability of gate error from non-Hermitian no-jump evolution45, proportional to \({p}_{e}^{2}\), which is insignificant for pe < 0.1 (see Methods).

We conclude that this approach effectively converts a fraction Re = pe/(pe + pp) = 0.98 of all spontaneous decay errors into erasures. This is a larger fraction than would be naïvely predicted from the branching ratio into the qubit subspace, 1 − ΓQ/Γ = 0.95, because decays to Q in the middle of the gate result in re-excitation to \(\left|r\right\rangle\) with a high probability, triggering an erasure detection. This value is in agreement with an analytic estimate (Supplementary Note 4).
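Because pp ≈ pe/50, the conversion ratio Re = pe/(pe + pp) = 50/51 ≈ 0.98 does not depend on the absolute error probability. The short check below illustrates this. The value chosen for pe is an illustrative assumption.

```python
def erasure_conversion_ratio(p_e, p_p):
    """Fraction of decay errors converted to erasures, R_e = p_e / (p_e + p_p)."""
    return p_e / (p_e + p_p)


p_e = 1e-2  # illustrative erasure probability (hypothetical value)
p_p = p_e / 50  # p_p ~ p_e / 50, as found from the simulations in Fig. 2b
print(f"{erasure_conversion_ratio(p_e, p_p):.3f}")  # 0.980
```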

Surface code simulations

We now study the performance of an error correcting code with erasure conversion using circuit-level simulations. We consider the planar XZZX surface code46, which has been studied in the context of biased noise and performs identically to the standard surface code for unbiased noise. We perform Monte Carlo simulations of errors in a d × d array of data qubits, which realizes a code with distance d, and estimate the logical failure rate after d rounds of measurements.

In the simulation, each two-qubit gate experiences either a Pauli error with probability pp = p(1 − Re), or an erasure with probability pe = pRe. The Pauli errors are drawn uniformly at random from the set \(\{I,X,Y,Z\}^{\otimes 2}\setminus\{I\otimes I\}\), each with probability pp/15. Following a two-qubit gate in which an erasure error occurs, both atoms are placed in the mixed state I/2, which is modeled in the simulations by applying a Pauli error chosen uniformly at random from \(\{I,X,Y,Z\}^{\otimes 2}\) (ref. 47). In the experiment, the replaced atoms can be in any state, since the subsequent stabilizer measurements and decoding are equivalent to a depolarizing error. We do not consider single-qubit gate errors or ancilla initialization or measurement errors at this stage.
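The per-gate error model described above can be sampled in a few lines. The following is a minimal sketch under the stated model only. The function and constant names are hypothetical, and this is not the simulation code used in this work. An erased gate draws from all 16 two-qubit Paulis, including I⊗I, to represent the mixed state. A non-erased error draws from the 15 non-identity Paulis.

```python
import random
from itertools import product

PAULIS = ("I", "X", "Y", "Z")
# All two-qubit Paulis except I (x) I: 15 elements, each drawn with prob. p_p/15.
NONTRIVIAL_PAIRS = [a + b for a, b in product(PAULIS, repeat=2) if a + b != "II"]


def sample_gate_error(p, r_e, rng=random):
    """Sample the error after one two-qubit gate.

    Returns (erased, pauli). With probability p*r_e the gate is erased and both
    qubits get a uniformly random Pauli (modeling the mixed state I/2); with
    probability p*(1 - r_e) a uniformly random non-identity two-qubit Pauli is
    applied; otherwise there is no error.
    """
    u = rng.random()
    if u < p * r_e:
        return True, rng.choice(PAULIS) + rng.choice(PAULIS)
    if u < p:
        return False, rng.choice(NONTRIVIAL_PAIRS)
    return False, "II"


rng = random.Random(1)
samples = [sample_gate_error(0.02, 0.98, rng) for _ in range(100_000)]
# The empirical erasure rate should be near p * r_e = 0.0196.
print(sum(erased for erased, _ in samples) / len(samples))
```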

The syndrome measurement results, together with the locations of the erasure errors, are decoded with a weighted Union-Find (UF) decoder48,49 to determine whether the error is correctable or leads to a logical failure. The UF decoder is optimal for pure erasure errors50, performs comparably to conventional matching decoders for Pauli errors, and is considerably faster48,49.
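For intuition, the sketch below shows only the disjoint-set bookkeeping at the core of a UF decoder: union by size, path halving, and defect parity per cluster. It omits cluster growth, edge weights, and the peeling step, and it is not the decoder implementation used for the results here. The class name is hypothetical. For erasures, the known erased edges can simply be merged at the start. A cluster with even defect parity ("neutral") can then be corrected internally.

```python
class ClusterForest:
    """Disjoint-set forest that tracks the syndrome-defect parity of each cluster."""

    def __init__(self, n_nodes, defects):
        self.parent = list(range(n_nodes))
        self.size = [1] * n_nodes
        self.parity = [0] * n_nodes
        for v in defects:
            self.parity[v] ^= 1  # each syndrome defect flips its node's parity

    def find(self, v):
        # Path halving keeps the trees shallow.
        while self.parent[v] != v:
            self.parent[v] = self.parent[self.parent[v]]
            v = self.parent[v]
        return v

    def union(self, u, v):
        """Merge the clusters containing u and v; return the new root."""
        ru, rv = self.find(u), self.find(v)
        if ru == rv:
            return ru
        if self.size[ru] < self.size[rv]:
            ru, rv = rv, ru  # attach the smaller tree under the larger one
        self.parent[rv] = ru
        self.size[ru] += self.size[rv]
        self.parity[ru] ^= self.parity[rv]
        return ru

    def is_neutral(self, v):
        """True if v's cluster holds an even number of defects."""
        return self.parity[self.find(v)] == 0


# Usage: defects on nodes 0 and 2, with erased edges (0, 1) and (1, 2).
forest = ClusterForest(5, defects=[0, 2])
for u, v in [(0, 1), (1, 2)]:
    forest.union(u, v)
print(forest.is_neutral(0))  # True
```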

In Fig. 3a, we present the simulation results for Re = 0 and Re = 0.98. The former corresponds to pure Pauli errors, while the latter corresponds to the level of erasure conversion anticipated in 171Yb. The logical error rates are significantly reduced in the latter case. The fault-tolerance threshold, defined as the physical error rate below which the logical error rate decreases with increasing d, increases by a factor of 4.4, from pth = 0.937% to pth = 4.15%. In Fig. 3b, we plot the threshold as a function of Re; it reaches 5.13% when Re = 1. The smooth increase of the threshold with Re is qualitatively consistent with previous studies of surface code performance with mixed erasure and Pauli errors20,48,51.
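The thresholds here are read off from the crossing of the d = 11 and d = 15 curves. One simple way to locate such a crossing from sampled data is linear interpolation of log(pL,15/pL,11) against log p, sketched below. This is an assumed procedure, not necessarily the fitting method used for Fig. 3. The demonstration uses synthetic curves that cross at p = 0.04 and does not use the simulation data.

```python
import math


def crossing_point(ps, pl_small_d, pl_large_d):
    """Estimate where two logical-error-rate curves cross.

    ps: increasing physical error rates; pl_small_d / pl_large_d: logical error
    rates at the smaller and larger code distance. Returns the first crossing
    found by linear interpolation of log(pL_large / pL_small) against log(p),
    or None if the sampled curves do not cross.
    """
    diffs = [math.log(big / small) for small, big in zip(pl_small_d, pl_large_d)]
    for i, diff in enumerate(diffs):
        if diff == 0:
            return ps[i]
        if i > 0 and diffs[i - 1] * diff < 0:
            x0, x1 = math.log(ps[i - 1]), math.log(ps[i])
            d0 = diffs[i - 1]
            x = x0 - d0 * (x1 - x0) / (diff - d0)  # root of the linear interpolant
            return math.exp(x)
    return None


def synthetic(p, d):
    """Synthetic curve pL = 0.1 * (p / 0.04)^((d + 1) / 2), crossing at p = 0.04."""
    return 0.1 * (p / 0.04) ** ((d + 1) / 2)


ps = [0.02, 0.03, 0.05, 0.06]
threshold = crossing_point(ps, [synthetic(p, 11) for p in ps], [synthetic(p, 15) for p in ps])
print(threshold)
```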

Fig. 3. a Scaling of the logical error rate with the physical qubit error rate p in the case of pure computational errors (Re = 0, open circles, dashed lines) and in the case of a high conversion to erasure errors, Re = 0.98 (filled circles, solid lines). The error thresholds are pth = 0.937(4)% and pth = 4.15(2)%, respectively, determined from the crossing of d = 11 and d = 15. The error bars indicate the 95% confidence interval in pL, estimated from the number of trials in the Monte Carlo simulation. b pth as a function of Re (the green star highlights Re = 0.98).

In addition to increasing the threshold, the high fraction of erasure errors also results in a faster decrease in the logical error rate below the threshold. Below the threshold, pL can be approximated by pL ≈ Ap^ν, where the exponent ν is the number of errors needed to cause a logical failure. A larger value of ν results in a faster suppression of logical errors below the threshold, and better code performance for a fixed number of qubits (i.e., fixed d).
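The exponent ν in pL ≈ Ap^ν is the slope of log pL against log p. The sketch below is an unweighted least-squares fit on a log-log scale. Unlike the chi-squared analysis behind Fig. 4c, it ignores statistical error bars. The demonstration uses synthetic data with ν = 4.35, not the simulation output.

```python
import math


def fit_exponent(ps, pls):
    """Least-squares fit of log pL = log A + nu * log p; returns (A, nu)."""
    xs = [math.log(p) for p in ps]
    ys = [math.log(q) for q in pls]
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    nu = sxy / sxx  # slope on the log-log scale
    a = math.exp(my - nu * mx)  # prefactor from the intercept
    return a, nu


# Synthetic data following pL = 2 * p^4.35 exactly.
ps_fit = [0.005, 0.01, 0.02, 0.03]
pls_fit = [2.0 * p ** 4.35 for p in ps_fit]
a_fit, nu_fit = fit_exponent(ps_fit, pls_fit)
print(a_fit, nu_fit)
```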

In Fig. 4a, we plot the logical error rate as a function of the physical error rate for a d = 5 code for several values of Re. When normalized by the threshold error rates (Fig. 4b), it is evident that the exponent (slope) ν increases with Re. The fitted exponents (Fig. 4c) smoothly increase from the expected value for pure Pauli errors, νp = (d + 1)/2 = 3, to the expected value for pure erasure errors, νe = d = 5 (in fact, the exponent slightly exceeds this value in the sampled region, which is close to the threshold). For Re = 0.98, ν = 4.35(2). Achieving this exponent with pure Pauli errors would require d = 7, using nearly twice as many qubits as the d = 5 code in Fig. 4. For very small p, the exponent will eventually return to νp, as the lowest-weight failure (νp Pauli errors) becomes dominant. The onset of this behavior is barely visible for d = 5 in Fig. 3a.
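A quick qubit count supports the "nearly twice as many qubits" comparison. The count assumes a patch with d × d data qubits and d² − 1 ancillas, for 2d² − 1 qubits in total. This layout is an assumption based on the d × d data-qubit array described above. The same formula gives the qubit numbers behind the d = 11 and five d = 5 patches mentioned in the Discussion.

```python
def patch_qubits(d):
    """Physical qubits for a d x d data-qubit patch with d*d - 1 ancillas (assumed layout)."""
    return 2 * d * d - 1


for d in (5, 7, 11):
    print(d, patch_qubits(d))
# 5 49
# 7 97
# 11 241
```

Under this assumption, d = 7 needs 97 qubits versus 49 for d = 5, a ratio of about 1.98.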

Fig. 4. a pL vs p at a fixed code distance d = 5 for various values of Re [colors correspond to the diamond points in panel (c)]. b The physical and logical error rates rescaled by their values at the threshold. c Logical error exponent ν, extracted from the slope of the curves in (b). The dashed lines show the expected asymptotic exponents for pure computational errors (νp = 3) and pure erasure errors (νe = 5). The error bars indicate the 95% confidence interval in the exponent ν, estimated from a chi-squared analysis of the fit.

Discussion

There are several points worth discussing. First, we note that the threshold error rate for Re = 0.98 corresponds to a two-qubit gate fidelity of 95.9%, which is exceeded by the current state of the art. Recently, entangled states with fidelity \(\mathcal{F}=97.4\%\) were demonstrated for hyperfine qubits in Rb35, and \(\mathcal{F}=99.1\%\) has been demonstrated for ground-Rydberg qubits in 88Sr52. With reasonable technical improvements, a reduction of the error rate by at least one order of magnitude has been projected37, which would place neutral atom qubits far below the threshold, into a regime of genuine fault-tolerant operation. Arrays of hundreds of neutral atom qubits have been demonstrated53,54, which is a sufficient number to realize a single surface code logical qubit with d = 11, or five logical qubits with d = 5. While we analyze the surface code in this work because of the availability of simple, accurate decoders, we expect erasure conversion to realize a similar benefit for any code. In combination with the flexible connectivity of neutral atom arrays enabled by dynamic rearrangement55,56,57, this opens the door to implementing a wide range of efficient codes58.

Second, in order to compare erasure conversion to previous proposals for achieving fault-tolerant Rydberg gates by repumping leaked Rydberg population in a bias-preserving manner15, we have also simulated the XZZX surface code with biased noise and bias-preserving gates. For noise with bias η (i.e., if the probability of X or Y errors is η times smaller than that of Z errors), we find a threshold of pth = 2.27% for the XZZX surface code when η = 100, which increases to pth = 3.69% when η → ∞. For comparison, the threshold with erasure conversion exceeds that for infinite bias if Re ≥ 0.96, with the additional benefit of not requiring bias-preserving gates.

Third, we consider the role of imperfect erasure detection, or other sources of atom loss. Since two-qubit blockade gates have well-defined behavior with regard to lost atoms (i.e., the lost atoms act as if they are in \(\left|0\right\rangle\)), these events can be handled fault-tolerantly with no extra ancillas and only one extra gate per stabilizer measurement, using the "quick circuit" for leakage reduction introduced in ref. 17. In that work it was shown that the impact on the threshold was very slight if the loss probability was small compared to other errors17, and the same behavior can be expected in the scheme considered here. We leave a detailed analysis to future work.

Fourth, our analysis has focused on two-qubit gate errors, since they are dominant in neutral atom arrays and are also the most problematic for fault-tolerant error correction59. However, with very efficient erasure conversion for two-qubit gate errors, the effect of single-qubit errors, initialization and measurement errors, and atom loss may become more significant. In Supplementary Note 6, we present additional simulations showing that the inclusion of initialization, measurement, and single-qubit gate errors with reasonable values does not significantly affect the threshold two-qubit gate error. We also note that erasure conversion can be effective for other types of spontaneous errors, including Raman scattering during single-qubit gates, the finite lifetime of the 3P0 level, and certain measurement errors.

Lastly, we highlight that erasure conversion can lead to more resource-efficient, fault-tolerant subroutines for universal computation, such as magic-state distillation60, which uses several copies of faulty resource states to produce fewer copies with a lower error rate. This is expected to consume large portions of the quantum hardware59,61, but the overhead can be reduced by improving the fidelity of the input raw magic states. By rejecting resource states with detected erasures, the error rate can be reduced from O(p)62,63,64,65 to O((1 − Re)p). Therefore, 98% erasure conversion can give over an order of magnitude reduction in the infidelity of raw magic states, resulting in a large reduction in overheads for magic state distillation.
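The O((1 − Re)p) scaling follows from a toy model. A raw magic state suffers an error with total probability p, and a fraction Re of those errors is flagged as an erasure. Flagged states are discarded, and the accepted error is renormalized by the acceptance probability. This single-location model is an illustrative assumption, since real preparation circuits contain several error locations.

```python
def postselected_error(p, r_e):
    """Error of an accepted raw magic state after rejecting detected erasures.

    Toy model: one error location with total error probability p, of which a
    fraction r_e is flagged as an erasure. Flagged preparations are discarded,
    so the accepted error is p*(1 - r_e) renormalized by the acceptance rate.
    """
    acceptance = 1 - p * r_e
    return p * (1 - r_e) / acceptance


p = 0.01
improvement = p / postselected_error(p, r_e=0.98)
print(f"infidelity reduced by a factor of about {improvement:.0f}")
```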

While this work provides a novel motivation to pursue qubits based on Yb and other alkaline earth-like atoms, these atoms have also attracted recent interest thanks to other potential advantages28,29,40,52,66,67,68,69. In particular, long qubit coherence times28,29,69, narrow-line laser cooling, and rapid single-photon Rydberg excitation from the metastable 3P0 level offer the potential for improved entangling gate fidelities and a suppression of technical noise. We note that the highest reported Rydberg entanglement fidelity was achieved using the analogous metastable state in 88Sr52. The use of a metastable electronic level offers other benefits, including straightforward mid-circuit measurement and array reloading capabilities, as demonstrated recently in the context of trapped ions30,70,71.

In conclusion, we have proposed an approach for efficiently implementing fault-tolerant quantum logic operations in neutral atom arrays using 171Yb. By leveraging the unique level structure of this alkaline earth atom, we convert the dominant source of error for two-qubit gates, spontaneous decay from the Rydberg state, into directly detected erasure errors. We find a 4.4-fold increase in the circuit-level threshold for a surface code, bringing the threshold within the range of current experimental gate fidelities in neutral atom arrays. Combined with a steeper scaling of the logical error rate below the threshold, this approach is promising for demonstrating fault-tolerant logical operations with near-term experimental hardware. We anticipate that erasure conversion will also be applicable to other codes and other physical qubit platforms30.

Methods

Error correcting code simulations

In this section, we provide additional details about the simulations used to generate the results shown in Figs. 3 and 4. After each two-qubit gate, we apply each error from the set \(\{I,X,Y,Z\}^{\otimes 2}\setminus\{I\otimes I\}\) with probability pp/15, and an erasure error with probability pe, with pe/(pp + pe) = Re. Immediately after an erasure error on a two-qubit gate, both qubits are re-initialized in a completely mixed state, which is modeled using the error channel (IρI + XρX + YρY + ZρZ)/4 on each qubit. We choose this model for simplicity, but in the experiment, better performance may be realized using an ancilla polarized into \(\left|1\right\rangle\), as Rydberg decays only happen from this initial state. In addition, we note that the majority of errors result in only one of the atoms leaving Q (Supplementary Note 4), but the other atom has an error anyway and should still be considered as part of the erasure. We assume the existence of native CZ and CNOT gates, so a stabilizer cycle can be completed without single-qubit gates. We also neglect idle errors, since these are typically insignificant for atomic qubits.

Ancilla initialization (measurement) is handled in a similar way, with a Pauli error following (preceding) a perfect operation with probability pm (pm = 0 in Figs. 3 and 4; results for pm > 0 are discussed in Supplementary Note 6).

We simulate the surface code with open boundary conditions. Each syndrome extraction round proceeds in six steps: ancilla state preparation, four two-qubit gates applied in the order shown in Fig. 1c, and finally a measurement step. For a d × d lattice, we perform d rounds of syndrome measurements, followed by one final round of perfect measurements. The decoder graph is constructed by connecting all space-time points generated by errors in the circuit applied as discussed above. Each of these edges is then weighted by \(\ln(p')\) truncated to the nearest integer, where \(p'\) is the largest single error probability that gives rise to the edge. After sampling an error, the weighted UF decoder is applied to determine error patterns consistent with the syndromes. We do not apply the peeling decoder, but instead account for logical errors by keeping track of the parity of the number of defects crossing the logical boundaries. Our implementation of the decoder was separately benchmarked against the results in ref. 49 and yields the same thresholds.
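The integer edge weights can be sketched as below. The text specifies "ln(p′) truncated to the nearest integer". Here we assume that the magnitude |ln p′| = −ln p′ is used, so that weights are non-negative and rarer mechanisms give heavier edges. We also assume that "nearest integer" means rounding. Both are assumptions, and the helper name is hypothetical.

```python
import math


def edge_weight(p_prime):
    """Integer decoder-graph edge weight from the largest single-error probability.

    Assumption: the weight is the magnitude of ln(p') rounded to the nearest
    integer. Python's round() sends exact .5 ties to the nearest even integer.
    """
    if not 0 < p_prime < 1:
        raise ValueError("p' must be a probability strictly between 0 and 1")
    return round(-math.log(p_prime))


for p_prime in (1e-1, 1e-2, 1e-3):
    print(p_prime, edge_weight(p_prime))
```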

For the comparison to the threshold of the XZZX code when the noise is biased, we apply each error Q ∈ \(\{I,X,Y,Z\}^{\otimes 2}\setminus\{I\otimes I\}\) after each two-qubit gate with probability pQ. The first (second) operator in the tensor product is applied to the control (target) qubit. In the case of CNOT, we assume bias-preserving gates, and thus set pZI = p, pIZ = pZZ = p/2, with the probability of each of the other non-pure-dephasing Pauli errors set to p/η, following ref. 13. For the CZ gate, we use pZI = p, pIZ = p, with the probability of each of the other non-pure-dephasing Pauli errors set to p/η. For the threshold quoted in the main text, no single-qubit preparation and measurement noise is applied, to facilitate direct comparison to the threshold with erasure conversion in Fig. 3. For the same reason, in the main text we quote the threshold in terms of the total two-qubit gate infidelity ~2p for large η.
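The biased-noise channels can be tabulated explicitly, as in the sketch below. It assigns p/η to each of the 12 non-dephasing Paulis. For CZ, the text does not specify pZZ, so it is set to 0 here as an assumption. With these choices, both gates have a total infidelity of 2p + 12p/η, which approaches the ~2p quoted in the main text for large η. The function name is hypothetical.

```python
from itertools import product

PAULIS = "IXYZ"
DEPHASING = {"ZI", "IZ", "ZZ"}  # pure-dephasing two-qubit Paulis


def biased_gate_noise(p, eta, gate):
    """Two-qubit Pauli error probabilities for the biased-noise comparison.

    The first letter acts on the control, the second on the target. Each
    non-dephasing Pauli gets p/eta. For CZ, p_ZZ is set to 0 (an assumption;
    the text does not specify it).
    """
    if gate == "CNOT":
        dephasing = {"ZI": p, "IZ": p / 2, "ZZ": p / 2}
    elif gate == "CZ":
        dephasing = {"ZI": p, "IZ": p, "ZZ": 0.0}
    else:
        raise ValueError(f"unknown gate: {gate}")
    probs = {}
    for a, b in product(PAULIS, repeat=2):
        pauli = a + b
        if pauli == "II":
            continue
        probs[pauli] = dephasing[pauli] if pauli in DEPHASING else p / eta
    return probs


noise = biased_gate_noise(0.01, 100, "CNOT")
print(sum(noise.values()))  # total infidelity 2p + 12p/eta
```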

Lastly, we note that the no-jump evolution discussed in Fig. 2b is described by the Kraus operator \({K}_{nj}=I+(\sqrt{(1-p)}-1)\left|1\right\rangle \left\langle 1\right|\approx I-(p/2)\left|1\right\rangle \left\langle 1\right|\) (for small p), where p is the decay probability. The Pauli-twirl approximation (PTA) reduces any error channel to a Pauli error channel by removing off-diagonal terms in the process matrix. Under the PTA, the non-Hermitian operator Knj effectively applies a Pauli-Z error with probability ∝ p². This error model is similar to the amplitude damping channel, and previous work has found that the performance of the surface code with the PTA is identical to that with the exact amplitude damping channel72.
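The p² scaling can be checked by writing Knj = diag(1, s) with s = √(1 − p) as aI + bZ, where a = (1 + s)/2 and b = (1 − s)/2. Twirling keeps a² on the identity and b² on Z. In the sketch below, we normalize by a² + b² = Tr(K†K)/2, which is one simple choice and our assumption. We also use the identity 1 − s = p/(1 + s) to avoid cancellation. The Z-error probability then approaches p²/16 for small p.

```python
import math


def no_jump_z_error(p):
    """Pauli-twirled Z-error probability of the no-jump Kraus operator.

    K = diag(1, sqrt(1 - p)) = a*I + b*Z with a = (1 + s)/2, b = (1 - s)/2.
    Twirling removes the cross terms, leaving weights a^2 (I) and b^2 (Z).
    """
    s = math.sqrt(1 - p)
    a = (1 + s) / 2
    b = p / (2 * (1 + s))  # equals (1 - s)/2, computed without cancellation
    return b * b / (a * a + b * b)


for p in (1e-2, 1e-3, 1e-4):
    print(p, no_jump_z_error(p) / p**2)  # approaches 1/16 as p -> 0
```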

Data availability

The Monte Carlo simulation data of the error correcting code performance generated in this study have been deposited in the Harvard Dataverse database under accession code https://doi.org/10.7910/DVN/H9LV4H.

References

Montanaro, A. Quantum algorithms: an overview. npj Quantum Inf. 2, 15023 (2016).

Shor, P. W. Scheme for reducing decoherence in quantum computer memory. Phys. Rev. A 52, R2493 (1995).

Gottesman, D. Stabilizer codes and quantum error correction. Preprint at http://arxiv.org/abs/quant-ph/9705052 (1997).

Knill, E. & Laflamme, R. Theory of quantum error-correcting codes. Phys. Rev. A 55, 900 (1997).

Aharonov, D. & Ben-Or, M. Fault-tolerant quantum computation with constant error rate. SIAM J. Computing 38, 1207 (2008).

Knill, E., Laflamme, R. & Zurek, W. Threshold accuracy for quantum computation. Preprint at http://arxiv.org/abs/quant-ph/9610011 (1996).

Egan, L. et al. Fault-tolerant control of an error-corrected qubit. Nature 598, 281–286 (2021).

Ryan-Anderson, C. et al. Realization of real-time fault-tolerant quantum error correction. Phys. Rev. X 11, 041058 (2021).

Abobeih, M. H. et al. Fault-tolerant operation of a logical qubit in a diamond quantum processor. Nature 606, 884–889 (2022).

Postler, L. et al. Demonstration of fault-tolerant universal quantum gate operations. Nature 605, 675–680 (2022).

Grimm, A. et al. Stabilization and operation of a Kerr-cat qubit. Nature 584, 205 (2020).

Aliferis, P. & Preskill, J. Fault-tolerant quantum computation against biased noise. Phys. Rev. A 78, 052331 (2008).

Darmawan, A. S., Brown, B. J., Grimsmo, A. L., Tuckett, D. K. & Puri, S. Practical quantum error correction with the XZZX code and Kerr-cat qubits. PRX Quantum 2, 030345 (2021).

Puri, S., Flammia, S. T. & Girvin, S. M. Bias-preserving gates with stabilized cat qubits. Sci. Adv. 6, eaay5901 (2020).

Cong, I. et al. Hardware-efficient, fault-tolerant quantum computation with Rydberg atoms. Phys. Rev. X 12, 021049 (2022).

Preskill, J. Fault-tolerant quantum computation. Preprint at http://arxiv.org/abs/quant-ph/9712048 (1997).

Suchara, M., Cross, A. W. & Gambetta, J. M. Leakage suppression in the toric code. Quantum Inf. Computation 15, 997 (2015).

Cover, T. M. & Thomas, J. A. Elements of Information Theory, 2nd edn. (Wiley, Hoboken, NJ, 2006).

Grassl, M., Beth, T. & Pellizzari, T. Codes for the quantum erasure channel. Phys. Rev. A 56, 33 (1997).

Stace, T. M., Barrett, S. D. & Doherty, A. C. Thresholds for topological codes in the presence of loss. Phys. Rev. Lett. 102, 200501 (2009).

Muralidharan, S., Kim, J., Lütkenhaus, N., Lukin, M. D. & Jiang, L. Ultra-fast and fault-tolerant quantum communication across long distances. Phys. Rev. Lett. 112, 250501 (2014).

Knill, E., Laflamme, R. & Milburn, G. A scheme for efficient quantum computation with linear optics. Nature 409, 7 (2001).

Kok, P. et al. Linear optical quantum computing with photonic qubits. Rev. Modern Phys. 79, 135 (2007).

Jaksch, D. et al. Fast quantum gates for neutral atoms. Phys. Rev. Lett. 85, 2208 (2000).

Lukin, M. Dipole blockade and quantum information processing in mesoscopic atomic ensembles. Phys. Rev. Lett. 87, 037901 (2001).

Saffman, M., Walker, T. G. & Mølmer, K. Quantum information with Rydberg atoms. Rev. Modern Phys. 82, 2313 (2010).

Noguchi, A., Eto, Y., Ueda, M. & Kozuma, M. Quantum-state tomography of a single nuclear spin qubit of an optically manipulated ytterbium atom. Phys. Rev. A 84, 030301 (2011).

Ma, S. et al. Universal gate operations on nuclear spin qubits in an optical tweezer array of 171Yb atoms. Phys. Rev. X 12, 021028 (2022).

Jenkins, A., Lis, J. W., Senoo, A., McGrew, W. F. & Kaufman, A. M. Ytterbium nuclear-spin qubits in an optical tweezer array. Phys. Rev. X 12, 021027 (2022).

Campbell, W. C. Certified quantum gates. Phys. Rev. A 102, 022426 (2020).

Ludlow, A. D., Boyd, M. M., Ye, J., Peik, E. & Schmidt, P. O. Optical atomic clocks. Rev. Modern Phys. 87, 637 (2015).

Wilson, J. T. et al. Trapping alkaline earth Rydberg atoms optical tweezer arrays. Phys. Rev. Lett. 128, 033201 (2022).

Isenhower, L. et al. Demonstration of a neutral atom controlled-NOT quantum gate. Phys. Rev. Lett. 104, 010503 (2010).

Wilk, T. et al. Entanglement of two individual neutral atoms using Rydberg blockade. Phys. Rev. Lett. 104, 010502 (2010).

Levine, H. et al. Parallel implementation of high-fidelity multiqubit gates with neutral atoms. Phys. Rev. Lett. 123, 170503 (2019).

Mitra, A. et al. Robust Mølmer-Sørensen gate for neutral atoms using rapid adiabatic Rydberg dressing. Phys. Rev. A 101, 030301 (2020).

Saffman, M., Beterov, I. I., Dalal, A., Páez, E. J. & Sanders, B. C. Symmetric Rydberg controlled-Z gates with adiabatic pulses. Phys. Rev. A 101, 062309 (2020).

Burgers, A. P. et al. Controlling Rydberg excitations using ion-core transitions in alkaline-earth atom-tweezer arrays. PRX Quantum 3, 020326 (2022).

Yamamoto, R., Kobayashi, J., Kuno, T., Kato, K. & Takahashi, Y. An ytterbium quantum gas microscope with narrow-line laser cooling. New J. Phys. 18, 23016 (2016).

Saskin, S., Wilson, J. T., Grinkemeyer, B. & Thompson, J. D. Narrow-line cooling and imaging of ytterbium atoms in an optical tweezer array. Phys. Rev. Lett. 122, 143002 (2019).

Loftus, T., Bochinski, J. R., Shivitz, R. & Mossberg, T. W. Power-dependent loss from an ytterbium magneto-optic trap. Phys. Rev. A 61, 051401 (2000).

McQuillen, P., Zhang, X., Strickler, T., Dunning, F. B. & Killian, T. C. Imaging the evolution of an ultracold strontium Rydberg gas. Phys. Rev. A 87, 013407 (2013).

Goldschmidt, E. A. et al. Anomalous broadening in driven dissipative Rydberg systems. Phys. Rev. Lett. 116, 113001 (2016).

Bergschneider, A. et al. Spin-resolved single-atom imaging of 6Li in free space. Phys. Rev. A 97, 063613 (2018).

Plenio, M. B. & Knight, P. L. The quantum-jump approach to dissipative dynamics in quantum optics. Rev. Modern Phys. 70, 101 (1998).

Bonilla Ataides, J. P., Tuckett, D. K., Bartlett, S. D., Flammia, S. T. & Brown, B. J. The XZZX surface code. Nat. Commun. 12, 2172 (2021).

Bennett, C. H., DiVincenzo, D. P. & Smolin, J. A. Capacities of quantum erasure channels. Phys. Rev. Lett. 78, 3217 (1997).

Delfosse, N. & Nickerson, N. H. Almost-linear time decoding algorithm for topological codes. Quantum 5, 595 (2021).

Huang, S., Newman, M. & Brown, K. R. Fault-tolerant weighted union-find decoding on the toric code. Phys. Rev. A 102, 012419 (2020).

Delfosse, N. & Zémor, G. Linear-time maximum likelihood decoding of surface codes over the quantum erasure channel. Phys. Rev. Res. 2, 033042 (2020).

Barrett, S. D. & Stace, T. M. Fault tolerant quantum computation with very high threshold for loss errors. Phys. Rev. Lett. 105, 200502 (2010).

Madjarov, I. S. et al. High-fidelity entanglement and detection of alkaline-earth Rydberg atoms. Nat. Phys. 16, 857 (2020).

Ebadi, S. et al. Quantum phases of matter on a 256-atom programmable quantum simulator. Nature 595, 227 (2021).

Scholl, P. et al. Quantum simulation of 2D antiferromagnets with hundreds of Rydberg atoms. Nature 595, 233 (2021).

Beugnon, J. Two-dimensional transport and transfer of a single atomic qubit in optical tweezers. Nat. Phys. 3, 696 (2007).

Yang, J. et al. Coherence preservation of a single neutral atom qubit transferred between magic-intensity optical traps. Phys. Rev. Lett. 117, 123201 (2016).

Bluvstein, D. et al. A quantum processor based on coherent transport of entangled atom arrays. Nature 604, 451 (2022).

Breuckmann, N. P. & Eberhardt, J. N. Quantum low-density parity-check codes. PRX Quantum 2, 21 (2021).

Fowler, A. G., Mariantoni, M., Martinis, J. M. & Cleland, A. N. Surface codes: towards practical large-scale quantum computation. Phys. Rev. A 86, 032324 (2012).

Bravyi, S. & Kitaev, A. Universal quantum computation with ideal Clifford gates and noisy ancillas. Phys. Rev. A 71, 022316 (2005).

Fowler, A. G., Devitt, S. J. & Jones, C. Surface code implementation of block code state distillation. Sci. Rep. 3, 1 (2013).

Horsman, C., Fowler, A. G., Devitt, S. & Van Meter, R. Surface code quantum computing by lattice surgery. New J. Phys. 14, 123011 (2012).

Landahl, A. J. & Ryan-Anderson, C. Quantum computing by color-code lattice surgery. arXiv:1407.5103 (2014).

Li, Y. A magic state's fidelity can be superior to the operations that created it. New J. Phys. 17, 023037 (2015).

Luo, Y.-H. et al. Quantum teleportation of physical qubits into logical code spaces. Proc. Natl Acad. Sci. USA 118, e2026250118 (2021).

Cooper, A. et al. Alkaline-earth atoms in optical tweezers. Phys. Rev. X 8, 041055 (2018).

Norcia, M. A., Young, A. W. & Kaufman, A. M. Microscopic control and detection of ultracold strontium in optical-tweezer arrays. Phys. Rev. X 8, 041054 (2018).

Schine, N., Young, A. W., Eckner, W. J., Martin, M. J. & Kaufman, A. M. Long-lived Bell states in an array of optical clock qubits. Preprint at http://arxiv.org/abs/2111.14653 (2021).

Barnes, K. et al. Assembly and coherent control of a register of nuclear spin qubits. Nat. Commun. 13, 2779 (2022).

Yang, H.-X. et al. Realizing coherently convertible dual-type qubits with the same ion species. Preprint at http://arxiv.org/abs/2106.14906 (2021).

Allcock, D. T. C. et al. OMG blueprint for trapped ion quantum computing with metastable states. Appl. Phys. Lett. 119, 214002 (2021).

Darmawan, A. S. & Poulin, D. Tensor-network simulations of the surface code under realistic noise. Phys. Rev. Lett. 119, 040502 (2017).

Acknowledgements

We gratefully acknowledge Alex Burgers, Shuo Ma, Genyue Liu, Jack Wilson, Sam Saskin, and Bichen Zhang for helpful conversations, and Ken Brown, Steven Girvin and Mark Saffman for a critical reading of the manuscript. S.P. and J.D.T. acknowledge support from the National Science Foundation (QLCI grant OMA-2120757). J.D.T. also acknowledges additional support from ARO PECASE (W911NF-18-10215), ONR (N00014-20-1-2426), DARPA ONISQ (W911NF-20-10021) and the Sloan Foundation. S.K. acknowledges support from the National Science Foundation (QLCI grant OMA-2016136) and the ARO (W911NF-21-1-0012).

Author information

Contributions

J.D.T. and S.K. calculated the physical error model, and Y.W. and S.P. performed the error correcting code simulations. All authors discussed the results and contributed to writing the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review information

Nature Communications thanks the anonymous reviewer(s) for their contribution to the peer review of this work.

Additional information

Publisher's note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Wu, Y., Kolkowitz, S., Puri, S. et al. Erasure conversion for fault-tolerant quantum computing in alkaline earth Rydberg atom arrays. Nat Commun 13, 4657 (2022). https://doi.org/10.1038/s41467-022-32094-6

DOI: https://doi.org/10.1038/s41467-022-32094-6
