Abstract

Robots have transformed many industries, most notably manufacturing [1], and have the power to deliver tremendous benefits to society, such as in search and rescue [2], disaster response [3], health care [4] and transportation [5]. They are also invaluable tools for scientific exploration in environments inaccessible to humans, from distant planets [6] to deep oceans [7]. A major obstacle to their widespread adoption in more complex environments outside factories is their fragility [6,8]. Whereas animals can quickly adapt to injuries, current robots cannot ‘think outside the box’ to find a compensatory behaviour when they are damaged: they are limited to their pre-specified self-sensing abilities, can diagnose only anticipated failure modes [9], and require a pre-programmed contingency plan for every type of potential damage, an impracticality for complex robots [6,8]. A promising approach to reducing robot fragility involves having robots learn appropriate behaviours in response to damage [10,11], but current techniques are slow even with small, constrained search spaces [12]. Here we introduce an intelligent trial-and-error algorithm that allows robots to adapt to damage in less than two minutes in large search spaces without requiring self-diagnosis or pre-specified contingency plans. Before the robot is deployed, it uses a novel technique to create a detailed map of the space of high-performing behaviours. This map represents the robot’s prior knowledge about what behaviours it can perform and their value. When the robot is damaged, it uses this prior knowledge to guide a trial-and-error learning algorithm that conducts intelligent experiments to rapidly discover a behaviour that compensates for the damage. Experiments reveal successful adaptations for a legged robot injured in five different ways, including damaged, broken, and missing legs, and for a robotic arm with joints broken in 14 different ways. 
This new algorithm will enable more robust, effective, autonomous robots, and may shed light on the principles that animals use to adapt to injury.


References

1. Siciliano, B. & Khatib, O. Springer Handbook of Robotics (Springer, 2008).

2. Murphy, R. R. Trial by fire. Robot. Automat. Mag. 11, 50–61 (2004).

3. Nagatani, K. et al. Emergency response to the nuclear accident at the Fukushima Daiichi nuclear power plants using mobile rescue robots. J. Field Robot. 30, 44–63 (2013).

4. Broadbent, E., Stafford, R. & MacDonald, B. Acceptance of healthcare robots for the older population: review and future directions. Int. J. Social Robot. 1, 319–330 (2009).

5. Thrun, S. et al. Stanley: the robot that won the DARPA grand challenge. J. Field Robot. 23, 661–692 (2006).

6. Sanderson, K. Mars rover Spirit (2003–10). Nature 463, 600 (2010).

7. Antonelli, G., Fossen, T. I. & Yoerger, D. R. in Springer Handbook of Robotics (eds Siciliano, B. & Khatib, O.) 987–1008 (Springer, 2008).

8. Carlson, J. & Murphy, R. R. How UGVs physically fail in the field. IEEE Trans. Robot. 21, 423–437 (2005).

9. Blanke, M., Kinnaert, M., Lunze, J. & Staroswiecki, M. Diagnosis and Fault-Tolerant Control (Springer, 2006).

10. Sproewitz, A., Moeckel, R., Maye, J. & Ijspeert, A. Learning to move in modular robots using central pattern generators and online optimization. Int. J. Robot. Res. 27, 423–443 (2008).

11. Christensen, D. J., Schultz, U. P. & Stoy, K. A distributed and morphology-independent strategy for adaptive locomotion in self-reconfigurable modular robots. Robot. Auton. Syst. 61, 1021–1035 (2013).

12. Kober, J., Bagnell, J. A. & Peters, J. Reinforcement learning in robotics: a survey. Int. J. Robot. Res. 32, 1238–1274 (2013).

13. Verma, V., Gordon, G., Simmons, R. & Thrun, S. Real-time fault diagnosis. Robot. Automat. Mag. 11, 56–66 (2004).

14. Bongard, J., Zykov, V. & Lipson, H. Resilient machines through continuous self-modeling. Science 314, 1118–1121 (2006).

15. Kluger, J. & Lovell, J. Apollo 13 (Mariner Books, 2006).

16. Jarvis, S. L. et al. Kinematic and kinetic analysis of dogs during trotting after amputation of a thoracic limb. Am. J. Vet. Res. 74, 1155–1163 (2013).

17. Fuchs, A., Goldner, B., Nolte, I. & Schilling, N. Ground reaction force adaptations to tripedal locomotion in dogs. Vet. J. 201, 307–315 (2014).

18. Argall, B. D., Chernova, S., Veloso, M. & Browning, B. A survey of robot learning from demonstration. Robot. Auton. Syst. 57, 469–483 (2009).

19. Wolpert, D. M., Ghahramani, Z. & Flanagan, J. R. Perspective and problems in motor learning. Trends Cogn. Sci. 5, 487–494 (2001).

20. Santello, M. Postural hand synergies for tool use. J. Neurosci. 18, 10105–10115 (1998).

21. Rasmussen, C. E. & Williams, C. K. I. Gaussian Processes for Machine Learning (MIT Press, 2006).

22. Mockus, J. Bayesian Approach to Global Optimization: Theory and Applications (Kluwer Academic, 2013).

23. Borji, A. & Itti, L. Bayesian optimization explains human active search. Adv. Neural Inform. Process. Syst. 26, 55–63 (2013).

24. Grillner, S. The motor infrastructure: from ion channels to neuronal networks. Nature Rev. Neurosci. 4, 573–586 (2003).

25. Benson-Amram, S. & Holekamp, K. E. Innovative problem solving by wild spotted hyenas. Proc. R. Soc. Lond. B 279, 4087–4095 (2012).

26. Pouget, A., Beck, J. M., Ma, W. J. & Latham, P. E. Probabilistic brains: knowns and unknowns. Nature Neurosci. 16, 1170–1178 (2013).

27. Körding, K. P. & Wolpert, D. M. Bayesian integration in sensorimotor learning. Nature 427, 244–247 (2004).

28. Derégnaucourt, S., Mitra, P. P., Fehér, O., Pytte, C. & Tchernichovski, O. How sleep affects the developmental learning of bird song. Nature 433, 710–716 (2005).

29. Wagner, U., Gais, S., Haider, H., Verleger, R. & Born, J. Sleep inspires insight. Nature 427, 352–355 (2004).

30. Ito, M. Control of mental activities by internal models in the cerebellum. Nature Rev. Neurosci. 9, 304–313 (2008).

31. Kohl, N. & Stone, P. Policy gradient reinforcement learning for fast quadrupedal locomotion. In Proc. IEEE Int. Conf. on ‘Robotics and Automation’ (ICRA) 2619–2624 (IEEE, 2004).

32. Lizotte, D. J., Wang, T., Bowling, M. H. & Schuurmans, D. Automatic gait optimization with Gaussian process regression. In Proc. Int. Joint Conf. on ‘Artificial Intelligence’ (IJCAI) 944–949 (2007).

33. Tesch, M., Schneider, J. & Choset, H. Using response surfaces and expected improvement to optimize snake robot gait parameters. In Proc. IEEE/RSJ Int. Conf. on ‘Intelligent Robots and Systems’ (IROS) 1069–1074 (IEEE, 2011).

34. Calandra, R., Seyfarth, A., Peters, J. & Deisenroth, M. P. An experimental comparison of Bayesian optimization for bipedal locomotion. In Proc. IEEE Int. Conf. on ‘Robotics and Automation’ (ICRA) 1951–1958 (IEEE, 2014).

35. Mouret, J.-B. & Clune, J. Illuminating search spaces by mapping elites. Preprint at http://arxiv.org/abs/1504.04909 (2015).

Acknowledgements

We thank L. Tedesco, S. Doncieux, N. Bredeche, S. Whiteson, R. Calandra, J. Droulez, P. Bessière, F. Lesaint, C. Thurat, S. Ivaldi, C. Lan Sun Luk, J. Li, J. Huizinga, R. Velez, H. Mengistu, M. Norouzzadeh, T. Clune, and A. Nguyen for feedback and discussions. This work has been funded by the ANR Creadapt project (ANR-12-JS03-0009), the European Research Council (ERC) under the European Union’s Horizon 2020 research and innovation programme (grant agreement number 637972), and a Direction Générale de l’Armement (DGA) scholarship to A.C.

Author information


Contributions

A.C. and J.-B.M. designed the study. A.C. and D.T. performed the experiments. A.C., J.-B.M., D.T. and J.C. analysed the results, discussed additional experiments, and wrote the paper.


Ethics declarations

Competing interests

The authors declare no competing financial interests.

Extended data figures and tables

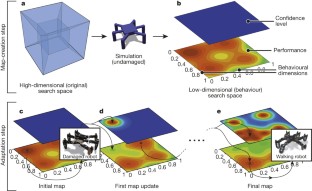

Extended Data Figure 1 An overview of the IT&E algorithm.

a, Behaviour–performance map creation. After being initialized with random controllers, the behaviour–performance map (a2), which stores the highest-performing controller found so far of each behaviour type, is improved by repeating the process depicted in a1 until newly generated controllers are rarely good enough to be added to the map (here, after 40 million evaluations). This step, which occurs in simulation (and required roughly two weeks on one multi-core computer; see Supplementary Methods, section ‘Running time’), is computationally expensive, but only needs to be performed once per robot (or robot design) before the robot is deployed. b, Adaptation. In b1, each behaviour from the behaviour–performance map has an expected performance based on its performance in simulation (dark green line) and an estimate of uncertainty regarding this predicted performance (light green band). The actual performance on the now-damaged robot (black dashed line) is unknown to the algorithm. A behaviour is selected for the damaged robot to try. This selection is made by balancing exploitation (trying behaviours expected to perform well) and exploration (trying behaviours whose performance is uncertain) (Supplementary Methods, section ‘Acquisition function’). Because all points initially have equal, maximal uncertainty, the first point chosen is that with the highest expected performance. Once this behaviour is tested on the physical robot (b4), the performance predicted for that behaviour is set to its actual performance, the uncertainty regarding that prediction is lowered, and the predictions for, and uncertainties about, nearby controllers are also updated (according to a Gaussian process model, see Supplementary Methods, section ‘Kernel function’), the results of which can be seen in b2. The process is then repeated until performance on the damaged robot reaches at least 90% of the maximum expected performance for any behaviour (b3). 
This performance threshold (orange dashed line) lowers as the maximum expected performance (the highest point on the dark green line) is lowered, which occurs when physical tests on the robot underperform expectations, as occurred in b2.
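A minimal sketch of the adaptation step in panel b, under stated assumptions: the simulated performances stored in the map act as the Gaussian-process prior mean, an upper-confidence-bound rule (`mu + kappa * sigma`) stands in for the acquisition function, and the loop stops once the best tested behaviour reaches 90% of the highest predicted performance. The Matérn 5/2 kernel, the values of `rho`, `kappa`, `noise` and `max_trials`, and the `evaluate_on_robot` callback are illustrative choices, not values taken from the Supplementary Methods.

```python
import numpy as np


def matern52(a, b, rho=0.4):
    """Illustrative assumption: Matérn 5/2 kernel with unit prior variance."""
    d = np.linalg.norm(a[:, None, :] - b[None, :, :], axis=-1)
    s = np.sqrt(5.0) * d / rho
    return (1.0 + s + s**2 / 3.0) * np.exp(-s)


def adapt(descriptors, sim_perf, evaluate_on_robot,
          kappa=0.05, noise=1e-3, alpha=0.9, max_trials=20):
    """Map-guided trial and error (sketch, not the authors' code).

    descriptors: (n, d) behaviour descriptors stored in the map.
    sim_perf: (n,) performance of each behaviour in simulation (prior mean).
    evaluate_on_robot: hypothetical callable, index -> measured performance.
    """
    tested, observed = [], []
    mu = sim_perf.astype(float).copy()
    sigma = np.ones_like(mu)  # every point starts with the same uncertainty
    for _ in range(max_trials):
        # Acquisition: favour high predicted performance and high uncertainty.
        i = int(np.argmax(mu + kappa * sigma))
        tested.append(i)
        observed.append(evaluate_on_robot(i))

        # GP posterior around the simulated prior mean.
        X = descriptors[tested]
        K = matern52(X, X) + noise * np.eye(len(tested))
        k_star = matern52(descriptors, X)  # shape (n, t)
        residual = np.array(observed) - sim_perf[tested]
        mu = sim_perf + k_star @ np.linalg.solve(K, residual)
        var = 1.0 - np.einsum("ij,ji->i", k_star, np.linalg.solve(K, k_star.T))
        sigma = np.sqrt(np.clip(var, 0.0, None))

        # Stopping rule: best measured value >= alpha * max predicted value.
        if max(observed) >= alpha * mu.max():
            break
    best = tested[int(np.argmax(observed))]
    return best, tested, observed
```

Because every point starts with the same prior uncertainty, the first trial is the map's best simulated behaviour, as described above; a larger `kappa` would push later trials further towards uncertain regions of the map.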

Extended Data Figure 2 The contribution of each subcomponent of the IT&E algorithm.

a, Adaptation progress versus the number of robot trials. The walking speed achieved with IT&E and several ‘knockout’ variants that are missing one of the algorithm’s key components (defined in the table below the plots). Some variants (4 and 5) correspond to state-of-the-art learning algorithms (policy gradient [31] and Bayesian optimization [32–34]). MAP-Elites (written by J.-B.M. and J.C.; ref. 35) is the algorithm that IT&E uses to create the behaviour–performance map (see Supplementary Methods). The bold lines represent the medians and the coloured areas extend to the 25th and 75th percentiles. b, c, Adaptation performance after 17 and 150 trials. Shown is the speed of the compensatory behaviour discovered by each algorithm after 17 and 150 evaluations on the simulated robot, respectively. For all panels, data are pooled across six damage conditions (the removal of each of the six legs in turn) for eight independently generated maps and replicated ten times (for a total of 480 replicates). P values are computed via a Wilcoxon rank-sum test. The central circle is the median, the edges of the box show the 25th and 75th percentiles, the whiskers extend to the most extreme data points that are not considered outliers, and outliers are plotted individually. See experiment 2 in the Supplementary Information for methods and analysis.
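MAP-Elites, which IT&E uses to build the behaviour–performance map, can be sketched in a few lines: the descriptor space is cut into a grid, each cell keeps the best controller found for it (its elite), and new controllers are random mutations of existing elites. This is a minimal sketch, not the authors' implementation; the grid resolution, Gaussian mutation, uniform initialization and the toy `toy_evaluate` function are hypothetical stand-ins for the hexapod simulation.

```python
import numpy as np


def map_elites(evaluate, dim, bins=10, n_init=100, n_iter=5000,
               sigma=0.1, seed=0):
    """Minimal MAP-Elites sketch.

    evaluate(x) -> (performance, descriptor), descriptor components in [0, 1].
    Returns a dict mapping grid cell -> (performance, controller).
    """
    rng = np.random.default_rng(seed)
    archive = {}

    def try_insert(x):
        perf, desc = evaluate(x)
        cell = tuple(np.minimum((np.asarray(desc) * bins).astype(int), bins - 1))
        # Keep the controller if its cell is empty or it beats the current elite.
        if cell not in archive or perf > archive[cell][0]:
            archive[cell] = (perf, x)

    for _ in range(n_init):  # random initial controllers
        try_insert(rng.uniform(0.0, 1.0, dim))
    for _ in range(n_iter):  # mutate a randomly chosen elite
        keys = list(archive)
        parent = archive[keys[rng.integers(len(keys))]][1]
        child = np.clip(parent + rng.normal(0.0, sigma, dim), 0.0, 1.0)
        try_insert(child)
    return archive


# Hypothetical toy problem: the first two parameters set the descriptor,
# the last two only affect performance.
def toy_evaluate(x):
    return -float(np.sum((x[2:] - 0.5) ** 2)), x[:2]


archive = map_elites(toy_evaluate, dim=4, bins=5, n_iter=2000)
```

The loop keeps running as long as new controllers still displace elites; the caption of Extended Data Fig. 1 describes stopping when such improvements become rare.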

Extended Data Figure 3 The IT&E algorithm is robust to environmental changes.

Each plot shows both the performance and required adaptation time for IT&E when the robot must adapt to walk on terrains of different slopes. a, Adaptation performance on an undamaged, simulated robot. On all slope angles, with very few trials, the IT&E algorithm (pink shaded region) finds fast gaits that outperform the reference gait (black dashed line). We performed ten replicates for each of the eight maps and each one-degree increment between −20° and +20° (a total of 3,280 experiments). b, Adaptation performance on a damaged, simulated robot. The robot is damaged by having each of its six legs removed in six different damage scenarios. Data are pooled from all six of these damage conditions. For each damage condition, we performed ten replicates for each of the eight maps and each two-degree increment between −20° and +20° (a total of 10,080 experiments). The median compensatory behaviour found via IT&E outperforms the median reference controller on all slope angles. The middle, black lines represent medians, while the coloured areas extend to the 25th and 75th percentiles. In a, the black dashed line is the performance of a classic tripod gait for reference. In b, the reference gait is tried in all six damage conditions and its median (black line) and 25th and 75th percentiles (black coloured area) are shown. See experiment 3 in the Supplementary Information for methods and analysis.
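The experiment totals follow from the design: a one-degree grid from −20° to +20° contains 41 slope values, and a two-degree grid contains 21.

```latex
\begin{aligned}
\text{(a)}\quad & 41~\text{slopes} \times 8~\text{maps} \times 10~\text{replicates} = 3{,}280 \\
\text{(b)}\quad & 21~\text{slopes} \times 8~\text{maps} \times 10~\text{replicates} \times 6~\text{damage conditions} = 10{,}080
\end{aligned}
```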

Extended Data Figure 4 The IT&E algorithm is largely robust to alternative choices of behaviour descriptors.

a, b, The speed of the compensatory behaviour discovered by IT&E for various choices of behaviour descriptors. Performance is plotted after 17 and 150 evaluations in a and b, respectively. Experiments were performed on a simulated, damaged hexapod. The damaged robot has each of its six legs removed in six different damage scenarios. Data are pooled across all six damage conditions. As described in experiment 5 of the Supplementary Information, the evaluated behaviour descriptors characterize the following: (1) time each leg is in contact with the ground (‘Duty factor’); (2) orientation of the robot frame (‘Orientation’); (3) instantaneous velocity of the robot (‘Displacement’); (4) energy expended by the robot in walking (‘Energy (Total)’, ‘Energy (Relative)’); (5) deviation from a straight line (‘Deviation’); (6) ground reaction force on each leg (‘GRF (Total)’, ‘GRF (Relative)’); (7) the angle of each leg when it touches the ground (‘Lower-leg angle (Pitch)’, ‘Lower-leg angle (Roll)’, ‘Lower-leg angle (Yaw)’); and (8) a random selection without replacement from subcomponents of all the available behaviour descriptors (1)–(7) (‘Random’). We created eight independently generated maps for each of 11 intentionally chosen behavioural descriptors (88 in total). We created one independently generated map for each of 20 randomly chosen behavioural descriptors (20 in total). For each of these 108 maps, we performed ten replicates of IT&E for each of the six damage conditions. In total, there were thus 8 × 11 × 10 × 6 = 5,280 experiments for the intentionally chosen behavioural descriptors and 1 × 20 × 10 × 6 = 1,200 experiments for the randomly chosen behavioural descriptors. For the hand-designed reference gait (yellow) and the compensatory gaits found by the default duty factor behaviour descriptor (green), the bold lines represent the medians and the coloured areas extend to the 25th and 75th percentiles of the data. 
For the other treatments, including the duty factor treatment, black circles represent the median, the edges of the boxes show the 25th and 75th percentiles, the whiskers extend to the most extreme data points that are not considered outliers, and outliers are plotted individually. See experiment 5 in the Supplementary Information for methods and analysis.
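The default ‘Duty factor’ descriptor can be computed from a record of foot contacts. A minimal sketch, assuming a hypothetical boolean contact log sampled at regular time steps (one row per step, one column per leg) and taking the descriptor as the fraction of steps each leg is on the ground; the tripod log below is synthetic:

```python
import numpy as np


def duty_factor(contacts):
    """Fraction of sampled time steps in which each leg touches the ground.

    contacts: (T, 6) boolean array, True where leg j is in contact at step t.
    Returns a length-6 vector in [0, 1].
    """
    contacts = np.asarray(contacts, dtype=bool)
    return contacts.mean(axis=0)


# Synthetic tripod gait: legs {0, 2, 4} and {1, 3, 5} alternate stance
# in 10-step half cycles.
T = 100
phase = (np.arange(T) // 10) % 2 == 0
tripod = np.column_stack([phase if leg % 2 == 0 else ~phase for leg in range(6)])
print(duty_factor(tripod))  # -> [0.5 0.5 0.5 0.5 0.5 0.5]
```

Each entry lies in [0, 1], so the six values can serve directly as coordinates in a six-dimensional map grid.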

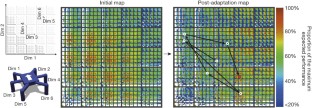

Extended Data Figure 5 How the behaviour–performance map (data normalized) is explored to discover a compensatory behaviour.

Data are normalized each iteration to highlight the range of remaining performance predictions. The robot is adapting to damage condition C4 (see Fig. 3a). Colours represent the performance prediction for each point in the map relative to the highest performing prediction in the map at that step of the process. A black circle indicates the next behaviour to be tested on the physical robot. A red circle indicates the behaviour that was just tested (note that the performance predictions surrounding it have changed versus the previous panel). Arrows show the order in which points were explored. The red circle in the last map is the final, selected, compensatory behaviour. In this scenario, the robot loses leg number 3. The six-dimensional space is visualized according to the inset legend.

Extended Data Figure 6 How the behaviour–performance map (data not normalized) is explored to discover a compensatory behaviour.

The data are not normalized, to highlight that performance predictions decrease as the robot discovers that predictions from the simulated, undamaged robot do not hold on the damaged robot. The robot is adapting to damage condition C4 (see Fig. 3a). Colours represent the performance prediction for each point in the map relative to the highest performing prediction in the first map. A black circle indicates the next behaviour to be tested on the physical robot. A red circle indicates the behaviour that was just tested (note that the performance predictions surrounding it have changed versus the previous panel). Arrows show the order in which points were explored. The red circle in the last map in the sequence is the final, selected, compensatory behaviour. In this scenario, the robot loses leg number 3. The six-dimensional space is visualized according to the inset legend. The data visualized in this figure are identical to those in the previous figure: the difference is simply whether the data are renormalized for each new map in the sequence.
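The only difference between Extended Data Figs 5 and 6 is the value used to scale the colours. A minimal sketch of both schemes, assuming a hypothetical list `predictions` holding one array of predicted performances per step, each with a positive maximum:

```python
import numpy as np


def normalize_per_step(predictions):
    """Extended Data Fig. 5 style: scale each step by its own maximum."""
    return [p / p.max() for p in predictions]


def normalize_to_first(predictions):
    """Extended Data Fig. 6 style: scale every step by the first map's maximum."""
    ref = predictions[0].max()
    return [p / ref for p in predictions]


# Hypothetical two-step example in which all predictions halve after a trial.
preds = [np.array([0.2, 0.4]), np.array([0.1, 0.2])]
per_step = normalize_per_step(preds)  # second step is [0.5, 1.0]
to_first = normalize_to_first(preds)  # second step is [0.25, 0.5]
```

Under the per-step scheme every panel peaks at 1, so a fall in predicted performance is visible only under the first-map scheme.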

Extended Data Figure 7 IT&E works on a completely different type of robot (the robotic arm experiment).

a, The robotic arm experimental setup. b, Tested damage conditions. c, Examples of behaviour–performance maps (colour maps) and behaviours (overlaid arm configurations) obtained with MAP-Elites. Left: A typical behaviour–performance map produced by MAP-Elites with five example behaviours, where a behaviour is described by the angle of each of the eight joints. The colour of each point is a function of its performance (in radians squared), which is defined as having low variance in the joint angles (that is, a zigzag arm has lower performance than a straighter arm that reaches the same point). Right: Neighbouring points in the map tend to have similar behaviours, thanks to the performance function, which would penalize more jagged ways of reaching those points. That neighbours have similar behaviours justifies updating predictions about the performance of nearby behaviours after testing a single behaviour on the real (damaged) robot. d, Accuracy (in metres) versus trial number for IT&E and traditional Bayesian optimization. The experiment was conducted on the physical robot, with 15 independent replications for each of the 14 damage conditions. Accuracy is pooled from all of these 14 × 15 = 210 experiments for each algorithm. The middle lines represent medians, while the coloured areas extend to the 25th and 75th percentiles. e, Success for each damage condition. Shown is the success rate for the 15 replications for each damage condition, defined as the percentage of replicates in which the robot reaches within 5 cm of the bin centre. f, Trials required to adapt. Shown is the number of iterations required to reach within 5 cm of the bin centre. g, Accuracy after 30 physical trials for each damage condition (with the stopping criterion disabled). 
For f and g, the central circle is the median, the edges of the box show the 25th and 75th percentiles, the whiskers extend to the most extreme data points that are not considered outliers, and outliers are plotted individually. See experiment 1 in the Supplementary Information for methods and analysis.
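Panels c, e and f rely on three quantities: where the gripper ends up, how evenly the joint angles are spread, and whether the gripper lands within 5 cm of the bin centre. A minimal sketch, assuming a hypothetical planar arm with eight equal links of length `link` (0.1 m here, not the real robot's dimensions), joint angles measured relative to the previous link, and performance taken as the negative variance of the joint angles:

```python
import numpy as np


def end_effector(angles, link=0.1):
    """Planar forward kinematics (hypothetical equal links): angles -> (x, y)."""
    headings = np.cumsum(angles)  # absolute orientation of each link
    return np.array([np.sum(link * np.cos(headings)),
                     np.sum(link * np.sin(headings))])


def performance(angles):
    """Assumed performance: negative joint-angle variance (rad^2).

    Straighter, smoother arms score higher than zigzag ones.
    """
    return -float(np.var(angles))


def reached(angles, bin_centre, tol=0.05):
    """Success if the gripper lands within tol metres of the bin centre."""
    error = np.linalg.norm(end_effector(angles) - np.asarray(bin_centre))
    return bool(error <= tol)
```

Because this performance measure ignores where the gripper points, two configurations reaching the same bin can still be ranked, which is what lets the map prefer smooth arms over jagged ones.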

Supplementary information

Supplementary Information

This file contains Supplementary Methods, Supplementary Experiments 1 to 5, full captions for Supplementary Videos 1–2, and Supplementary References. (PDF 499 kb)

Damage Recovery in Robots via Intelligent Trial and Error

This video shows the Intelligent Trial and Error Algorithm in action on the two experimental robots in this paper: a hexapod robot and a robotic arm (Fig. 3). The video shows several examples of the different types of behaviours produced during the behaviour–performance map creation step, from classic hexapod gaits to more unexpected forms of locomotion. It then shows how the hexapod robot uses that behaviour–performance map to adapt to damage that deprives one of its legs of power (Fig. 3a:C3). The video also illustrates how the Intelligent Trial and Error Algorithm finds a compensatory behaviour for the robot arm. Finally, adaptation to a second damage condition is shown for both the hexapod and robotic arm. (MP4 27802 kb)

A Behavior-Performance Map Containing Many Different Types of Walking Gaits.

In the behavior-performance map creation step, the MAP-Elites algorithm produces a diverse collection of different types of walking gaits. The video shows several examples of the different types of behaviors that are produced, from classic hexapod gaits to more unexpected forms of locomotion. (MP4 18899 kb)


About this article

Cite this article

Cully, A., Clune, J., Tarapore, D. et al. Robots that can adapt like animals. Nature 521, 503–507 (2015). https://doi.org/10.1038/nature14422


© Springer Nature Limited
