SUBSET ARMA MODEL IDENTIFICATION USING GENETIC

ALGORITHMS

By Carlo Gaetan

University of Padua

First version received July 1998

Abstract. Subset models are often useful in the analysis of stationary time series.

Although subset autoregressive models have received a lot of attention, the same

attention has not been given to subset autoregressive moving-average (ARMA) models,

as their identi®cation can be computationally cumbersome. In this paper we propose to

overcome this disadvantage by employing a genetic algorithm. After encoding each

ARMA model as a binary string, the iterative algorithm attempts to mimic the natural

evolution of the population of such strings by allowing strings to reproduce, creating

new models that compete for survival in the next population. The success of the

proposed procedure is illustrated by showing its ef®ciency in identifying the true model

for simulated data. An application to real data is also considered.

Keywords. Subset models; identi®cation; genetic algorithm; BIC.

1.

INTRODUCTION

Subset models are often useful in time series analysis, especially when the data

exhibit some form of periodic behaviour with a range of different natural

periods, for instance days, weeks, months and years.

Recentlly, a class of subset autoregressive (SAR) models has been proposed

by several reseachers, such as McClave (1975), Penm and Terrell (1982),

Haggan and Oyetunji (1984), Yu and Lin (1991) and Zhang and Terrell (1997).

A zero-mean stationary stochastic process fX t g is said to be a SAR model

of order k (k 1 , . . ., k p ), with k 1 , . . . , k p , if it satis®es the stochastic

difference equation

X t ÿ ök 1 X tÿ k 1 ÿ . . . ÿ ö k p X tÿ k p W t

(1)

where fW t g is a white noise sequence with zero mean and variance ó 2 . 0.

This model is a special case of the autoregressive (AR) model of order k p

with zero coef®cients for some intermediate lags. As AR models are the

simplest models to estimate amongst linear time series models and may be

easily used for forecasting purposes, selection procedures for SAR models have

received a lot of attention in the literature.

Usually the selection of the optimal subset model is based upon the

0143-9782/00/04 559±570

JOURNAL OF TIME SERIES ANALYSIS Vol. 21, No. 5

# 2000 Blackwell Publishers Ltd., 108 Cowley Road, Oxford OX4 1JF, UK and 350 Main Street,

Malden, MA 02148, USA.

�560

C. GAETAN

Kullback±Leibler information. For a linear time series model, this can be

approximated by the penalized function

log ó^ 2m m

C(T )

T

where m is the number of parameters in the linear stochastic difference equation

(1), ó^ 2m is the conditional maximum likelihood estimate of the residual variance

ó 2 and C(T ) is a function of the number T of observations. The Akaike

information criterion (AIC) (Akaike, 1973), the Bayesian information criterion

(BIC) (Schwartz, 1978) and the Hannan±Quinn criterion (Hannan and Quinn,

1979) correspond to the choices C(T ) 2, C(T ) log T and C(T )

2 log log T , respectively.

In the case of full AR models, the small sample properties of the criteria are

discussed by Hurvich and Tsai (1989), where it appears that among the

consistent criteria the BIC selects the true model more frequently.

In principle, without considering the computational effort, one can ®nd the

best SAR model of a speci®ed size m by comparing ó^ 2m among the SAR

models with all possible lag combinations and selecting the one with smallest

ó^ 2m . The penalized function is then used to identify the optimal SAR from the

previously selected SAR models by letting m vary.

McClave (1975) proposed an ef®cient algorithm to search for the optimal

SAR model without having to evaluate all possible lag combinations for a

speci®ed size. However, this means that if several models of size m have very

similar ó^ 2m, only one of them, the one with the smallest ó^ 2m , will be considered

in the ®nal comparison procedure.

On the other hand the diagnostic checking of different models with criterion

values near to optimum can be advisable. Therefore, Haggan and Oyetunji

(1984) developed an algorithm to evaluate all possible models ef®ciently. Since

the number of possible models increases exponentially, the Haggan±Oyetunji

method becomes less ef®cient when k p becomes large. In addition the unknown

parameter k p should be carefully selected before modelling.

Yu and Lin (1991) modi®ed the Haggan±Oyetunji method to overcome these

disadvantages. Their proposal is to use an iterative model-building approach.

First, a tentative model is chosen with a lag that has the most signi®cant

absolute value in the inverse correlation function. Then, this ®rst choice is

re®ned using he cross-correlation function (CCF) between the residuals and the

observed series to choose an additional lag that has the most signi®cant CCF

value.

More recently, Zhang and Terrell (1997) introduced a new statistic, the

projection modulus, and developed a new algorithm for selecting the optimal

SAR that is considerably more ef®cient than the McClave algorithm, in the

sense that fewer SAR models need to be included to ®nd the optimal one.

Moreover it is more accurate than the Yu±Lin method in the sense of a true

model being identi®ed with higher probability.

SAR models have received a lot of attention in the literature, but similar

# Blackwell Publishers Ltd 2000

�SUBSET ARMA MODEL IDENTIFICATION

561

attention has not been given to the subset selection for autoregressive movingaverage (ARMA) models. Nevertheless, these models play an important role in

time series analysis; see, for example, the so-called airline model (Box et al.,

1994)

X t ÿ X tÿ1 ÿ X tÿ12 X tÿ13 W t ÿ èW tÿ1 ÿ ÈW tÿ12 èÈW tÿ13 :

Selection of subset ARMA models is more complicated because the search

space of possible models is larger than in the previous case. It is easy to see,

for example, that the Yu±Lin and Zhang±Terrell search procedures are

infeasible. In addition, (conditional) maximum likelihood (ML) estimation

methods are onerous to apply.

In this paper we develop a selection procedure for subset ARMA models

based on innovation regression methods and stochastic binary search

algorithms. In particular we shall consider the genetic algorithms introduced

by Holland (1975) in order to perform the selection. There are several reasons

for the choice of a genetic algorithm. First, the space of the solutions (all

possible models) is ®nite, discrete but large. Second, each model can be coded

quite easily in terms of binary strings. In addition the ®nal population of

models generated by this algorithm allows us to consider many interesting

models.

The plan of the paper is as follows. In Section 2 we describe the selection

procedure that is a slight modi®cation of the Hannan±Rissanen method (1982).

In Section 3 the genetic algorithm is introduced and in Section 4 we discuss

particular features of the genetic algorithm suitable for the selection problem.

Some numerical examples with synthetic and real data are presented in Section

5. Conclusions are drawn in Section 6.

2.

SELECTION PROCEDURE

Denote k (k 1 , . . ., k p ), i (i1 , . . ., iq ), with k 1 , . . . , k p and i1 , . . .

, iq , and consider a zero-mean stationary stochastic process fX t g generated by

the ARMA(k, i) model

X t ÿ ö k 1 X tÿ k 1 ÿ . . . ÿ ö k p X tÿ k p W t ÿ èi1 W tÿi1 ÿ . . . ÿ èi q W tÿi q :

The model is assumed to be invertible.

Innovation regression methods for ARMA modelling (Hannan and Rissanen,

1982) basically consist of two steps that determine the orders as well as

estimate the parameters. The ®rst step is to ®t an AR model with high order

to the observations. The second step is to apply the least squares (LS) method to

the observations and the estimated innovations of the AR model in order to

estimate the ARMA parameters. Because the optimization problems in these

steps are quadratic, they are computationally cheaper than the ML method. We

shall use the steps of the Hannan±Rissanen procedure as follows.

# Blackwell Publishers Ltd 2000

�562

C. GAETAN

1. Fit an AR(n) model, using the Yule±Walker equations

n

X

á l X tÿ l W t :

Xt

l1

Hannan and Kavalieris (1984) recommended choosing the order n using the

~ 1 , . . ., á

~n

AIC, and the upper bound (ln T ) a, 0 , a , 1, is suggested for n. Let á

be the estimates of á1 , . . ., án . Then calculate the estimated innovations

n

X

~t Xt ÿ

~ l X tÿ l

á

t n 1, . . ., T :

Z

l1

2. Let P and Q be suf®ciently large so that they are greater than the true

maximum order for k and i, respectively. For each k and i such that k p < P

� of ö (ö k , . . ., ö k )9,

� and è,

and iq < Q calculate the LS estimates, ö

1

p

è (èi1 , . . ., èi q )9, minimizing

!2

q

p

T

X

X

1X

2

~ tÿi l

ö k j X tÿ k j

èi l Z

Xt ÿ

ó (ö, è)

T t t0

j1

l1

where t0 max(n k p , n iq ). Choose k � and i� minimizing

� ( p q) ln T :

� è)

BIC(k, i) ln ó 2 (ö,

T

Note that if P . n and Q . 0 the design matrix in the LS procedure could

not have full rank, so a sensible upper bound for P is n. We can reduce the

computations in the second stage substantially if we set t0

max(n P, n Q). For large T there will be no notable effects in BIC values.

In this case if the number 2 PQ of possible subset models is of order 107 , the

global optimum is obtainable using branch-and-bound techniques (Miller, 1990,

p. 63). However, for reasonably large P and Q an exhaustive optimum search

becomes impractical, so a random search algorithm can be very helpful, as we

shall see in the following sections.

3.

GENETIC ALGORITHMS

Genetic algorithms (GAs) are randomized global search techniques that are

based on the principles of natural selection to ®nd the maximum or other high

values of an objective function g. GAs differ from traditional search techniques

in that they use a chromosomal representation of the problem space, gather and

evaluate information on many points in the search space simultaneously, and use

recombination operators to preserve discovered information. Holland's schema

theorem (see, for example, Goldberg, 1989, p. 28) suggests that the genetic

structure of relatively good solutions can be combined to ®nd the genetic

# Blackwell Publishers Ltd 2000

�SUBSET ARMA MODEL IDENTIFICATION

563

structure of better solutions. Therefore, while GAs are randomized and

probabilistic, they do not perform a directionless search. Statistical applications

of GAs have been discussed by Chatterjee et al. (1996) and cover optimization

problems where the usual mathematical requirements, e.g. continuity, differentiability and convexity, do not apply. Relevant areas of application are variable

selection problems in regresion analysis (Leardi et al., 1992) and outlier

detection (Crawford and Wainwright, 1995; Baragona et al., 1997). The simplest

GA starts with a population of size N of binary encoded strings, or

chromosomes, of length L. Each of the L positions represents a gene and each

gene's value is randomly chosen to be zero or one. The string represents one

solution in the search space. A positive ®tness function f is calculated as a

monotone increasing function of g for each chromosome in the current

generation. Then N parents for the next generation are selected with

replacement, with the probability pj of choosing the jth chromosome in the

current population being proportional to its ®tness f j , i.e.

fj

pj P N

i1 f i

:

(2)

These parents are considered in pairs. For each pair a crossover operator

randomly selects a point between 1 and L with probability pc and two children

are created by exchanging the parent's genetic structure after the crossover point.

If not crossover takes place, the two children are formed that are exact copies of

their respective parents.

Afterwards a mutation operator is applied on each child's genes. This

operator ¯ips the bits with probability Pm. Then the children replace their

parents in the population.

The breeding cycle is iterated for a ®xed number of generations or until

some convergence criterion is met. On termination, the string in the ®nal

population with the best value of g can be returned as the solution to the

optimization problem. The theoretical basis for the convergence to global

optimum strings is given by Holland's schema theorem (but see Jenninson and

Sheehan (1995) for a critical discussion about this theorem). With a binary

representation, schemata are strings based on a ternary alphabet: 0, 1, and �

(do not care). However, since a good solution may be lost by the algorithm, a

more ef®cient strategy is to save the best solution found at any iteration and

return this as a solution. Variations of the simple GA and detailed explanations

of the mechanics are given by Goldberg (1989). The construction of a GA

requires speci®cation of the string representation and the population size N ,

choice of ®tness function, implementation of crossover and mutation operators,

and a methodology for creating the initial population. In the next section we

discuss these issues in tackling the identi®cation problem for subset ARMA

models.

# Blackwell Publishers Ltd 2000

�564

C. GAETAN

4.

APPLYING A GA TO THE IDENTIFICATION PROBLEM

Implementation of the GA, as described in Section 3, ®rst requires a string

representation. Each chromosome z (z1 , . . ., zPQ ) represents an ARMA

model. Its length is equal to P Q, i.e. to the maximum orders allowed in

stage 2. In this binary encoded string, the ®rst P genes correspond to the AR

parameters, the last Q to the MA ones, and a gene is 1 if the corresponding

parameter is not equal to zero. For instance, if P Q 5, the chromosome

z (0, 1, 0, 0, 0, 1, 0, 0, 1, 0) corresponds to the ARMA model

X t ÿ ö2 X tÿ2 W t ÿ è1 W tÿ1 ÿ è4 W tÿ4 :

After deciding on encoding, the second decision to make in using a GA is

how to perform selection. From a general point of view, the function f must

provide suf®cient selectivity to ensure that the algorithm prefers superior

solutions to the extent that it eventually reaches an optimal or near-optimal

answer. On the other hand, selectivity must not be so strong that populations

polarize at an early stage. In this case the potential advantages of maintaining a

diverse collection of ARMA models are lost. Since the BIC may take positive

values, the simple choice f ÿBIC is unsuitable as it provides negative

®tness. In the case of a negative value of f , Goldberg (1989, p. 76) suggests

using an adaptive form of the ®tness function, namely

f j M 1 ÿ BIC j :

(3)

BIC j is the BIC value for the jth chromosome in the current population, M is

the maximum value of BIC in the current population and the constant 1 is added

to ensure that any chromosome in the current population has a non-zero

probability of selection.

However, this choice is also not completely satisfactory. In fact our

experiments have shown that the ®tness function (3) can maintain the diversity

in the population for more than 100 generations but does not provide a high

enough level of selectivity in the later stages to move towards the best

solutions.

One can try to avoid this problem by using an `elitist selection' (ES) that

retains a number r of the best chromosomes in each generation and replaces

randomly chosen chromosomes with them. No speci®c rule for the choice is

given in the literature. We have found that r 2 is suf®cient.

Another selection scheme that we have experimented with is the `Boltzmann

selection' (BS) (Goldberg, 1990), in which a continuously varying `temperature' ä . 0 controls the rate of selection according to a preset schedule. In this

scheme the ®tness function is given by

�

�

BIC j

ä

:

(4)

f j exp ÿ

ä

A high starting temperature means that every chromosome has a reasonable

probability of reproduction. When the GA is running, the temperature is

# Blackwell Publishers Ltd 2000

�565

SUBSET ARMA MODEL IDENTIFICATION

gradually lowered, allowing the GA to narrow more closely to the best part of

the search space while maintaining the appropriate degree of diversity. The

classic schedule employs a constant temperature decrement of the form

ä k ä0 á k , with ä0 . 0 and 0:85 , á , 0:99. Given these guidelines, after

experimenting with several values we set á 0:95 and ä0 1.

Results showed little dependence on the choice of population size for values

of M in the range 20±40, and on the choice of the crossover probability for

values of pc in the range 0.5±0.9. Smaller values of pc caused a lack of ability

ot explore the entire space of solutions and premature convergence.

Low values for the probability of mutation pm are usually recommended in

order to avoid the disruption of good schemata. We chose pm 0:05.

Finally, according to the parsimony principle, we started from an initial

population with few parameters on average. So each string of the initial

population is chosen according to N independent trials from the Bernoulli

distribution with probability 0.3.

5.

NUMERICAL EXAMPLES

5.1. Synthetic data

The subset selection algorithm was applied to 100 independent simulations (each

with different sample sizes T 100, 300, 600, 1000) of the ARMA models

shown in Table I with W t � N (0, 1).

For each model we set the maximum orders P, Q. Since, in the ®rst step, the

selected order n of the AR model could be less than P, especially for small T ,

the chromosome length N is set equal to min(P, n) Q. The parmeters of the

GA are reported in Table II. We used the GA in a kind of deterministic

fashion, i.e. we ®xed the number of iterations equal to 100 and, before each

GA run, the seed of the pseudo-random generator was set to the same value,

regardless of the selection procedure. Thus for each of the 100 simulations the

procedure employed the same pseudo-random numbers and performed 3000

regressions.

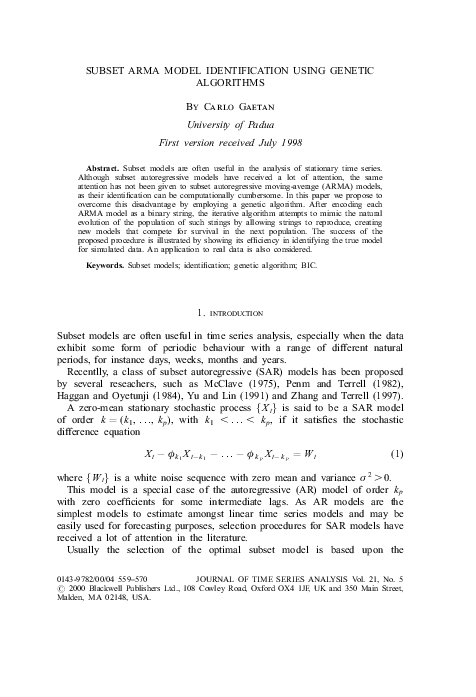

As an illustrative example we shall consider one of these simulations for

Model C with T 300. Figure 1 shows the minimum, the maximum and the

mean values of BIC evaluated in each population during 100 iterations with BS.

TABLE I

Design of Simulation Experiments

Model

A

B

C

AR

MA

ö1 0:8, ö3 ÿ0:4, ö12 0:2

±

ö1 0:8, ö3 0:4, ö12 0:2

±

è3 ÿ0:3, è9 0:6

è8 ÿ0:8

# Blackwell Publishers Ltd 2000

�566

C. GAETAN

TABLE II

Parameters of the GA

Model

A

B

C

P

14

3

14

Q

5

11

8

M

30

30

30

pc

0.8

0.8

0.8

pm

0.05

0.05

0.05

äk

r

k

0.95

0.95 k

0.95 k

2

2

2

Figure 1. Genetic algorithm iterations.

The outcomes of these simulations are summarized in Table III. Four

statistics, s1 , s2 , s3 , s4 , are used to summarize the results: s1 is the proportion

of simulations in which the true model is detected; s2 is the proportion of

simulations in which non-existent lags were erroneously included; s3 is the

proportion of simulations in which true lags were erroneously excluded; s4 is

the proportion of simulations in which we have erroneous exclusions and

inclusion of lags. The results indicate that no substantial difference exists

between BS and ES. Note that when T 100 the selection procedure performs

very badly. The result is not surprising, especially for Model A because the

condition P < n is seldom attained. In other cases the procedure seems to work

well.

To illustrate the ef®ciency of the GA with BS in identifying the true model,

we show in Table IV the mean and the standard deviation of the ML estimates

for Model C when T 300. The results indicate that mean values are close to

the true values used to generate the synthetic series.

# Blackwell Publishers Ltd 2000

�567

SUBSET ARMA MODEL IDENTIFICATION

TABLE III

Results from Simulation Experiments

BS

ES

Model

s1

s2

s3

s4

s1

s2

s3

s4

T 100

A

B

C

5

32

12

1

9

5

93

58

83

1

1

0

3

30

13

2

9

6

94

60

81

1

1

0

T 300

A

B

C

72

76

55

18

18

31

10

6

14

0

0

0

69

76

53

17

18

32

14

6

15

0

0

0

T 600

A

B

C

87

85

77

12

14

22

1

1

1

0

0

0

88

85

67

11

14

29

1

1

4

0

0

0

T 600

A

B

C

85

88

81

14

12

18

1

0

1

0

0

0

84

88

72

15

12

28

1

0

0

0

0

0

TABLE IV

Mean Values of the Estimtaed Parameters for Model C and T 300 (Standard

Deviation in Parentheses)

^ i , i 1, . . ., 14

ö

0.7900 (0.0415)

ÿ0.0014 (0.0140)

ÿ0.0071 (0.0304)

0.0063 (0.0319)

^

èi , i 1, . . ., 8

ÿ0.0016 (0.0105)

0.0000 (0.0000)

ÿ0.0030

0.0000

ÿ0.0008

ÿ0.0004

(0.0379)

(0.0000)

(0.0125)

(0.0209)

ÿ0.4040 (0.0464)

ÿ0.0051 (0.0353)

0.0106 (0.0439)

0.0000 (0.0000)

0.0023 (0.0315)

0.1789 (0.0800)

0.0024 (0.0145)

0.0002 (0.0115)

0.0023 (0.0230)

0.0004 (0.0035)

ÿ0.0009 (0.0093)

ÿ0.8134 (0.0950)

5.2. Real data

We consider the monthly sales of red wine by Australian winemakers from

Janurary 1980 through October 1991 (Brockwell and Davis, 1996). Figure 2

shows sample autorocorrelations and partial autocorrelations after taking natural

logarithms and differencing at lag 12. Inspecting the standard errors of the

coef®cient estimators, Brockwell and Davis (BD) suggest two different ARMA

models for the trnasformed data (see Table V). We ran the GA algorithm using

BS for 100 iterations with parameters P 14, Q 14, M 20, pc 0:8,

pm 0:05, ä k 0:95 k , r 2.

Note that in this case the number of possible subset models is of order 108 .

# Blackwell Publishers Ltd 2000

�568

C. GAETAN

Figure 2. Sample autocorrelation and partial autocorrelation functions of transformed wine data.

TABLE V

Wine Data Models

Model

BD: AR(12)

BD: MA(13)

GA:ARMA(8,12)

Non-zero coef®cients

BIC

AIC

ö1 , ö5 , ö8 , ö11 , ö12

è1 , è2 , è5 , è8 , è10 , è12 , è13

ö1 , ö5 , ö8 , è12

ÿ4.0823

Not invertible

ÿ4.2644

ÿ4.1997

ÿ4.4128

ÿ4.3583

Table V shows the best model found in these iterations. The sample

autocorrelation and partial autocorrelation functions of residuals (Figure 3)

indicate that an adequate and parsimonious model was obtained.

6.

CONCLUDING REMARKS

As a general comment we should say that, although we are able to report a

successful application of the GA to the subset selection of ARMA models,

further development remain to be explored. This paper deals primarily with

univariate ARMA models, but the procedure would be very useful in the case of

vector ARMAX models. The author is currently investigating extensions in this

direction. Secondly, another general purpose optimization algorithm is available

# Blackwell Publishers Ltd 2000

�SUBSET ARMA MODEL IDENTIFICATION

569

Figure 3. Sample autocorrelation and partial autocorrelation functions of residuals.

in the literature: the simulated annealing algorithm. An interesting study would

be to compare simulated annealing (Kirkpatrick et al., 1983) with GAs. Finally,

a natural question concerns the statistical properties of the ®nal population of

the GA.

REFERENCES

Akaike, H. (1973) Information theory and an extension of the maximum likelihood principle. In

2nd International Symposium on Information Theory (eds B. Petrov and F. Csaki). Budapest:

AkadeÂmiai KiadoÂ, pp. 267±81.

Baragona, B., Battaglia, F. and Calzini, C. (1997) Genetic algorithms for outlier identi®cation

in time series. Technical Report, Dipartimento di Sociologia, UniversitaÂ, `La Sapienza', Rome.

Box, G. E. P., Jenkins, G. M. and Reinsel, G. C. (1994) Time Series Analysis, Forecasting and

Control, Revised Edition. San Francisco, CA: Holden-Day.

Brockwell, P. J. and Davis, R. A. (1996) Introduction to Time Series and Forecasting. New

York: Springer.

Chatterjee, S., Laudato, M. and Lynch, L. A. (1996) Genetic algorithms and their statistical

applications: an introduction. Comput. Stat. Data Anal. 22, 633±51.

Crawford, K. D. and Wainwright, R. L. (1995) Applying genetic algorithms to outlier

detection. In Proceedings of the Sixth International Conference on Genetic Algorithms (ed. L. J.

Eshelman). San Mateo, CA: Morgan Kaufmann, pp. 546±50.

Goldberg, D. E. (1989) Genetic Algorithms in Search, Optimization and Machine Learning.

Reading, MA: Addison-Wesley.

б (1990) A note on Boltzmann tournament selection for genetic algorithms and populationoriented simulated annealing. Complex Syst. 4, 445±60.

# Blackwell Publishers Ltd 2000

�570

C. GAETAN

Haggan, V. and Oyetunji, O. B. (1984) On the selection of subset autoregressive time series

models. J. Time Ser. Anal. 5, 103±13.

Hannan, E. J. and Kavalieris, L. (1984) A method for autoregressive-moving average estimation.

Biometrika 72, 273±80.

б and Quinn, B. G. (1979) The determination of the order of an autoregression. J. R. Stat. Soc.,

Ser. B 41, 190±95.

б and Rissanen, J. (1982) Recursive estimation of mixed autoregressive-moving average order.

Biometrika 69, 81±94. Correction: 70 (1983), 303.

Holland, J. H. (1975) Adaptation in Natural and Arti®cial Systems. Ann Arbor MI: University of

Michigan Press.

Hurvich, C. M. and Tsai, C. L. (1989) Regression and time series model selection in small

samples. Biometrika 76, 297±307.

Jenninson, C. and Sheehan, N. (1995) Theoretical and empirical properties of the genetic

algorithm as a numerical optimizer. J. Comput. Gr. Stat. 4, 296±318.

Kirkpatrick, S., Gelatt, C. D. and Vecchi, M. P. (1983) Optimization by simulated annelaing.

Science 220, 671±80.

Leardi, R., Boggia, R. and Terrile, M. (1992) Genetic algorithm as a strategy for feature

selection. J. Chemomet. 6, 267±81.

McClave, J. (1975) Subset autoregression. Technometrics 17, 213±19.

Miller, A. J. (1990) Subset Selection in Regression. London: Chapman and Hall.

Penm, J. H. W. and Terrell, R. D. (1982) On the recursive ®tting of subset autoregressions.

J. Time Ser. Anal. 3, 43±59.

Schwartz, G. (1978) Estimating the dimension of a model. Ann Stat. 6, 461±64.

Yu, G. and Lin, Y. (1991) A methodology for selecting subset autoregressive time series models.

J. Time Ser. Anal. 12, 363±73.

Zhang, X. and Terrell, R. D. (1997) Projection modulus: a new direction for selecting subset

autoregressive models. J. Time Ser. Anal. 18, 195±212.

# Blackwell Publishers Ltd 2000

�

Carlo Gaetan

Carlo Gaetan