In this article, I look at the history of running untrusted code in browsers, contrasting WebAssembly to Java and Flash on an architectural level. To top it off, I will also show a Rust code example to demonstrate the sandboxing techniques of Wasm. I hope by reading this article, you will gain a new appreciation for the design decisions behind WebAssembly.

This is a continuation of the Wasm in the Wild series, where I go deep into how and why WebAssembly is used in practice. We are still at the beginning of the journey, covering the basics and building up an understanding of why Wasm was created in the first place. As we move along, episodes will be more practice-oriented and include code from real-world Wasm projects.

Introduction

Good morning, I hope you are ready for the next leg of our journey to the WebAssembly mountains. Previously, in Fundamentals of WebAssembly, we started at an altitude of 728 m and finished the day at 922 m. On the way there, I explained what Wasm is and what kind of work a Wasm runtime does. We briefly looked at the Wasm stack machine and its efficient type and memory checks.

Today, we will stay around the 920 m height while I tell you why sandboxed execution is a useful thing to have inside the browser. Then, we will go a bit lower and have a look at why WebAssembly is specifically suited for running in the browser. This will require a bit of history around in-browser code execution but will end with a zero-dependency, low-level example connecting JavaScript and Rust before we set up camp again.

When is WebAssembly the right tool?

Let us begin with the why. Why is WebAssembly, or Wasm for short, useful in any circumstances?

The fact is, Wasm is put to use in many contexts, ranging from the browser to cloud data centers, to embedded systems, and even to blockchains. They all use Wasm as a sandboxed execution environment. Portability and performance are often mentioned as advantages of Wasm over other sandboxing technologies. That’s great and I will try to back up this claim today by exploring some architectural decisions behind WebAssembly. But first I want to explain why we even need sandboxed execution in the first place.

The new question then is, why is sandboxing necessary? It mostly boils down to the difference between running a trusted installed program or running code that comes as input over the wire. It’s the difference between how much you trust your browser versus how much you trust a random website.

Your browser usually doesn’t need to run in a sandbox. Running it as a normal process directly managed by the operating system is fine. We generally trust the code to do nothing nefarious with that level of access to your system.

But the JavaScript code running inside the browser is a different beast. We should not trust that code, at all. The code is from an unknown source and executed immediately after loading a page. Every time you click on a link on the web, you essentially agree to download code from an unknown source and run it without further questions. This is scary.

That’s why it’s important JavaScript runs in what we call a sandbox. The sandbox isolates the code from the rest of your system. Technically speaking, this means JavaScript is not allowed to directly communicate with the operating system. A normal process would do that through system calls, which programmers usually use through the standard library of their language. This is necessary to access system resources like the filesystem, the network, a webcam, etc.

JavaScript, by design, simply has no way to initiate system calls. The language is not intended to interact with your operating system directly. Instead, code always has to go through explicit web APIs implemented by your browser. This is a very effective way of isolating code from the rest of the system while still allowing specific access through a protective API.

But sometimes running only JS in the browser is not enough. Maybe you have code written in a different language that you want to run in the browser. WebAssembly allows that other language to run inside a sandbox, as several older technologies did before it. I am here to tell you the tale of why Wasm has become the best choice to solve this problem.

This is where we take a sharp turn and start going down the history route.

Code execution in the browser

When JavaScript was first announced in 1995, it was the first time websites could run arbitrary code inside the browser. However, the capabilities were very limited. Basic DOM manipulation was possible but even that was not as flashy as you might think, since HTML was also much more basic at the time. JavaScript as a language was also much more barren, without classes or modules, let alone asynchronous execution. Oh and obviously, there was no ecosystem with packages and frameworks around it as we are so used to today.

Hence, it’s not very surprising that developers were looking into ways of taking full applications, written in “real” programming languages and running them in the browser. But then we run into the question of sandboxing again.

Let’s say your application is written in C. The standard library of C, also known as libc, assumes it is running as a process managed by an operating system and not by a browser. Retrofitting some kind of sandbox on top of that probably seemed like an impossible task. So instead, people looked to programming languages that run inside a runtime that can be used to sandbox the code but still grant more power to the code than what was possible in JavaScript at that time.

To make a long story short, Java Applets were born as one of the answers. Since Java was also new in 1995, the ecosystem was not necessarily larger than that of JS. But Java Applets ran in a separate process from the browser and could render a graphical window into a frame of the browser. Java Applets were essentially standalone applications that could also be started from inside a browser. They couldn’t access the DOM but that was not a huge loss. Developers didn’t really want to bother with the DOM anyway, since rendering pixels directly gave much tighter control to the frameworks implemented in Java.

With Java Applets, sandboxing is done inside the Java Virtual Machine (JVM) which loads Java Applets in a restricted environment. For example, filesystem access is forbidden and network requests are only allowed to the server that served the code, which keeps an applet from contacting arbitrary third-party hosts. And similar to how a Wasm runtime type-checks code before executing it, the JVM also checks the Java Bytecode before executing it, ensuring memory isolation and control flow integrity.

To make this work inside the browser, users needed to install a plugin by Sun Microsystems. This adds a fourth software-maintaining entity to the existing three (OS, website, browser) when a website is displayed. This means more chances of compatibility failures and security holes.

Alternative ecosystems were created, too. One around Adobe with the Flash Player plugin running primarily ActionScript code. Another from Microsoft with Silverlight running primarily C# code. They also rely on plugins to bring more powerful languages into the browser.

Plugins like these have fallen out of favor. All three mentioned above have been discontinued for many years now. Many modern web developers probably don’t realize how popular they once were, if they know about their existence at all. That’s how low they have fallen by 2024.

But what led to the fall of these giants? I wasn’t old enough to be a professional developer when this started to unfold, but from my research on the topic, I would say it was a number of things.

The first public draft of HTML5 was published in 2008 and introduced `<video>` and `<canvas>` among other things. With that, native support for highly interactive content started to arrive in browsers. Playing a video no longer required a plugin that could handle the real-time requirements of video decoding, nor did it require a special way of rendering pixels to the screen. The browser can do videos natively now and even JavaScript can render pixels to the screen for things like games.

Around the same time, smartphones became more popular, after the first iPhone was announced in 2007. The third-party, proprietary plugins struggled to adapt to the new requirements, such as touch input and energy efficiency. They might have been able to catch up if they had the support of the rising mobile platform software giants, Google and Apple. But both of them helped push forward open standards such as HTML5 instead of third-party plugins.

Case in point, Steve Jobs pointed out various technical shortcomings of code execution through browser plugins in his open letter Thoughts on Flash in 2010. With that, Apple certainly accelerated the move of the industry towards HTML5.

In the same letter, Jobs also made it clear what his most important reason for banning Flash from iPhones, iPods, and iPads was. He wanted apps to be tailored for Apple’s devices instead of cross-platform products that always lag behind in features and innovation. In other words, if an application is more sophisticated than a browser can handle already natively without plugins, then this application should become an app in the App Store instead of a website.

The rise of apps and app stores also changed how developers distributed code to users. What might have been Java Applets before, now became Apps, as the industry hype around mobile apps was at its peak. Indeed, “App” was voted word of the year in 2010 by the American Dialect Society, giving you a hint of the Zeitgeist back then.

And with that, the need for complex code in the browser simply disappeared and everybody lived happily ever after with JavaScript as the only code running in the browser.

Obviously, that’s not true. Maybe the old way of running non-JS code in the browser became obsolete with HTML5 and more potent JavaScript. But the software industry only grew since then and people are running more and more complex applications right in the browser.

JavaScript is more capable nowadays but it is still not enough for everyone. Even with all the new additions to the language, JS was fundamentally designed for simple DOM manipulations and not for high-performance code execution. JS code simply doesn’t translate well to CPU instructions, for many reasons.

As a demonstrative example, think about `const a = b + c;` in JavaScript. If those are integers, a CPU can do this addition in a single cycle. But in JS, numbers are double-precision floats by default, even when they hold integers, and floating-point addition is a more complicated operation. To make things worse, the JS runtime first has to look up the types of `b` and `c` to figure out if this even is an addition, or maybe something else. Here is a particularly stupid example, which is valid JS.

    const c = "10" + {};
    // c is now a string with content "10[object Object]", due to implicit conversions

A correct JS runtime has to handle this case in exactly this way. No cheating. This becomes a larger problem when wrapped inside a function.

    function add(a,b) {
        return a + b;
    }

    const c = add("10",{});

Even when `add` is executed with numbers, dynamic type checks are required inside `add` for correctness. The JIT compiler’s output has to either handle every possible combination of types or at least check for the unexpected ones and fall back to a slower path.
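To make that cost a bit more tangible, here is a small sketch of what the single `+` inside `add` has to cope with. The inputs are picked purely for illustration. The point is only that one function body ends up meaning four different operations, and the engine cannot know which one until it has inspected the operands.

```javascript
// One function body, four different meanings of `+`,
// all decided at runtime from the operand types.
function add(a, b) {
  return a + b;
}

const results = [
  add(1, 2),     // number + number: numeric addition
  add("1", 2),   // string + number: string concatenation
  add(1n, 2n),   // bigint + bigint: arbitrary-precision addition
  add([1], [2]), // array + array: both are converted to strings first
];

for (const r of results) {
  console.log(typeof r, r);
}
```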

Many of those type checks can be avoided through smarter and smarter optimizations in just-in-time compilers alongside new features in JavaScript, such as typed arrays. Frankly, it is surprising how well JS performs these days. A toast to the brilliant engineers behind the best JS engines. It is patchwork nevertheless, working around the fundamental limitations of JavaScript rather than removing them.

Speaking of patchwork, have you heard about asm.js? It first appeared in 2013, a time when third-party plugins were still holding on strong. Asm.js is a subset of JavaScript that restricts code in just the right way so that it can be executed faster by JavaScript engines.

To quote the asm.js specification:

Validation in asm.js limits JavaScript programs to only use operations that can be mapped closely to efficient data representations and machine operations of modern architectures, such as 32-bit integers and integer arithmetic.

This means asm.js code is written in a particular way that engines can easily construct proofs about the types and drop weird corner cases like the one I showed earlier.

In practice, this involves type casts to asm.js types, written as valid JS code. For example, if you want to let the engine know a variable is a 32-bit integer, you can do a bitwise OR with 0 to filter out objects and erase fractional parts of the number.

    function add(a,b) {
        const aIsInt32 = a|0;
        const bIsInt32 = b|0;
        return aIsInt32 + bIsInt32;
    }

    const c = add("10",{});
    // c is now a number with value 10, due to added type casts

In this example, `aIsInt32 + bIsInt32` can be translated to a single CPU instruction because the engine knows the result of a bitwise OR is always a whole number in the range representable by a 32-bit signed integer. If the entire program uses these typing rules, the engine can get rid of almost all dynamic type checks.
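If you want to see the coercion for yourself, the following sketch runs `| 0` over a handful of values. The sample values are my own choice and not taken from the asm.js specification. Whatever goes in, the result is an integer in the signed 32-bit range, which is exactly the guarantee the engine relies on.

```javascript
// `x | 0` applies the ToInt32 conversion, so the result is always
// an integer in the signed 32-bit range, whatever went in.
const samples = [3.7, -3.7, "10", {}, NaN, 2 ** 31, 2 ** 32 + 5];
const coerced = samples.map((x) => x | 0);

samples.forEach((x, i) => {
  console.log(String(x), "->", coerced[i]);
});
```

Fractions are truncated towards zero, anything that is not a number ends up as 0, and values outside the 32-bit range wrap around.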

At this point, I should mention that you aren’t really supposed to write code in asm.js by hand. Instead, you compile code written in another language and have a compiler generate this restricted subset of JavaScript code.

This approach overcomes many of the usual speed limits of JS and allows running code originally written in another language right inside the JS engine. So it kind of gives us everything we want from a language-agnostic, sandboxed runtime in the browser. As it turns out, to run C in the browser, we didn’t need to sandbox C. It works better to compile it to something that can run inside the JavaScript engine. Not only does this solve the sandboxing problem, it also works without a plugin. I think we can call this progress when compared to Flash and Java Applets in the browser.

But asm.js is a crazy hack if I have ever seen one. Saying it requires no plugin also makes it sound like nothing needs to happen on the browser side. But in reality, browser vendors still have to explicitly make use of these optimizations. This requires a degree of collaboration between browsers and standardization.

If we’re already going that route, why not start with a blank slate and design something without the hackery? Asm.js has shown what is possible inside JS engines. Now it’s just a matter of clearing that path and turning it from a hack into a clean standard.

In 2017, WebAssembly released its first version. As with asm.js, you compile other languages to it and then run it inside the JavaScript engine. And of course, it is an open standard integrated natively in all browsers, rather than proprietary third-party software installed through plugins, as it was done in the past.

From the beginning, WebAssembly was designed to be fast to compile, fast to initialize the runtime, and fast to execute. I’ve explained previously how the stack-machine approach helps with fast compilation to native code. And fast execution essentially comes down to what I just explained about why asm.js is faster than normal JavaScript.

There is also something to be said about how Wasm binaries are structured. This is not the time to dig too deep but let me give you at least the high-level talking points.

Unlike asm.js, Wasm code is compact. Types are encoded efficiently: describing a value as `i32` takes a single byte in the transmitted binary, rather than something like `const x = a|0;`.
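To put a number on that, here is a complete Wasm module written out by hand as a sketch. It exports one function, `add`, taking two `i32` values and returning an `i32`. The module and the asm.js one-liner next to it are my own illustration, and the size comparison is rough at best since the asm.js string is only a function and not a full asm.js module. Still, it shows that each `i32` in the signature is the single byte `0x7f`.

```javascript
// A complete Wasm module exporting add(i32, i32) -> i32, written out by hand.
const bytes = new Uint8Array([
  0x00, 0x61, 0x73, 0x6d, 0x01, 0x00, 0x00, 0x00, // magic "\0asm", version 1
  0x01, 0x07, 0x01, 0x60, 0x02, 0x7f, 0x7f, 0x01, 0x7f, // type section: (i32, i32) -> i32
  0x03, 0x02, 0x01, 0x00, // function section: one function of type 0
  0x07, 0x07, 0x01, 0x03, 0x61, 0x64, 0x64, 0x00, 0x00, // export section: "add" = function 0
  0x0a, 0x09, 0x01, 0x07, 0x00, 0x20, 0x00, 0x20, 0x01, 0x6a, 0x0b, // code: local.get 0, local.get 1, i32.add, end
]);

// The signature sits in the type section: 01 60 02 7f 7f 01 7f.
const signature = bytes.slice(10, 17);
const i32Count = signature.filter((b) => b === 0x7f).length;

// Roughly the same function in asm.js style (illustration only).
const asmJsSource = "function add(a,b){a=a|0;b=b|0;return (a+b)|0}";

console.log("valid Wasm module:", WebAssembly.validate(bytes));
console.log("whole Wasm module, in bytes:", bytes.length);
console.log("i32 type bytes in the signature:", i32Count);
console.log("asm.js function alone, in characters:", asmJsSource.length);
```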

Another impressive quality of Wasm is that it can be compiled incrementally. If downloading the Wasm code takes let’s say one second, the JavaScript engine will not just wait for it to finish downloading. Wasm binaries are divided into sections in a predictable order, with headers describing the size and content of each section. Thus, memory allocations for a section can happen as soon as the header bytes for the section are read.
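The section layout is simple enough to walk by hand. The sketch below is a toy reader, not how a real engine is implemented, and it uses a tiny hand-written module as assumed input. Each section starts with a one-byte id followed by its size as an unsigned LEB128 number, so a reader can learn how large every section is and skip ahead without decoding any section body.

```javascript
// Hand-written example module: exports add(i32, i32) -> i32.
const bytes = new Uint8Array([
  0x00, 0x61, 0x73, 0x6d, 0x01, 0x00, 0x00, 0x00, // magic, version
  0x01, 0x07, 0x01, 0x60, 0x02, 0x7f, 0x7f, 0x01, 0x7f, // type section
  0x03, 0x02, 0x01, 0x00, // function section
  0x07, 0x07, 0x01, 0x03, 0x61, 0x64, 0x64, 0x00, 0x00, // export section
  0x0a, 0x09, 0x01, 0x07, 0x00, 0x20, 0x00, 0x20, 0x01, 0x6a, 0x0b, // code section
]);

// Read an unsigned LEB128 integer starting at `pos`.
function readVarUint(buf, pos) {
  let value = 0;
  let shift = 0;
  let byte;
  do {
    byte = buf[pos++];
    value |= (byte & 0x7f) << shift;
    shift += 7;
  } while (byte & 0x80);
  return { value: value >>> 0, next: pos };
}

// Walk the section headers without looking inside any section body.
function listSections(buf) {
  const sections = [];
  let pos = 8; // skip magic number and version
  while (pos < buf.length) {
    const id = buf[pos];
    const size = readVarUint(buf, pos + 1);
    sections.push({ id, size: size.value, offset: size.next });
    pos = size.next + size.value; // jump straight to the next header
  }
  return sections;
}

const sections = listSections(bytes);
console.log(sections);
```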

But there is more pipelining going on. The runtime can also compile each Wasm function to native code without knowledge of the Wasm code that comes afterward. All types it needs must have been declared before the function body, which means even if it calls a function that is defined later, at least the type of it is known and hence all information for compilation is available.

Structured control flow is also key for this to work since a `goto`-like operation with arbitrary jump targets would break function isolation. This topic deserves a more detailed explanation, just not right now. Today, I’d rather show you how this nice design of Wasm can be integrated into the browser. So let’s get a bit deeper into the weeds now!

To support Wasm in JS, the W3C added a handful of new tricks to the JavaScript Web API.

`WebAssembly.compile` is used to prepare a `WebAssembly.Module` from binary Wasm code. The module is basically an executable, type-checked and translated to the native CPU architecture. Then, `WebAssembly.instantiate` allows creating a `WebAssembly.Instance`, which is like a running process, including all its memory and other state. As a bonus, there is also `WebAssembly.instantiateStreaming`, which does both steps in one go, starting compilation before the full Wasm code has even finished downloading, as I promised you earlier.
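As a quick sketch of the two-step path, the snippet below compiles a tiny hand-written module and then instantiates it. The byte array and the name `loadAdd` are made up for this illustration. The bytes stand in for a real downloaded binary and encode a single exported function `add` that adds two `i32` values.

```javascript
// Hand-written example module: exports add(i32, i32) -> i32.
const bytes = new Uint8Array([
  0x00, 0x61, 0x73, 0x6d, 0x01, 0x00, 0x00, 0x00, // magic, version
  0x01, 0x07, 0x01, 0x60, 0x02, 0x7f, 0x7f, 0x01, 0x7f, // type section
  0x03, 0x02, 0x01, 0x00, // function section
  0x07, 0x07, 0x01, 0x03, 0x61, 0x64, 0x64, 0x00, 0x00, // export section
  0x0a, 0x09, 0x01, 0x07, 0x00, 0x20, 0x00, 0x20, 0x01, 0x6a, 0x0b, // code section
]);

async function loadAdd() {
  // Step 1: validate and compile. The Module holds code but no state,
  // so it can be instantiated any number of times.
  const compiled = await WebAssembly.compile(bytes);
  console.log(WebAssembly.Module.exports(compiled));

  // Step 2: instantiate. When given a Module, instantiate() resolves
  // to the Instance directly. This module needs no imports.
  const instance = await WebAssembly.instantiate(compiled, {});
  return instance.exports.add;
}

loadAdd().then((add) => console.log(add(2, 3)));
```

Keeping the two steps apart is mostly useful when the same module should be instantiated more than once, since compilation only has to happen a single time.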

JavaScript can now call the exported functions of the Wasm module. And the Wasm code can use imported functions, injected from JS, to go the other way. Let’s see this in a minimal but complete example.

Starting with the Wasm module, here is a small Rust program that can be compiled into Wasm.

    // lib.rs
    extern "C" {
        #[link_name = "print_char"]
        fn js_print_wrapper(c: u32);
    }

    #[export_name = "hello_world"]
    pub fn hello_world() {
        for c in "hello world!".chars() {
            unsafe {
                js_print_wrapper(c as u32);
            }
        }
    }

This example prints “hello world!” character by character. To access the JS console, we have to go through an injected JS helper function, which the Rust code calls `js_print_wrapper`. Wasm doesn’t have a string type, so doing it letter by letter is simpler.

The `extern "C"` section defines what we want to import from JavaScript. Don’t worry too much about the “C”, it’s just telling the compiler that we want to use standard calling conventions the same way a C program would expect it.

The `link_name` attribute lets us define the name we expect from the JS code. It’s completely optional. The function name from the Rust source code will be used in the import if left unspecified.

The `hello_world` function is exported to JS. With the `export_name` attribute, we can again define the name used on the interface. This is not entirely optional. If you put nothing there, the compiler might decide to “optimize” your code by deleting the entire function. Use `#[no_mangle]` if you want to ensure the function is included with the exact name used in Rust, or make it explicit with `export_name`, as I did in the example.

Now we can compile this to a Wasm module using cargo.

    # add wasm target if not installed yet
    rustup target add wasm32-unknown-unknown

    # compile to Wasm
    cargo build --release --target wasm32-unknown-unknown

Running this puts `hello_js.wasm` in my target directory. I copy it to a different directory, where I create a JS file.

    // main.js
    async function fetchAndRunWasm() {
        const wasmModule = await WebAssembly.instantiateStreaming(fetch('hello_js.wasm'), {
            env: {
                print_char: (c) => {
                    // c is a Unicode code point (a Rust char cast to u32)
                    const char = String.fromCodePoint(c);
                    console.log(char);
                }
            }
        });

        // Get the exports from the Wasm module
        const { hello_world } = wasmModule.instance.exports;

        // Call the exported function
        hello_world();
    }

    fetchAndRunWasm();

In this code, `fetch('hello_js.wasm')` creates a promise that resolves to the response carrying the Wasm binary on success. `instantiateStreaming` accepts this as the first argument. The second argument injects the imports to Wasm, in this case a function that prints a character to the JS console.

Once the module is loaded and instantiated, the code calls the exported `hello_world` function.
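One practical catch with `instantiateStreaming` is that it rejects responses that are not served with the `application/wasm` MIME type, which some simple static file servers don’t set. The helper below is a hypothetical sketch of a fallback and not part of the example project. The name `instantiateFromResponse` is made up. It streams when it can and otherwise downloads the full binary first.

```javascript
// Hypothetical helper, not part of the article's example code.
async function instantiateFromResponse(response, imports) {
  const type = (response.headers.get("Content-Type") || "").trim().toLowerCase();
  const canStream =
    typeof WebAssembly.instantiateStreaming === "function" &&
    type === "application/wasm";

  if (canStream) {
    // Compiles while the body is still downloading.
    return WebAssembly.instantiateStreaming(response, imports);
  }

  // Fallback: wait for the full download, then compile and instantiate.
  const buffer = await response.arrayBuffer();
  return WebAssembly.instantiate(buffer, imports);
}
```

In the example above, it would be called as `instantiateFromResponse(await fetch('hello_js.wasm'), { env: { ... } })`. Both paths resolve to an object with `module` and `instance` fields, so the rest of the code stays the same.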

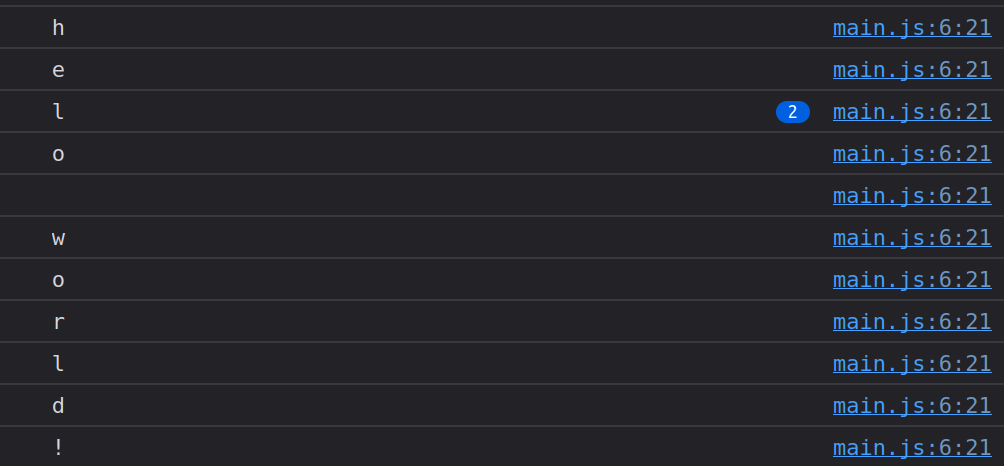

If I load this in an HTML file, Firefox shows me the Hello World output with one character per line, as expected.

The example above should show how easy it is to call Rust code from JS and vice versa. But it should also show how limited it is. Wasm can’t even access the JS console, nor can it pass entire strings to JS.

We can do better and print the output in a single line if we reach a bit deeper in our toolbox. As noted before, we cannot directly pass a Rust string to JS. But we can pass a pointer into Wasm memory and a length!

    // lib.rs
    extern "C" {
        #[link_name = "print_string"]
        fn js_print_wrapper2(start: *const u8, len: u32);
    }

    #[export_name = "hello_world_2"]
    pub fn hello_world_2() {
        static MSG: &str = "hello world!";
        unsafe {
            js_print_wrapper2(MSG.as_ptr(), MSG.len() as u32);
        }
    }

Yeah, so this gives JS a pointer into Wasm memory. You know what we can do with that? We can create a `Uint8Array` and decode the content to text using a `TextDecoder`.

    const buf = new Uint8Array(memory.buffer, start, len);
    const msg = new TextDecoder('utf8').decode(buf);
    console.log(msg);

The values for `start` and `len` have been passed as arguments to `js_print_wrapper2`. But where does `memory.buffer` come from?

You see, when we call `instantiateStreaming`, the JS engine allocates a contiguous memory region for the Wasm module. And the Wasm module exports this memory region. I will spare you the details for another time but the default name for the export when we compile with cargo is just “memory”. Hence, we can access the Wasm memory region as `wasm.instance.exports.memory`.

    const { hello_world, hello_world_2, memory } = wasm.instance.exports;

With this memory instance, we can access everything in linear Wasm memory. So, when the Rust code produces a pointer to the static string “hello world!” using `MSG.as_ptr()`, the produced number is the actual byte offset in the memory buffer where this string is stored.
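The pointer-as-offset idea can be tried without compiling any Rust. In the sketch below, a `WebAssembly.Memory` created from JS stands in for the memory a real instance would export, and the offset 1024 is an arbitrary assumption. It also shows one detail worth knowing: `memory.buffer` is replaced whenever the memory grows, so views should be created fresh on each access rather than cached.

```javascript
// Stand-in for the memory a Wasm instance would export (assumption:
// one 64 KiB page, created from JS so the sketch runs without a .wasm file).
const memory = new WebAssembly.Memory({ initial: 1 });

// Pretend the Wasm side stored a string at byte offset 1024.
const start = 1024;
const encoded = new TextEncoder().encode("hello world!");
new Uint8Array(memory.buffer).set(encoded, start);

// A "pointer" from Wasm is just this offset; JS reads it through a view.
function readWasmString(ptr, len) {
  // Create the view on every call: memory.buffer is replaced when memory grows.
  return new TextDecoder().decode(new Uint8Array(memory.buffer, ptr, len));
}

const oldBuffer = memory.buffer;
const msg = readWasmString(start, encoded.length);

memory.grow(1); // add one more page; the old ArrayBuffer is detached
const msgAfterGrow = readWasmString(start, encoded.length);

console.log(msg, "|", msgAfterGrow);
console.log("old buffer bytes:", oldBuffer.byteLength, "new buffer bytes:", memory.buffer.byteLength);
```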

Putting everything together, this is the new JS code calling both the old and new hello world method.

    // index.js
    async function fetchAndRunWasm() {
        // Function will be overwritten once we have a memory instance to access.
        let readWasmString = (_start, _len) => "";

        // functions to inject into Wasm
        const imports = {
            print_char: (c) => {
                // c is a Unicode code point (a Rust char cast to u32)
                const char = String.fromCodePoint(c);
                console.log(char);
            },
            print_string: (start, len) => {
                const msg = readWasmString(start, len);
                console.log(msg);
            }
        };

        // efficiently download and load Wasm module instance
        const wasm = await WebAssembly.instantiateStreaming(fetch('hello_js.wasm'), {
            env: imports,
        });

        // Get the exports from the Wasm module, functions and memory
        const { hello_world, hello_world_2, memory } = wasm.instance.exports;

        // overwrite `readWasmString` to access the correct Wasm memory buffer
        readWasmString = (start, len) => {
            // Read data directly from Wasm memory
            const buf = new Uint8Array(memory.buffer, start, len);
            return new TextDecoder('utf8').decode(buf);
        };

        // Call the exported functions
        hello_world();
        hello_world_2();
    }

    fetchAndRunWasm();

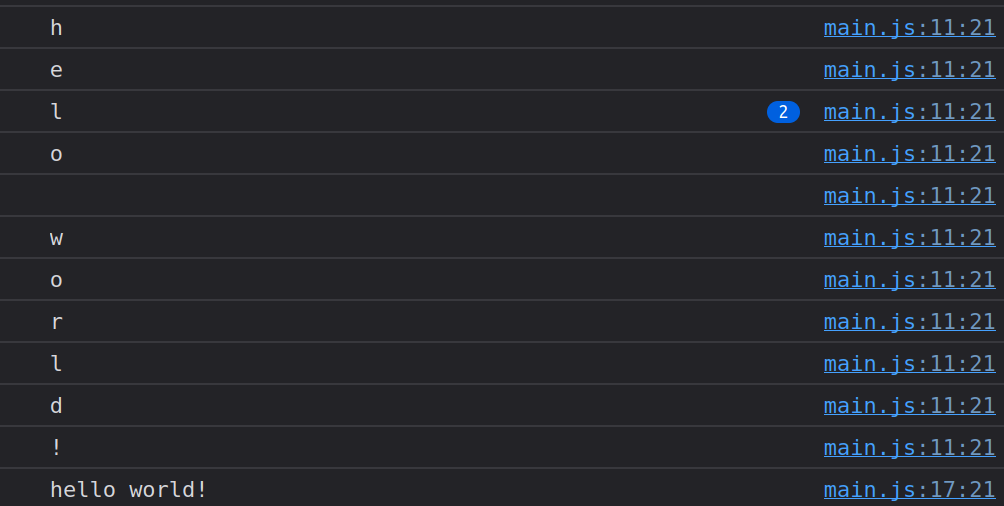

Running this will now also print “hello world!” on a single line.

The full code of the example is available on GitHub.

This approach with explicit imports and exports is a sound idea for proper sandboxing if you ask me. It gives no access by default but enables developers to do anything they want through JS. In that sense, Wasm is more restricted by default than a Java Applet. With the right set of JS functions injected, however, Wasm can do much more than what Java Applets could do at their height.
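Here is a small sketch of what “no access by default” means in practice. The module is hand-written for this illustration and is not the Rust example from above. It imports `print_char` from `env` and exports a function `run` that calls it with the value 42. I use the synchronous constructors to keep the sketch short, which is fine for a module this tiny.

```javascript
// Hand-written module (assumed example): imports env.print_char(i32)
// and exports run(), which calls print_char(42).
const bytes = new Uint8Array([
  0x00, 0x61, 0x73, 0x6d, 0x01, 0x00, 0x00, 0x00, // magic, version
  0x01, 0x08, 0x02, 0x60, 0x01, 0x7f, 0x00, 0x60, 0x00, 0x00, // types: (i32) -> (), () -> ()
  0x02, 0x12, 0x01, 0x03, 0x65, 0x6e, 0x76, // import section, module "env"
  0x0a, 0x70, 0x72, 0x69, 0x6e, 0x74, 0x5f, 0x63, 0x68, 0x61, 0x72, 0x00, 0x00, // "print_char", function of type 0
  0x03, 0x02, 0x01, 0x01, // one local function of type 1
  0x07, 0x07, 0x01, 0x03, 0x72, 0x75, 0x6e, 0x00, 0x01, // export "run" = function 1
  0x0a, 0x08, 0x01, 0x06, 0x00, 0x41, 0x2a, 0x10, 0x00, 0x0b, // body: i32.const 42, call 0, end
]);

const compiled = new WebAssembly.Module(bytes);

// Without the import, instantiation fails: the module gets nothing by default.
let linkError = null;
try {
  new WebAssembly.Instance(compiled, { env: {} });
} catch (e) {
  linkError = e;
}
console.log("failed to link without import:", linkError instanceof WebAssembly.LinkError);

// With the import, the module can reach exactly this one function.
const received = [];
const instance = new WebAssembly.Instance(compiled, {
  env: { print_char: (c) => received.push(String.fromCodePoint(c)) },
});
instance.exports.run();
console.log(received);
```

The module cannot even be instantiated until the host decides what `print_char` is, and once it runs, that function is the only way out of the sandbox.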

Now, if you want to use Wasm in production, I would not recommend doing direct memory access by hand. This was just to show how things work under the hood. Passing strings or printing to the console can be done much more easily with existing tooling. wasm-bindgen for Rust helps a lot. Through it, you get wrappers around all Web APIs, usually with nice Rust types.

It’s a similar story with emscripten, an LLVM-based toolchain to compile a range of languages to WebAssembly. It also provides wrappers for many Web APIs. But they take it one step further by also providing a libc implementation as part of the toolchain. In that case, you don’t even have to worry about specific Web APIs. All the necessary JavaScript adapter code to translate from libc to Web APIs gets bundled in for you.

You want to open a file? Emscripten creates a virtual filesystem for you. Need to open a TCP connection to a server? Well, that’s a bit more tricky since there is no Web API for TCP connections. But emscripten has you covered! They will emulate it over a WebSocket, which works if the server is set up for it, too.

It’s not recommended to use this if you can also use Web-native protocols, like directly using WebSockets. But if you have existing code and don’t want to port it all to Web APIs, this is a really awesome way to get it done quickly. Just take your existing code that was never meant to run in the browser, compile it through emscripten, and it might just work in the browser.

So much about how Wasm sandboxing in the browser works. In future articles, I plan to show practical examples of this in action. There are so many fascinating topics to cover in great depth. Like, how can we set up dynamic event handlers from inside Wasm? Or, how does memory ownership work across the JS and Rust boundary? I’ve collected plenty of gotchas from my own experience to share and I hope you will enjoy reading about them. But that is for another day.

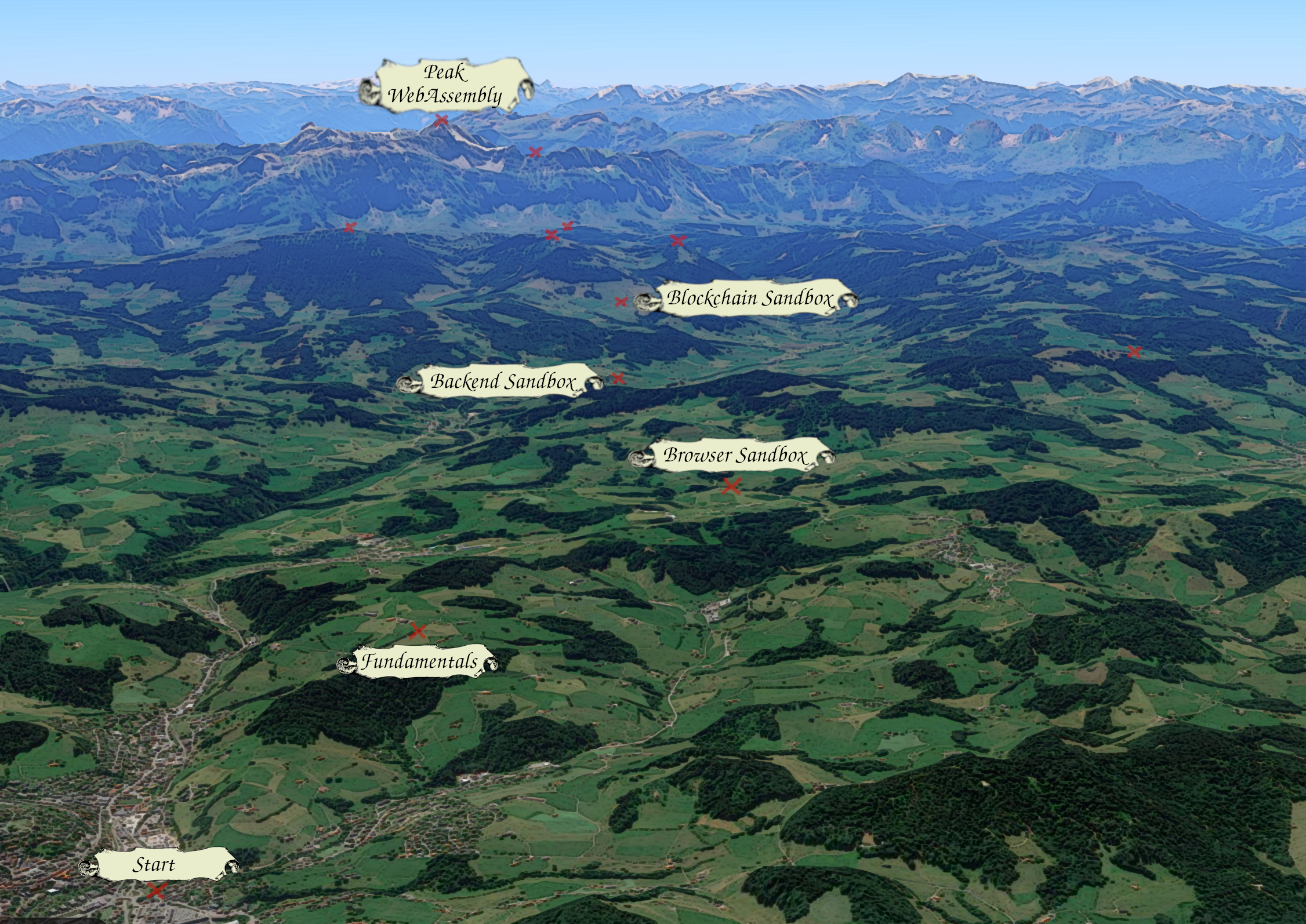

We reached our destination for today, at an altitude of 850 m above sea level. How did you enjoy the journey so far? If you have any feedback, please don’t hesitate to write a comment on Reddit or drop me a message at inbox@jakobmeier.ch.

I have already mapped out the journey for the next two legs. It will bring us much closer to the WebAssembly mountains and up to a height of 1100 m. You can expect the corresponding articles to be finished within the next couple of weeks.

Beyond that, I only know that the terrain will become more rocky. I plan to lead you through slightly more challenging paths compared to today, with more focus on technical deep dives. This should eventually bring us to Peak WebAssembly, at an altitude of 2500 m. But the actual path we will take depends on your feedback. Please, let me know what you find useful and what I can skip.

Thanks for reading to the end! I hope it was worth your time.