Why and how are people running WebAssembly on the backend? I make the case that it's simply the best way to run sandboxed code written in systems languages, and explain why you should consider using it in your applications. Examples will be in Rust but the lesson applies to many languages.

This is part 3 of the Wasm in the Wild series. Each post can be read in isolation. But for the best narrative from start to finish, start with part 1.

Introduction

WebAssembly sounds like it was made for the Web. For good reasons, as I explained in the previous post. For a long time, the browser wanted a general-purpose sandbox, not tied to a specific language or ecosystem. Wasm brought that. But Wasm in the cloud is also growing fast.

Why do we need a sandbox like Wasm on the server? Obviously, isolating different applications from each other can be a use case. I will show you how Wasm is particularly useful for that in a moment. But this is not all.

Wasm is also good for easy and portable deployment without the Docker overhead. In fact, it’s so good at it that Docker, Inc. now allows running Wasm modules side-by-side with traditional Docker containers. Code examples for that follow in this article, too.

The article is structured in the following sections.

- Alternatives before Wasm

- Pure Wasm on the Server

- WASI on the Server

- Wasm frameworks on the Server

- Docker integration of Wasm

- Conclusion

Wasm in the Wild map with route of today.


The start of today’s journey.


Alternatives before Wasm

Quick refresher. The purest form of running code on your CPU would be to run it all in privileged mode with full system access. That’s how it used to be. But eventually, humanity decided it would be better to have an operating system in place that isolates different processes from each other. Only a part of the OS should run with full system access, which we nowadays call the kernel, while all other code runs in a restricted environment, the user space.

While the reason for sandboxing like this is to restrict access, usually the more interesting engineering work is to put the right API in place to overcome restrictions. We want to put a roadblock in place to control who passes the road, not entirely make it impassable.

With the split between user space and kernel, system calls are used to kindly ask the kernel to do what we cannot do inside userspace. How system calls work is defined on a hardware level on the CPU. What each system call does is handled by the operating system. POSIX defines a standard of system calls for portability between compatible OSs. This makes file manipulation through open, read, write, and close portable across Linux and macOS, for example. WinAPI defines them differently, making Windows compatible with Windows only. Luckily, we have standard libraries hiding the actual system calls and their differences between operating systems from us.

So far so good, we have isolated processes and we have an API for accessing what’s outside the sandbox. But the age of a computer system can be measured by the number of layers it has, similar to counting the rings in a tree trunk.

The next layer would be full VMs, grouping multiple processes into a shared sandbox that’s isolated from other VMs on the same physical machine. It gives a full OS to each sandbox, including kernel and user space.

Another layer is created by containers, like Docker for a POSIX-compatible sandbox. Compared to VMs, containers can share the OS kernel with the host. This can reduce startup time and memory overhead substantially. As an example, an empty Debian Docker image is only around 50 MB and starts in less than a second. Often a negligible overhead compared to the applications running inside.

The Docker sandbox restricts containers to only access the private filesystem and network by default. Developers can explicitly set up permissions to break these restrictions when starting a container. Tools like docker compose help automate this configuration step.

A Docker-based setup is pretty good for long-running applications that need a full POSIX interface. But for finer granularity, it is still too heavy. For cloud computing paradigms closer to Function-as-a-Service, a container per function call is usually not viable.

For an extra layer of isolation with finer granularity, a Wasm runtime is a great solution. Starting it usually has a sub-millisecond overhead. Spawning a new process would be slower than starting a Wasm runtime inside the existing process.

Wasm modules are also compact in size by design, since they must be fast to download even over a bad cellular network connection. The code for a FaaS module would likely be less than 100 kB if optimized properly.

But we can go beyond FaaS with the isolation granularity of Wasm. Why not run every endpoint invocation of your public API in a separate Wasm runtime? You would keep your web server with session management but hand off all business logic and computations to run as Wasm. This approach has a couple of benefits.

First, you can easily limit the compute time and memory consumption for the request, for a basic DoS mitigation. Second, you can hot-swap the Wasm code for an updated endpoint with zero downtime.

A third benefit is complete memory isolation for each request. Even if you write buggy code and have something like a memory buffer over-read, the request cannot access secret data from other requests or even the host.

You could achieve the same architecture with a separate process per endpoint. But then you have to deal with the overhead of starting a process and inter-process communication. In the Wasm approach, multiple Wasm runtimes can share the same host process. It’s much easier to share something like a pool of DB connections in this way.
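To make the first benefit a bit more tangible, here is a toy sketch of a compute budget per request. Runtimes such as wasmtime offer a comparable "fuel" mechanism, but nothing below uses a real runtime API. The `Outcome` type and the `run_with_fuel` function are made up for illustration, and I charge one unit of fuel per loop step, whereas a real runtime would meter the executed Wasm instructions.

```rust
/// Why a request stopped running. Hypothetical type for this sketch.
#[derive(Debug, PartialEq)]
enum Outcome {
    Finished(u64),
    OutOfFuel,
}

/// Toy "request handler" that sums 1..=n and pays one unit of fuel per step.
/// Assumption: a real Wasm runtime would charge per executed instruction.
fn run_with_fuel(n: u64, mut fuel: u64) -> Outcome {
    let mut sum = 0u64;
    for i in 1..=n {
        if fuel == 0 {
            // The host aborts the guest here, the server itself keeps running.
            return Outcome::OutOfFuel;
        }
        fuel -= 1;
        sum += i;
    }
    Outcome::Finished(sum)
}

fn main() {
    // A well-behaved request finishes within its budget.
    println!("{:?}", run_with_fuel(10, 1_000)); // Finished(55)
    // A runaway request is cut off instead of hogging the server.
    println!("{:?}", run_with_fuel(1_000_000, 1_000)); // OutOfFuel
}
```

The point is only that the host decides the budget and the guest has no way to opt out of it.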

But with Wasm, we have a blazing-fast sandbox that can run in the same process. It can be a powerful tool to have in your repertoire as a developer in 2024.

Ready to walk up that hill?


Next, I will show 4 ways of running Rust code in a Wasm sandbox on the backend, starting with the lowest level and adding one layer at a time.

- Run it as pure Wasm.

- Run it through the Wasm System Interface (WASI).

- Run it through one of many Wasm backend frameworks.

- Run it as a Docker container.

Pure Wasm on the Server

The purest form of running Rust in a Wasm sandbox is to compile it for wasm32-unknown-unknown, which makes no assumptions on the runtime. Here is a complete example.

# Cargo.toml

[package]

name = "is-thirteen"

version = "0.1.0"

edition = "2021"

[lib]

crate-type = ["cdylib"]

[dependencies]

// src/lib.rs

#[no_mangle]

pub fn is_thirteen(num: u32) -> bool {

num == 13

}

As you can see, the example code is extremely simple. We just take a number as input and return a boolean value whether it is equal to the number 13 or not.

Cargo can compile this into a portable and runtime-agnostic Wasm module.

$ cargo build --release --target wasm32-unknown-unknown

Now, using wasmtime as the runtime in this example, we can execute this module.

$ wasmtime run is_thirteen.wasm --invoke is_thirteen 1

0

$ wasmtime run is_thirteen.wasm --invoke is_thirteen 12

0

$ wasmtime run is_thirteen.wasm --invoke is_thirteen 13

1

$ wasmtime run is_thirteen.wasm --invoke is_thirteen 14

0

Now, to quickly demonstrate one of the benefits of sandboxing, we can limit the memory of the Wasm module to 2 MB like so.

$ wasmtime run -W max-memory-size=2000000 is_thirteen.wasm --invoke is_thirteen 13
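What such a limit means in practice can be sketched with a bit of page arithmetic. This is a toy model, not wasmtime's implementation: the `LimitedMemory` type is hypothetical, and I assume the module starts with 16 pages of 64 KiB (which is what this compiled module declares) and that growing beyond the limit simply fails.

```rust
const PAGE_SIZE: u64 = 65_536; // Wasm pages are 64 KiB

/// Toy linear memory with a byte limit. Hypothetical type for illustration.
struct LimitedMemory {
    pages: u64,
    max_bytes: u64,
}

impl LimitedMemory {
    /// Try to grow by `delta` pages. Returns the previous size in pages on
    /// success and None if the limit would be exceeded.
    fn grow(&mut self, delta: u64) -> Option<u64> {
        let new_pages = self.pages.checked_add(delta)?;
        if new_pages.checked_mul(PAGE_SIZE)? > self.max_bytes {
            return None;
        }
        let old = self.pages;
        self.pages = new_pages;
        Some(old)
    }
}

fn main() {
    // Assumed starting point: 16 pages (1 MiB), limit of 2,000,000 bytes.
    let mut mem = LimitedMemory { pages: 16, max_bytes: 2_000_000 };
    println!("{:?}", mem.grow(14)); // Some(16): 30 pages = 1,966,080 bytes
    println!("{:?}", mem.grow(1)); // None: 31 pages = 2,031,616 bytes
}
```

Our tiny function never asks for more memory, so the limit changes nothing for it. A guest that allocates a lot would see its allocation fail rather than take down the host.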

I hope this makes sense so far, at least on a high level. But there is already a bunch of magic happening that I want to explain.

As mentioned in the intro, sandboxes restrict access but also have to define a way to overcome restrictions and communicate with what’s outside the box. In this example, the input comes from outside the sandbox and the result is returned to the outside world.

When running the example, the wasmtime CLI made a few assumptions for us. It took the argument we gave it on the command line, which is a string, and converted it to a number. Then it pushed that on the virtual Wasm stack before calling is_thirteen. After the function finished, it popped the return value, which we defined to be a bool in Rust. This is then represented as 0 or 1 in a string output we see on the command line.

These steps are not part of the Wasm standard. It’s a choice of the CLI on how to make running pure Wasm modules somewhat useful. To understand better what is really going on under the hood, we can inspect the Wasm module with wasm2wat from The WebAssembly Binary Toolkit.

$ wasm2wat ./target/wasm32-unknown-unknown/release/is_thirteen.wasm

(module

(type (;0;) (func (param i32) (result i32)))

(func $is_thirteen (type 0) (param i32) (result i32)

local.get 0

i32.const 13

i32.eq)

(table (;0;) 1 1 funcref)

(memory (;0;) 16)

(global $__stack_pointer (mut i32) (i32.const 1048576))

(global (;1;) i32 (i32.const 1048576))

(global (;2;) i32 (i32.const 1048576))

(export "memory" (memory 0))

(export "is_thirteen" (func $is_thirteen))

(export "__data_end" (global 1))

(export "__heap_base" (global 2)))

This is the Wasm code produced by cargo, without knowing anything about the runtime we are going to use to run it.

The module is only 15 lines long. Four of them are the actual function, which reads parameter 0 and compares it to the constant 13. The return is implicit by keeping the result on the stack.

(type (;0;) (func (param i32) (result i32)))

(func $is_thirteen (type 0) (param i32) (result i32)

local.get 0

i32.const 13

i32.eq)

Before the function, the type is defined as a function that takes one parameter and returns a result. Since this is the first definition in the module, it is implicitly type 0 for references further down in the code. For convenience, the wasm2wat command also puts that into a comment ((;0;)). (Yes, WebAssembly text format inline comments start with (; and end with ;)).
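If the stack-based evaluation of the function body feels abstract, here is a minimal sketch of an interpreter for exactly these three instructions. The `Instr` enum and the `eval` function are my own invention for this illustration. A real runtime decodes the binary format and usually compiles it to native code instead of walking a list like this.

```rust
/// The three instructions used by is_thirteen. Hypothetical enum for this sketch.
enum Instr {
    LocalGet(usize),
    I32Const(i32),
    I32Eq,
}

/// Evaluate a function body on a value stack and return what is left on top.
/// Returns None on a malformed body (missing parameter or empty stack).
fn eval(body: &[Instr], params: &[i32]) -> Option<i32> {
    let mut stack: Vec<i32> = Vec::new();
    for instr in body {
        match instr {
            Instr::LocalGet(idx) => stack.push(*params.get(*idx)?),
            Instr::I32Const(value) => stack.push(*value),
            Instr::I32Eq => {
                let rhs = stack.pop()?;
                let lhs = stack.pop()?;
                stack.push((lhs == rhs) as i32); // comparisons yield 1 or 0
            }
        }
    }
    stack.pop() // the implicit return value
}

fn main() {
    let is_thirteen = [Instr::LocalGet(0), Instr::I32Const(13), Instr::I32Eq];
    println!("{:?}", eval(&is_thirteen, &[13])); // Some(1)
    println!("{:?}", eval(&is_thirteen, &[12])); // Some(0)
}
```

Note how the result is an i32 holding 1 or 0, which matches the output we saw from the CLI.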

Did you notice how i32 is used in the binary despite the u32 and the bool in the code? Well, Wasm doesn’t have a native u32 or bool type. So on the binary level, it uses i32. Any u32 value can be encoded as an i32 and thanks to two’s complement encoding, arithmetic operations are equivalent. That’s good enough if the caller knows how to encode values. But does wasmtime know it? Let’s try.

$ wasmtime run is_thirteen.wasm --invoke is_thirteen 2147483647

0

$ wasmtime run is_thirteen.wasm --invoke is_thirteen 2147483648

Error: failed to run main module `is_thirteen.wasm`

Caused by:

number too large to fit in target type

Oh, oh. If we try to pass a number higher than i32::MAX = 2147483647, wasmtime fails to encode it, even though it would fit in a u32. This makes sense, given the Wasm module defines the parameter as i32 and there is no way for wasmtime to know that our original code wanted a u32. That shows how the glue between a portable app and a runtime is important. The CLI can only do so much.
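A caller that knows the original Rust signature can do the encoding itself. The following sketch uses only the Rust standard library. The two helper functions are hypothetical names I picked to contrast the two ways of parsing the argument. They say nothing about how wasmtime is implemented internally.

```rust
/// Parse a decimal argument the way a signature-unaware caller might: as i32.
fn parse_as_i32(arg: &str) -> Option<i32> {
    arg.parse::<i32>().ok()
}

/// Parse as u32 first, then reinterpret the same 32 bits as an i32.
fn parse_u32_as_i32(arg: &str) -> Option<i32> {
    arg.parse::<u32>().ok().map(|n| n as i32)
}

fn main() {
    let arg = "2147483648"; // i32::MAX + 1, still a valid u32
    println!("{:?}", parse_as_i32(arg)); // None
    println!("{:?}", parse_u32_as_i32(arg)); // Some(-2147483648)

    // The bits survive the round trip, so the guest sees the intended u32.
    let encoded = parse_u32_as_i32(arg).unwrap();
    println!("{}", encoded as u32); // 2147483648
}
```

This kind of knowledge about the intended types is exactly what a binding layer between host and guest has to carry.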

What else is exposed by the module for the runtime?

(export "memory" (memory 0))

(export "is_thirteen" (func $is_thirteen))

(export "__data_end" (global 1))

(export "__heap_base" (global 2))

The module has 4 export entries. One of them is our function we just looked at, exported with the name “is_thirteen”. Before that is a memory region named “memory”, which internally references memory number 0. This was previously defined to hold 16 pages of 64 KiB each, hence 1 MiB in total.

(memory (;0;) 16)

This tells the runtime that the module starts out with 1 MiB of memory, so any code inside will only access memory addresses from 0 to 1 MiB unless the memory is grown later. The runtime can allocate it accordingly.

The last two exports are pointers into the memory called __data_end and __heap_base. This helps the runtime understand how the module organizes its memory internally.

(global (;1;) i32 (i32.const 1048576))

(global (;2;) i32 (i32.const 1048576))

Both are set to 1048576, exactly at 1 MiB. __data_end marks the end of the module’s static data. If we had constant strings in our code, they would show up in the data section and push this address further up. At the exact same address 1048576, the heap starts, meaning everything afterward is dynamically managed memory. Since the entire memory region is initially only 1 MiB, we can conclude the module starts without a heap in this case.

What about the space below that address? Rust places the stack there, which is why the $__stack_pointer global also starts at 1048576 and grows downwards from it. This is not the stack of the Wasm virtual stack machine as I explained in Fundamentals of WebAssembly but the stack where your programming language can put data temporarily, without a dynamic memory allocator. But anyway, this example never touches that stack since the code is simple enough.
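The numbers from the module can be double-checked with a few lines of arithmetic. This is only a sketch. The `initial_heap_bytes` helper is a name I made up, and the values are copied from the wasm2wat output.

```rust
const PAGE_SIZE: u64 = 65_536; // one Wasm page is 64 KiB

/// Bytes between the heap base and the end of the initially declared memory.
fn initial_heap_bytes(pages: u64, heap_base: u64) -> u64 {
    (pages * PAGE_SIZE).saturating_sub(heap_base)
}

fn main() {
    let pages = 16; // from (memory (;0;) 16)
    let heap_base = 1_048_576; // from the __heap_base global
    println!("memory size: {} bytes", pages * PAGE_SIZE); // 1048576
    println!("initial heap: {} bytes", initial_heap_bytes(pages, heap_base)); // 0
}
```

With one more page, the same calculation would give 65536 bytes of heap.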

Okay, the module exposes its internal memory details. But why? You might have guessed it, it has to do with sandboxing and communicating across its boundaries.

First, defining the memory region allows the runtime to map Wasm addresses to host virtual addresses efficiently. In Wasm, if a pointer has a value of 8, for example, this is relative to the Wasm module. Another module in the same runtime could also have an address of 8 that references something completely different. The runtime has to translate the Wasm addresses of a module to valid native pointers. Ideally, without allowing sandbox escapes.

So for our sample code, the runtime allocates a 1 MiB memory region for the memory of the Wasm module. Let’s say that region has a native address of 0xffff_0000_0000_0000. An interpreter would now add 0xffff_0000_0000_0000 to each memory access to do the address mapping.

A more efficient runtime can avoid doing this work on each memory access. It can translate all addresses during the compilation of the Wasm code to native code. Hence, this addition happens during module loading/compilation, not during execution.
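Here is a minimal sketch of the naive, interpreter-style mapping, including the bounds check that prevents sandbox escapes. The `LinearMemory` type is hypothetical. Production runtimes typically use cheaper tricks than an explicit check per access, so take this as a model of the idea only.

```rust
/// One module's linear memory, owned by the host. Hypothetical type for this sketch.
struct LinearMemory {
    bytes: Vec<u8>,
}

impl LinearMemory {
    fn new(size: usize) -> Self {
        LinearMemory { bytes: vec![0; size] }
    }

    /// Translate a guest address into a host byte, refusing anything out of bounds.
    fn load_u8(&self, guest_addr: u32) -> Option<u8> {
        self.bytes.get(guest_addr as usize).copied()
    }

    /// Write a byte at a guest address, or return None if it is out of bounds.
    fn store_u8(&mut self, guest_addr: u32, value: u8) -> Option<()> {
        *self.bytes.get_mut(guest_addr as usize)? = value;
        Some(())
    }
}

fn main() {
    let mut module_a = LinearMemory::new(1 << 20);
    let mut module_b = LinearMemory::new(1 << 20);
    module_a.store_u8(8, 0xAA).expect("address 8 is in bounds");
    module_b.store_u8(8, 0xBB).expect("address 8 is in bounds");

    // Same guest address, different host memory.
    println!("{:?} {:?}", module_a.load_u8(8), module_b.load_u8(8)); // Some(170) Some(187)
    // An address past the end is refused instead of reading host data.
    println!("{:?}", module_a.load_u8(1 << 20)); // None
}
```

In a real runtime, the None case would become a trap that aborts the guest.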

For the data section, which is a static size during execution, this works well enough. The stack and the heap, however, can usually grow dynamically. Ideally, the runtime should start with a small allocation and be ready to dynamically increase it as necessary. That’s one of the reasons why it can be good for the runtime to know which addresses are considered to be on the heap by the program.

You don’t have to understand these intricate details of memory management to use Wasm. But they are important to understand the architecture and design of Wasm on a deeper level. Other runtimes, like Java, decided to put memory management on the runtime and dictate the usage of a garbage collector. Wasm tries to dictate as little as possible and allow maximum flexibility in how the host and the guest want to split the work of memory management. That’s why it works well with Rust and C but can also be used with Go and C#.

So much for this pure Wasm situation. It has very tight sandboxing. So much so that it’s hard to show a more useful example of it. If we wanted to do something interesting, like even just printing something to the console, we would need to switch gears and invoke wasmtime as a library from code, so that we can define imports like we did in the JS example. You might also recall from the previous post how much work was necessary to pass a string from Wasm to JS without wasm-bindgen. It would be the same work here.

But instantiating a Wasm runtime inside your native code is for another day. For now, I want to finish the overview of how to compile Rust to Wasm and run it as a standalone Wasm. Let’s add another layer, it will make things easier.

Let’s leave this barren hill.


WASI on the Server

The most vanilla layer of abstraction on top of pure Wasm, for the backend, would be the Wasm System Interface (WASI). Like POSIX system calls, it is an API to give the program access to system resources. Using the wasm32-wasi target for Rust (called wasm32-wasip1 in newer toolchains), the standard library will use this interface instead of system calls. For other programming languages, you want to look at the standalone Wasm option for emscripten.

Through WASI, you may access system resources like files or the network. Like Wasm, WASI is a standard developed by the W3C. Wasm had its 1.0 release a while ago and all major browsers support it. WASI builds on top of it and naturally lags behind a bit regarding stability.

The first WASI standard preview was floating around for a couple of years and has been implemented by multiple Wasm runtimes. Today, what came out of it is known as WASI 0.1. Despite the number indicating instability, it works well enough that it is used in production.

There is also WASI 0.2, which I believe is supposed to replace WASI 0.1. But it was only released in January 2024, so I wouldn’t quite call WASI 0.1 deprecated, yet.

With WASI, we can create a more interesting example. Let’s create a Wasm component that takes a message and a signature as input from the user and then validates the signature using a certificate stored in a file.

# Cargo.toml

[package]

name = "wasm-signature-checker"

version = "0.1.0"

edition = "2021"

[dependencies]

ed25519-compact = "2"

hex = "0.4"

anyhow = "1"

// main.rs

use ed25519_compact::{PublicKey, Signature};

fn main() -> anyhow::Result<()> {

println!("> Wasm signature verifier, featuring WASI.");

println!("> Please enter the message text.");

let message = read_line()?;

println!("> Please enter the signature in hex encoding.");

let hex_input = read_line()?;

let binary_input = hex::decode(&hex_input)?;

let signature = Signature::from_slice(&binary_input)?;

println!("> Please enter the path to the certificate.");

let path = read_line()?;

let pk_vec = std::fs::read(&path)?;

let pk_array: [u8; 32] = pk_vec.try_into().unwrap();

let pk = PublicKey::new(pk_array);

println!(">");

println!("> Verifying ");

println!("> {message}");

println!("> {hex_input}");

println!("> {path}");

match pk.verify(message, &signature) {

Ok(()) => println!("valid"),

Err(_) => println!("invalid"),

}

Ok(())

}

/// Read a line from stdin and remove trailing whitespace, such as \n

fn read_line() -> Result<String, anyhow::Error> {

let mut line = String::new();

std::io::stdin().read_line(&mut line)?;

line.truncate(line.trim_end().len());

Ok(line)

}

# set up certificate in a new directory

mkdir guest_dir

echo "D0A6947EF7BDA615D38B43A896AE6EA6FDE87F98729C18B6D9AB447051B0E7A0" | xxd -r -p > ./guest_dir/my_pk.cert

Note how I’m using the Rust standard library to read from standard input and read a file from a path. Also, println! prints to the standard output. None of this would work in pure Wasm. But when I compile it for --target wasm32-wasi, it all gets mapped to the WASI interface.

cargo build --release --target wasm32-wasi

cp ./target/wasm32-wasi/release/wasm-signature-checker.wasm ./

Finally, we can run the code with wasmtime again, this time interactively.

$ wasmtime wasm-signature-checker.wasm --dir ./guest_dir::/

> Wasm signature verifier, featuring WASI.

> Please enter the message text.

Hello WASI!

> Please enter the signature in hex encoding.

96D7065598D79299AD539B1299E83196EA19A2660342ED480FD1500E813E636DCA4112D3A49B783B207219B432E7F0882D8F3840D6D7591ECA271CB028A3D608

> Please enter the path to the certificate.

my_pk.cert

>

> Verifying

> Hello WASI!

> 96D7065598D79299AD539B1299E83196EA19A2660342ED480FD1500E813E636DCA4112D3A49B783B207219B432E7F0882D8F3840D6D7591ECA271CB028A3D608

> my_pk.cert

valid

$

Probably the most important part here is how we have to give explicit permission to read the file ./guest_dir/my_pk.cert. By adding --dir ./guest_dir::/ as an argument, I mapped ./guest_dir to / inside the filesystem the Wasm runtime exposes. Anything outside the directory is inaccessible, as I can demonstrate by trying to access for example ../secret_key.

# Demonstrate sandboxing in WASI

# ...

$ wasmtime wasm-signature-checker.wasm --dir ./guest_dir::/

> Please enter the path to the certificate.

../secret_key

Error: Operation not permitted (os error 63)

As you can see, the sandboxing model of WASI is similar to Docker. By default, no access. But all the tools are available to quickly give access to specific parts of the system.
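To get a feeling for what the runtime has to do here, consider this sketch of mapping a guest path onto the one host directory it was granted. The `resolve_in_preopen` function is hypothetical and purely lexical. It is not how wasmtime implements it, and a real implementation also has to deal with symlinks and other subtleties.

```rust
use std::path::{Component, Path, PathBuf};

/// Map a guest path onto the host directory granted to the guest.
/// Returns None if the path would escape that directory.
/// Assumption: purely lexical resolution, no symlink handling.
fn resolve_in_preopen(host_root: &Path, guest_path: &str) -> Option<PathBuf> {
    let mut resolved = host_root.to_path_buf();
    let mut depth = 0usize; // how far below host_root we currently are
    for component in Path::new(guest_path).components() {
        match component {
            Component::Normal(name) => {
                resolved.push(name);
                depth += 1;
            }
            // The guest's "/" and "." both stay inside the granted directory.
            Component::CurDir | Component::RootDir => {}
            Component::ParentDir => {
                if depth == 0 {
                    return None; // would climb out of the sandbox
                }
                resolved.pop();
                depth -= 1;
            }
            Component::Prefix(_) => return None, // e.g. Windows drive letters
        }
    }
    Some(resolved)
}

fn main() {
    let root = Path::new("./guest_dir");
    println!("{:?}", resolve_in_preopen(root, "my_pk.cert")); // Some("./guest_dir/my_pk.cert")
    println!("{:?}", resolve_in_preopen(root, "../secret_key")); // None
}
```

The important design choice is that the check lives in the host, so the guest cannot bypass it, no matter how buggy or malicious its code is.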

If instead I run the example as a native binary and not as a Wasm module, the program has access to anything my current user has access to…

# Demonstrate non-sandboxing in native

$ cargo run

# ...

> Please enter the path to the certificate.

/home/jakmeier/.ssh/id_ed25519.pub

thread 'main' panicked at src/main.rs:16:48:

called `Result::unwrap()` on an `Err` value: [ REDACTED ]

In the example above, running this on my dev machine, my real ed25519 public key was printed out. It panicked because the format was unexpected but it did read the file content and printed it as part of the panic message.

It wouldn’t work with my private key, since I have the usual file permission setup where only the root can read private keys. But here we are again, relying on specific configurations on the OS level for isolation of resources. Running it in a Wasm sandbox seems more foolproof to me.

Before moving on from WASI, let me briefly mention the component model. It’s part of WASI 0.2 and allows the composition of Wasm modules, even across the programming language boundary. Traditionally, we would call this concept linking of shared libraries in native binaries, or maybe dynamic class loading in the JVM or CLR.

The sandboxing properties of it are quite interesting. Even components have strong isolation from each other. Communication also works over imports and exports, plus there is an interface definition layer to define how components can be combined.

The component model is really cool. So cool, in fact, that I want to show it off in a dedicated post. Right now, we need to move on if we want a chance at reaching the planned destination before it gets dark.

Somehow this house we pass feels right for the next section.


Wasm frameworks on the Server

The list of Wasm runtimes is longer than I can keep track of. And as a layer on top of the runtimes, there are Wasm platforms, of which I think I have also lost the overview. But the platforms I have tested mostly follow a similar pattern.

The Wasmer runtime is an example of a well-documented and widely used project that also comes with a platform built on top. Their platform is called “Wasmer Edge” and has been available as a public Beta version since October 2023. (General Wasmer Edge Announcement, Announcement of Public Beta)

The idea is that you can build Wasm modules and deploy them directly to a batteries-included, scalable cloud. No infrastructure for you to maintain, just a bunch of Wasm modules accessible through your domain.

It’s a bit like FaaS again but promises to be cheaper due to tiny resource requirements for Wasm runtimes. That’s fundamentally what gives fuel to this direct attack on the established model of the hyperscalers today.

Wasm platforms also promise to be more flexible than FaaS platforms like AWS Lambda since the platform has WASI support. In the case of Wasmer Edge, they even defined a superset of WASI called WASIX which gives full POSIX compatibility. Essentially, WASIX is for the impatient who want to use things like multi-threading, subprocesses, full networking support, and other cool features without waiting for them to be standardized in a formal WASI preview. Even setjmp and longjmp work in WASIX, which is kind of crazy.

WasmEdge, despite its very similar name, is a completely separate platform with a similar approach. They also have their own Wasm runtime and implement WASI. But without the X, so you get access to most things you need but not quite the full POSIX compliance. But using their SDK, you can easily access resources on a higher level, including networking on the HTTP level, database driver bindings, and of course AI integrations.

There is also Spin by Fermyon and wasmCloud by wasmCloud LLC. These are two further platforms that will cheaply host your Wasm modules. I lump these two together because both use wasmtime, the runtime implemented by the Bytecode Alliance nonprofit. This makes them WASI-compliant. And again, they have SDKs for all the things I mentioned for WasmEdge.

As you can see, the namespace of “Wasm” plus something about cloud or edge is already getting scarce. Probably, we have enough of these projects already but if you must create another one, please don’t call it “Cloud Wasm”.

Seriously though, you don’t have to create another one of these. As far as I can see, all those projects have their code on GitHub under open-source licenses. You can even self-host these platforms, so no danger of vendor lock-in. Just use their code and contribute potential changes to the upstream.

But I have a feeling most of you won’t abandon the existing infrastructure and port everything to Wasm modules. Luckily, there is a way to integrate Wasm modules natively into Docker and thus adopt the technology incrementally.

Finally a paved path to follow!


Docker integration of Wasm

To round off the Wasm on the backend part, let’s talk about Wasm and Docker.

Of course, you could always put a Wasm runtime inside a Docker container, copy a Wasm module inside, and execute it like that. I wouldn’t blame you, it gives you the benefit of being integrated with existing tooling around Docker containers.

But in this way, you give up on the fast startup times of Wasm and the small memory footprint, and even portability suffers (Docker images differ by CPU architecture).

A better way to integrate Wasm modules inside Docker is with “Wasm workloads” for Docker Desktop as they are currently called in the official documentation.

You can define a Wasm workload in a Dockerfile by starting with a completely empty image and only copying in the Wasm to execute.

FROM scratch

COPY --from=build /build/hello_world.wasm /hello_world.wasm

ENTRYPOINT [ "/hello_world.wasm" ]

Like any other Docker image, you can push the result to a Docker image repository and run it with the usual commands. But when you run it, you have to specify which runtime you want. All the runtimes I mentioned previously are available, and more. Check out the full list here.

For example, to pick wasmtime:

docker run \

--runtime=io.containerd.wasmtime.v1 \

--platform=wasi/wasm \

secondstate/rust-example-hello

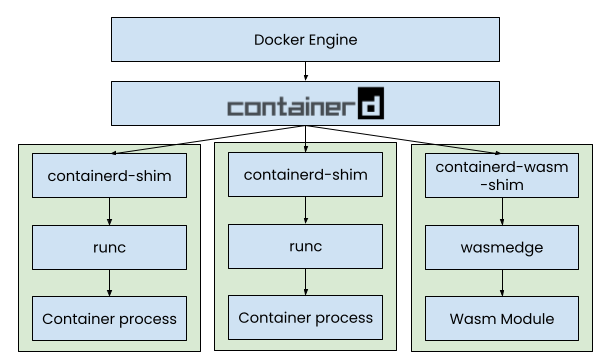

In the background, containerd (managed through Docker in this example) will

spawn a special container. Inside the container, you will find

containerd-wasm-shim instead of the normal containerd-shim acting as a

bridge between the container and containerd. This shim then talks directly to

wasmtime, which executes the Wasm module specified in the dockerfile. Here is

the diagram from the official announcement.

Source: Introducing the Docker+Wasm Technical Preview, Docker Inc

Source: Introducing the Docker+Wasm Technical Preview, Docker Inc

That’s pretty cool, right? We get the familiar Docker tooling and the well-tested sandboxing properties of it. But your applications can be really small, easily below 1 MB for simple applications. And startup times can be a few milliseconds.

But be aware that the Wasm workloads in Docker are still Beta and are not recommended for use in production. I really hope this changes soon, since I believe this would really accelerate Wasm adoption.

Look, a clear view on Peak WebAssembly!


Conclusion

That’s all for now. What do you think? Do you see the benefit of running your code in a Wasm sandbox? Will it eliminate another class of security bugs for future software, comparable to how Rust eliminates memory corruption bugs? Or is it just a niche tool and a bunch of hype? I’m curious to read where all of you stand on this, please let me know on the Reddit discussion!

Blockchain sandbox next, with a skipped-for-now mystery to the right.
