PowerEdge Server GPU Matrix

Uploaded by phongnk

Available Formats

Poweredge Server Gpu Matrix

Poweredge Server Gpu Matrix

Uploaded by

phongnkOriginal Title

Copyright

Available Formats

Share this document

Did you find this document useful?

Is this content inappropriate?

Copyright:

Available Formats

Poweredge Server Gpu Matrix

Poweredge Server Gpu Matrix

Uploaded by

phongnkCopyright:

Available Formats

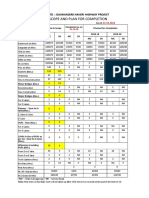

GPUs on supported platforms

PLATFORM | A100 40GB SXM4 (NVLink) | A100 80GB SXM4 (NVLink) | A100 80GB PCIe | A40 | A30 | A16 | A10 | A2 | M10 | T4 | AMD MI100 | AMD MI210

XE8545 Shipping (4)¹ Shipping (4)¹

R750xa Shipping (4)³ Shipping (4)³ Shipping (4)³ Shipping (4)³ Shipping (4)³ Shipping (6)³ Shipping (2)³ Shipping (6)³ Shipping (4)³

R750 Shipping (2) Shipping (2) Shipping (2) Shipping (2) Shipping (3) Shipping (6) Shipping (2) Shipping (6)

R650 Shipping (3) Shipping (3)

C6520 Shipping (1) Shipping (1)

R7525 - Milan Shipping (3) Shipping (3) Shipping (3) Shipping (3) Shipping (3) Shipping (6) Shipping (2) Shipping (6) Shipping (3) Shipping (3)

R7525 - Rome Shipping (3) Shipping (3) Shipping (3) Shipping (3) Shipping (3) Shipping (6) Shipping (2) Shipping (6) Shipping (3) Shipping (3)

R7515 - Milan Shipping (1) Shipping (1) Shipping (4) Shipping (4) Shipping (1)

R7515 - Rome Shipping (1) Shipping (1) Shipping (4) Shipping (4) Shipping (1)

R6525 - Rome & Milan Shipping (3) Shipping (3)

R6515 - Rome & Milan Shipping (2) Shipping (1)

C6525 - Rome & Milan Shipping (1) Shipping (1)

XR12 Shipping (2) Shipping (2) Shipping (2) Shipping (2) Shipping (2)

XR11 Shipping (2) Shipping (2)

DSS8440 Shipping (4/8/10)¹ Shipping (4/8/10)¹ Shipping (4/8/10)¹ Shipping (8/12/16)¹

R940XA Shipping (4)

R840 Shipping (2)

R740/XD Shipping (3) Shipping (3) Shipping (3) Shipping (3) Shipping (3) Shipping (6) Shipping (2) Shipping (6**)

R640 Shipping (3) Shipping (3)

T640 Shipping (2)

T550 Shipping (2) Shipping (2) Shipping (5) Shipping (5)

XR2 Shipping (1)

XE2420 Shipping (2) Shipping (2) Shipping (4)

1 – XE8545 and DSS8440 are set configurations

2 – Subject to change

3 – R750XA requires a minimum of 2 GPUs to be installed at the factory

(qty) – Maximum number of GPUs allowed; the maximum might differ between configurations of the same platform
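As an illustration of the cell notation described above, the following is a minimal Python sketch that pulls the status and quantity out of a cell such as `Shipping (4/8/10)`. The helper name `parse_cell` and the returned keys are hypothetical, and the sketch assumes that slash-separated values list the set configurations mentioned in note 1; the sheet itself does not spell this out.

```python
import re

# Hypothetical pattern for one matrix cell: a status word, then a
# parenthesised quantity such as "(4)" or "(4/8/10)". Trailing asterisks
# inside the parentheses (e.g. "(6**)") are tolerated and ignored.
CELL = re.compile(
    r"^\s*(?P<status>[A-Za-z ]+?)\s*\(\s*(?P<qty>\d+(?:\s*/\s*\d+)*)[\s*]*\)"
)

def parse_cell(cell):
    """Return status, listed quantities, and the largest quantity, or None."""
    match = CELL.match(cell)
    if match is None:
        return None
    # Assumption: slash-separated numbers are alternative GPU counts.
    counts = [int(n) for n in match.group("qty").split("/")]
    return {
        "status": match.group("status"),
        "allowed": counts,
        "max_gpus": max(counts),
    }

if __name__ == "__main__":
    for text in ("Shipping (4/8/10)", "Shipping(2)", "Shipping (6**)"):
        print(text, "->", parse_cell(text))
```

The sketch does not map cells to GPU columns, because the flattened rows above do not preserve which column each value belongs to.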

Version: August 2022

© 2022 Dell Technologies Inc. or its subsidiaries. All Rights Reserved.

Dell, EMC, and other trademarks are trademarks of Dell Inc. or its subsidiaries.

Other trademarks may be trademarks of their respective owners.

GPUs on supported platforms

| Brand | Model | GPU Memory | Memory ECC | Memory Bandwidth | Max Power Consumption | Graphic Bus/System Interface | Interconnect Bandwidth | Slot Width | Height/Length | Auxiliary Cable | Workload¹ |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| AMD | MI210 | 64 GB HBM2e | Y | 1638 GB/sec | 300W | PCIe Gen4x16/ Infinity Fabric Link bridge | 64 GB/sec (PCIe 4.0) | DW | FHFL | CPU 8 pin | HPC/Machine learning training |
| AMD | MI100 | 32 GB HBM2 | Y | 1228 GB/sec | 300W | PCIe Gen4x16 | 64 GB/sec (PCIe 4.0) | DW | FHFL | PCIe 8 pin | HPC/Machine learning training |
| Nvidia | A100 | 80 GB HBM2 | Y | 2039 GB/sec | 500W | NVIDIA NVLink | 600 GB/sec (3rd Gen NVLink) | N/A | N/A | N/A | HPC/AI/Database Analytics |
| Nvidia | A100 | 40 GB HBM2 | Y | 1555 GB/sec | 400W | NVIDIA NVLink | 600 GB/sec (3rd Gen NVLink) | N/A | N/A | N/A | HPC/AI/Database Analytics |
| Nvidia | A100 | 80 GB HBM2e | Y | 1935 GB/sec | 300W | PCIe Gen4x16/ NVLink bridge⁸ | 64 GB/sec⁵ (PCIe 4.0) | DW | FHFL | CPU 8 pin | HPC/AI/Database Analytics |
| Nvidia | A30 | 24 GB HBM2 | Y | 933 GB/sec | 165W | PCIe Gen4x16/ NVLink bridge⁸ | 64 GB/sec⁵ (PCIe 4.0) | DW | FHFL | CPU 8 pin | Mainstream AI |
| Nvidia | A40 | 48 GB GDDR6 | Y | 696 GB/sec | 300W | PCIe Gen4x16/ NVLink bridge⁸ | 64 GB/sec⁵ (PCIe 4.0) | DW | FHFL | CPU 8 pin | Performance graphics/VDI |
| Nvidia | A16 | 64 GB GDDR6 | Y | 800 GB/sec | 250W | PCIe Gen4x16 | 64 GB/sec (PCIe 4.0) | DW | FHFL | CPU 8 pin | VDI |
| Nvidia | A2 | 16 GB GDDR6 | Y | 200 GB/sec | 60W | PCIe Gen4x8 | 32 GB/sec (PCIe 4.0) | SW | HHHL | N/A | Inferencing/Edge/VDI |
| Nvidia | A2 (v2) | 16 GB GDDR6 | Y | 200 GB/sec | 60W | PCIe Gen4x8 | 32 GB/sec (PCIe 4.0) | SW | HHHL | N/A | Inferencing/Edge/VDI |
| Nvidia | A2 | 16 GB GDDR6 | Y | 200 GB/sec | 60W | PCIe Gen4x8 | 32 GB/sec (PCIe 4.0) | SW | FHHL | N/A | Inferencing/Edge/VDI |
| Nvidia | A2 (v2) | 16 GB GDDR6 | Y | 200 GB/sec | 60W | PCIe Gen4x8 | 32 GB/sec (PCIe 4.0) | SW | FHHL | N/A | Inferencing/Edge/VDI |
| Nvidia | A10 | 24 GB GDDR6 | Y | 600 GB/sec | 150W | PCIe Gen4x16 | 64 GB/sec (PCIe 4.0) | SW | FHFL | PCIe 8 pin | Mainstream graphics/VDI |
| Nvidia | M10 | 32 GB GDDR5 | N | 332 GB/sec | 225W | PCIe Gen3x16 | 32 GB/sec (PCIe 3.0) | DW | FHFL | PCIe 8 pin | VDI |
| Nvidia | T4 | 16 GB GDDR6 | Y | 300 GB/sec | 70W | PCIe Gen3x16 | 32 GB/sec (PCIe 3.0) | SW | HHHL | N/A | Inferencing/Edge/VDI |
| Nvidia | T4 | 16 GB GDDR6 | Y | 300 GB/sec | 70W | PCIe Gen3x16 | 32 GB/sec (PCIe 3.0) | SW | FHHL | N/A | Inferencing/Edge/VDI |
| Nvidia | A100 | 40 GB HBM2 | Y | 1555 GB/sec | 250W | PCIe Gen4x16/ NVLink bridge⁸ | 64 GB/sec⁵ (PCIe 4.0) | DW | FHFL | CPU 8 pin | HPC/AI/Database Analytics |
| Nvidia | V100S | 32 GB HBM2 | Y | 1134 GB/sec | 250W | PCIe Gen3x16 | 32 GB/sec (PCIe 3.0) | DW | FHFL | CPU 8 pin | HPC/AI/Database Analytics |
| Nvidia | V100 | 32 GB HBM2 | Y | 900 GB/sec | 250W | PCIe Gen3x16 | 32 GB/sec (PCIe 3.0) | DW | FHFL | CPU 8 pin | HPC/AI/Database Analytics |
| Nvidia | V100 | 16 GB HBM2 | Y | 900 GB/sec | 250W | PCIe Gen3x16 | 32 GB/sec (PCIe 3.0) | DW | FHFL | CPU 8 pin | HPC/AI/Database Analytics |
| Nvidia | V100 | 32 GB HBM2 | Y | 900 GB/sec | 300W | NVIDIA NVLink | 300 GB/sec (2nd Gen NVLink) | N/A | N/A | N/A | HPC/AI/Database Analytics |
| Nvidia | V100 | 16 GB HBM2 | Y | 900 GB/sec | 300W | NVIDIA NVLink | 300 GB/sec (2nd Gen NVLink) | N/A | N/A | N/A | HPC/AI/Database Analytics |
| Nvidia | RTX6000 | 24 GB GDDR6 | Y | 624 GB/sec | 250W | PCIe Gen3x16/ NVLink bridge³ | 32 GB/sec³ (PCIe 3.0) | DW | FHFL | CPU 8 pin | VDI/Performance Graphics |
| Nvidia | RTX8000 | 48 GB GDDR6 | Y | 624 GB/sec | 250W | PCIe Gen3x16/ NVLink bridge³ | 32 GB/sec³ (PCIe 3.0) | DW | FHFL | CPU 8 pin | VDI/Performance Graphics |
| Nvidia | P100 | 16 GB HBM2 | Y | 732 GB/sec | 300W | NVIDIA NVLink | 160 GB/sec (1st Gen NVLink) | N/A | N/A | N/A | HPC/AI/Database Analytics |
| Nvidia | P100 | 16 GB HBM | Y | 732 GB/sec | 250W | PCIe Gen3x16 | 32 GB/sec (PCIe 3.0) | DW | FHFL | CPU 8 pin | HPC/AI/Database Analytics |
| Nvidia | P100 | 12 GB HBM2 | Y | 549 GB/sec | 250W | PCIe Gen3x16 | 32 GB/sec (PCIe 3.0) | DW | FHFL | CPU 8 pin | HPC/AI/Database Analytics |
| Nvidia | P40 | 24 GB DDR5 | N | 346 GB/sec | 250W | PCIe Gen3x16 | 32 GB/sec (PCIe 3.0) | DW | FHFL | CPU 8 pin | HPC/AI/Database Analytics |

1 – Suggested ideal workloads; the GPUs can also be used for other workloads

2 – Different SKUs are listed because different platforms might support different SKUs. This sheet does not call out specific platform-SKU associations

3 – Up to 100 GB/sec when the RTX NVLink bridge is used; the RTX NVLink bridge is supported only on T640

4 – Structural sparsity enabled

5 – Up to 600 GB/sec for A100, up to 200 GB/sec for A30, and up to 112.5 GB/sec for A40 when the NVLink bridge is used

6 – Peak performance numbers as shared by Nvidia, or by AMD for MI100

7 – Refer to the "Max # GPUs on supported platforms" tab for details on support for Rome vs Milan processors

8 – A100 with NVLink bridge is supported on R750XA and DSS8440; A40 with NVLink bridge is supported on R750XA, DSS8440, and T550; A30 with NVLink bridge is supported on R750XA and T550

DW - Double Wide, SW - Single Wide, FH- Full Height, FL - Full Length, HH - Half Height, HL - Half Length
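The PCIe interconnect figures in the table (64 GB/sec for Gen4 x16, 32 GB/sec for Gen4 x8 and Gen3 x16) can be reproduced with simple arithmetic. The sketch below assumes these are nominal bidirectional rates that ignore encoding overhead; that is an inference from the numbers, not something the sheet states. The function name `pcie_bandwidth_gb_s` and its parameters are hypothetical.

```python
# Per-lane transfer rates in GT/s for the two PCIe generations in the table.
GT_PER_S = {3: 8.0, 4: 16.0}

def pcie_bandwidth_gb_s(gen, lanes, bidirectional=True, encoded=False):
    """Approximate PCIe link bandwidth in GB/sec.

    gen:           PCIe generation (3 or 4)
    lanes:         link width, e.g. 8 or 16
    bidirectional: count both directions (assumed to match the sheet)
    encoded:       apply 128b/130b line-encoding overhead (Gen3 and Gen4)
    """
    rate = GT_PER_S[gen] * lanes / 8.0  # one direction, 8 bits per byte
    if encoded:
        rate *= 128.0 / 130.0
    if bidirectional:
        rate *= 2.0
    return rate

if __name__ == "__main__":
    print(pcie_bandwidth_gb_s(4, 16))  # Gen4 x16
    print(pcie_bandwidth_gb_s(4, 8))   # Gen4 x8
    print(pcie_bandwidth_gb_s(3, 16))  # Gen3 x16
    print(round(pcie_bandwidth_gb_s(4, 16, encoded=True), 1))
```

With encoding overhead included, a Gen4 x16 link comes out slightly below the nominal figure, so the table values are best read as upper bounds rather than achievable throughput.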

