Data Preprocessing For Supervised Learning


Article · January 2006


3 authors: Sotiris Kotsiantis (University of Patras), Dimitris Kanellopoulos (University of Patras), P. E. Pintelas (University of Patras)


INTERNATIONAL JOURNAL OF COMPUTER SCIENCE VOLUME 1 NUMBER 1 2006 ISSN 1306-4428

Data Preprocessing for Supervised Learning

S. B. Kotsiantis, D. Kanellopoulos and P. E. Pintelas

Abstract: Many factors affect the success of Machine Learning (ML) on a given task. The representation and quality of the instance data is first and foremost. If there is much irrelevant and redundant information present or noisy and unreliable data, then knowledge discovery during the training phase is more difficult. It is well known that data preparation and filtering steps take a considerable amount of processing time in ML problems. Data pre-processing includes data cleaning, normalization, transformation, feature extraction and selection, etc. The product of data pre-processing is the final training set. It would be nice if a single sequence of data pre-processing algorithms had the best performance for each data set, but this is not the case. Thus, we present the most well-known algorithms for each step of data pre-processing so that one can achieve the best performance for their data set.

Keywords: data mining, feature selection, data cleaning

Manuscript received Feb 19, 2006. The Project is Co-Funded by the European Social Fund & National Resources - EPEAEK II.

S. B. Kotsiantis is with Educational Software Development Laboratory, University of Patras, Greece (phone: +302610997833; fax: +302610997313; e-mail: sotos@math.upatras.gr).

D. Kanellopoulos is with Educational Software Development Laboratory, University of Patras, Greece (e-mail: dkanellop@teipat.gr).

P. E. Pintelas is with Educational Software Development Laboratory, University of Patras, Greece (e-mail: pintelas@math.upatras.gr).

I. INTRODUCTION

Data preprocessing can often have a significant impact on the generalization performance of a supervised ML algorithm. The elimination of noise instances is one of the most difficult problems in inductive ML [48]. Usually the removed instances are excessively deviating instances that have too many null feature values. These excessively deviating instances are also referred to as outliers. In addition, a common approach to cope with the infeasibility of learning from very large data sets is to select a single sample from the large data set. Missing data handling is another issue often dealt with in the data preparation steps.

The symbolic, logical learning algorithms are able to process symbolic, categorical data only. However, real-world problems involve both symbolic and numerical features. Therefore, discretizing numerical (continuous) features is an important issue. Grouping the values of symbolic features is a useful process, too [18]. It is a known problem that features with too many values are overestimated in the process of selecting the most informative features, both for inducing decision trees and for deriving decision rules.

Moreover, in real-world data, the representation of data often uses too many features, but only a few of them may be related to the target concept. There may be redundancy, where certain features are correlated so that it is not necessary to include all of them in modeling; and interdependence, where two or more features between them convey important information that is obscure if any of them is included on its own [15]. Feature subset selection is the process of identifying and removing as much irrelevant and redundant information as possible. This reduces the dimensionality of the data and may allow learning algorithms to operate faster and more effectively. In some cases, accuracy on future classification can be improved; in others, the result is a more compact, easily interpreted representation of the target concept.

Furthermore, the problem of feature interaction can be addressed by constructing new features from the basic feature set. Transformed features generated by feature construction may provide a better discriminative ability than the best subset of given features.

This paper addresses issues of data pre-processing that can have a significant impact on the generalization performance of an ML algorithm. We present the most well-known algorithms for each step of data pre-processing so that one can achieve the best performance for their data set.

The next section covers instance selection and outlier detection. The processing of unknown feature values is described in section 3. The problem of choosing the interval borders and the correct arity (the number of categorical values) for discretization is covered in section 4. Section 5 explains the data normalization techniques (such as the scaling down transformation of the features) that are important for many neural network and k-Nearest Neighbour algorithms, while section 6 describes the Feature Selection (FS) methods. Finally, the feature construction algorithms are covered in section 7, and the closing section concludes this work.

II. INSTANCE SELECTION AND OUTLIERS DETECTION

Generally, instance selection approaches are divided into filter and wrapper approaches [13], [21]. Filter evaluation only considers data reduction and does not take the subsequent learning activities into account. In contrast, wrapper approaches explicitly emphasize the ML aspect and evaluate results by using the specific ML algorithm to trigger instance selection.

Variable-by-variable data cleaning is a straightforward filter approach (it flags values that are suspicious due to their relationship to a specific probability distribution, say a normal distribution with a mean of 5, a standard deviation of 3, and a suspicious value of 10). Table I shows examples of how this metadata can help in detecting a number of possible data quality problems. Moreover, a number of authors have focused on

IJCS VOLUME 1 NUMBER1 2006 ISSN 1306-4428 111 © 2006 WASET.ORG


the problem of duplicate instance identification and sets, and looked specifically at the number of instances

elimination, e.g., [16]. necessary before the learning curves reached a plateau.

Surprisingly, for these nineteen data sets, a plateau was

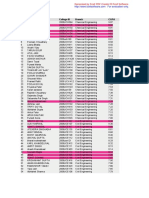

TABLE I

EXAMPLES FOR THE USE OF VARIABLE-BY-VARIABLE DATA reached after very few training instances.

CLEANING Reinartz [42] presents a unifying framework, which covers

individual state of the art approaches related to instance

Problems Metadata Examples/Heuristics selection. First, an application of a statistical sampling

e.g., cardinality (gender)> 2 technique draws an initial sample. In the next step, a

cardinality

indicates problem clustering technique groups the initial sample into subsets of

max, min should not be similar instances. For each of these subsets, the prototyping

Illegal max, min

outside of permissible range step selects or constructs a smaller set of representative

values

variance, deviation of prototypes. The set of prototypes then constitutes the final

variance,

statistical values should not output of instance selection.

deviation

be higher than threshold Typically learners are expected to be able to generalize over

sorting on values often unseen instances of any class with equal accuracy. That is, in

feature

Misspellings brings misspelled values a two class domain of positive and negative examples, the

values

next to correct values learner will perform on an unseen set of examples with equal

accuracy on both the positive and negative classes. This of

An inlier is a data value that lies in the interior of a statistical course is the ideal situation. In many applications learners are

distribution and is in error. Because inliers are difficult to faced with imbalanced data sets, which can cause the learner

distinguish from good data values they are sometimes difficult to be biased towards one class. This bias is the result of one

to find and correct. Multivariate data cleaning is more class being heavily under represented in the training data

difficult, but is an essential step in a complete analysis [43]. compared to the other classes. It can be attributed to two

Examples are the distance based outlier detection algorithm factors that relate to the way in which learners are designed:

RT [22] and the density based outliers LOF [3]. Inductive learners are typically designed to minimize errors

Brodley and Friedl [4] focus on wrapper approach with over the training examples. Classes containing few examples

improving the quality of training data by identifying and can be largely ignored by learning algorithms because the cost

eliminating mislabelled instances prior to applying the chosen of performing well on the over-represented class outweighs

ML algorithm. Their first step is to identify candidate the cost of doing poorly on the smaller class. Another factor

instances by using m learning algorithms to tag instances as contributing to the bias is over-fitting. Over-fitting occurs

correctly or incorrectly labelled. The second step is to form a when a learning algorithm creates a hypothesis that performs

classifier using a new version of the training data for which all well over the training data but does not generalize well over

of the instances identified as mislabelled are removed. unseen data. This can occur on an under represented class

Filtering can be based on one or more of the m base level because the learning algorithm creates a hypothesis that can

classifiers’ tags. easily fit a small number of examples, but it fits them too

However, instance selection isn’t only used to handle noise specifically.

but for coping with the infeasibility of learning from very Imbalanced data sets have recently received attention in the

large data sets. Instance selection in this case is an machine learning community. Common solutions of instance

optimization problem that attempts to maintain the mining selection include:

quality while minimizing the sample size [33]. It reduces data • Duplicating training examples of the under represented

and enables a learning algorithm to function and work class [30]. This is in effect re-sampling the examples and

effectively with huge data. There is a variety of procedures for will be referred to in this paper as over-sampling.

sampling instances from a large data set. The most well • Removing training examples of the over represented class

known are [6]: [26]. This is referred to as downsizing to reflect that the

• Random sampling that selects a subset of instances overall size of the data set is smaller after this balancing

randomly. technique has taken place.

• Stratified sampling that is applicable when the class

values are not uniformly distributed in the training sets. III. MISSING FEATURE VALUES

Instances of the minority class(es) are selected with a

Incomplete data is an unavoidable problem in dealing with

greater frequency in order to even out the distribution.

most of the real world data sources. The topic has been

Sampling is well accepted by the statistics community, who

discussed and analyzed by several researchers in the field of

observe that “a powerful computationally intense procedure

ML [5], [14]. Generally, there are some important factors to

operating on a sub-sample of the data may in fact provide

be taken into account when processing unknown feature

superior accuracy than a less sophisticated one using the entire

values. One of the most important ones is the source of

data base” [12]. In practice, as the amount of data grows, the

’unknownness’: (i) a value is missing because it was forgotten

rate of increase in accuracy slows, forming the familiar

or lost; (ii) a certain feature is not applicable for a given

learning curve. Whether sampling will be effective depends

instance, e.g., it does not exist for a given instance; (iii) for a

on how dramatically the rate of increase slows. Oates and

given observation, the designer of a training set does not care

Jensen [37] studied decision tree induction for nineteen data


about the value of a certain feature (so-called don’t-care value).

As in the previous case, the expert has to choose from a number of methods for handling missing data [27]:

• Method of Ignoring Instances with Unknown Feature Values: This method is the simplest: just ignore the instances that have at least one unknown feature value.

• Most Common Feature Value: The value of the feature that occurs most often is selected to be the value for all the unknown values of the feature.

• Concept Most Common Feature Value: This time, the value of the feature that occurs most often within the same class is selected to be the value for all the unknown values of the feature.

• Mean substitution: Substitute a feature’s mean value, computed from the available cases, to fill in the missing data values on the remaining cases. A smarter solution than using the “general” feature mean is to use the feature mean for all samples belonging to the same class to fill in the missing value.

• Regression or classification methods: Develop a regression or classification model based on complete case data for a given feature, treating it as the outcome and using all other relevant features as predictors.

• Hot deck imputation: Identify the most similar case to the case with a missing value and substitute the most similar case’s Y value for the missing case’s Y value.

• Method of Treating Missing Feature Values as Special Values: treating “unknown” itself as a new value for the features that contain missing values.

IV. DISCRETIZATION

Discretization should significantly reduce the number of possible values of the continuous feature, since a large number of possible feature values contributes to a slow and ineffective process of inductive ML. The problem of choosing the interval borders and the correct arity for the discretization of a numerical value range remains an open problem in numerical feature handling. The typical discretization process is presented in Fig. 1.

Generally, discretization algorithms can be divided into unsupervised algorithms, which discretize attributes without taking into account the class labels, and supervised algorithms, which discretize attributes by taking into account the class attribute [34]. The simplest discretization method is an unsupervised direct method named equal size discretization. It calculates the maximum and the minimum for the feature that is being discretized and partitions the observed range into k equal-sized intervals. Equal frequency is another unsupervised method. It counts the number of values we have from the feature that we are trying to discretize and partitions it into intervals containing the same number of instances.

[Fig. 1 Discretization process (flowchart): Continuous Attribute → Sort Attribute → Get Cut Point/Adjacent Intervals → Evaluation Measure → Measure satisfied? (No/Yes) → Split/Merge Attribute (discretize by splitting or merging adjacent intervals) → Stopping Criterion (No/Yes) → Discretized Attribute]

Most discretization methods can be divided into top-down and bottom-up methods. Top-down methods start from the initial interval and recursively split it into smaller intervals. Bottom-up methods start from the set of single-value intervals and iteratively merge neighboring intervals. Some of these methods require user parameters to modify the behavior of the discretization criterion or to set up a threshold for the stopping rule. Boulle [2] presented a recent discretization method named Khiops. This is a bottom-up method based on the global optimization of chi-square.

Moreover, error-based methods, for example Maas [35], evaluate candidate cut points against an error function and explore a search space of boundary points to minimize the sum of false positive and false negative errors on the training set. Entropy-based discretization is another supervised, incremental top-down method described in [11]. It recursively selects the cut-points minimizing entropy until a stopping criterion based on the Minimum Description Length criterion ends the recursion.

Static methods, such as binning and entropy-based partitioning, determine the number of partitions for each feature independently of the other features. On the other hand, dynamic methods [38] conduct a search through the space of possible k partitions for all features simultaneously, thereby capturing interdependencies in feature discretization. Kohavi and Sahami [23] have compared static discretization with dynamic methods using cross-validation to estimate the accuracy of different values of k. However, they report no significant improvement in employing dynamic discretization over static methods.

V. DATA NORMALIZATION

Normalization is a "scaling down" transformation of the features. Within a feature there is often a large difference between the maximum and minimum values, e.g. 0.01 and 1000. When normalization is performed, the value magnitudes are scaled to appreciably low values. This is important for many neural network and k-Nearest Neighbour algorithms. The two most common methods for this purpose are:


• min-max normalization: $v' = \frac{v - \min_A}{\max_A - \min_A}\,(\mathit{new\_max}_A - \mathit{new\_min}_A) + \mathit{new\_min}_A$

• z-score normalization: $v' = \frac{v - \mathit{mean}_A}{\mathit{stand\_dev}_A}$

where v is the old value of feature A and v' the new one; $\min_A$, $\max_A$, $\mathit{mean}_A$ and $\mathit{stand\_dev}_A$ are the minimum, maximum, mean and standard deviation of A, and $[\mathit{new\_min}_A, \mathit{new\_max}_A]$ is the target range.

VI. FEATURE SELECTION

Feature subset selection is the process of identifying and removing as many irrelevant and redundant features as possible (see Fig. 2). This reduces the dimensionality of the data and enables learning algorithms to operate faster and more effectively. Generally, features are characterized as:

• Relevant: These are features that have an influence on the output and whose role cannot be assumed by the rest.

• Irrelevant: Irrelevant features are defined as those features not having any influence on the output, and whose values are generated at random for each example.

• Redundant: A redundancy exists whenever a feature can take the role of another (perhaps the simplest way to model redundancy).

[Fig. 2 Feature subset selection (diagram): Original feature set → Generation → Subset of features → Evaluation → Goodness of the subset → Stopping criterion (no: back to Generation; yes: Selected subset) → Validation]

FS algorithms in general have two components [20]: a selection algorithm that generates proposed subsets of features and attempts to find an optimal subset; and an evaluation algorithm that determines how ‘good’ a proposed feature subset is, returning some measure of goodness to the selection algorithm. However, without a suitable stopping criterion the FS process may run exhaustively or forever through the space of subsets. Stopping criteria can be: (i) whether addition (or deletion) of any feature does not produce a better subset; and (ii) whether an optimal subset according to some evaluation function is obtained.

Ideally, feature selection methods search through the subsets of features and try to find the best one among the competing $2^N$ candidate subsets according to some evaluation function. However, this procedure is exhaustive, since it must evaluate every subset to be sure of finding the best one. It may be too costly and practically prohibitive, even for a medium-sized feature set (N). Other methods based on heuristic or random search attempt to reduce computational complexity by compromising performance.

Langley [28] grouped different FS methods into two broad groups (i.e., filter and wrapper) based on their dependence on the inductive algorithm that will finally use the selected subset. Filter methods are independent of the inductive algorithm, whereas wrapper methods use the inductive algorithm as the evaluation function. The filter evaluation functions can be divided into four categories: distance, information, dependence and consistency.

• Distance: For a two-class problem, a feature X is preferred to another feature Y if X induces a greater difference between the two-class conditional probabilities than Y [24].

• Information: Feature X is preferred to feature Y if the information gain from feature X is greater than that from feature Y [7].

• Dependence: The correlation coefficient is a classical dependence measure and can be used to find the correlation between a feature and a class. If the correlation of feature X with class C is higher than the correlation of feature Y with C, then feature X is preferred to Y [20].

• Consistency: Two samples are in conflict if they have the same values for a subset of features but disagree in the class they represent [31].

Relief [24] uses a statistical method to select the relevant features. Relief randomly picks a sample of instances and, for each instance in it, finds the Near Hit and Near Miss instances based on the Euclidean distance measure. The Near Hit is the instance having minimum Euclidean distance among all instances of the same class as that of the chosen instance; the Near Miss is the instance having minimum Euclidean distance among all instances of a different class. Relief updates the weights of the features, which are initialized to zero in the beginning, based on the intuitive idea that a feature is more relevant if it distinguishes between an instance and its Near Miss, and less relevant if it distinguishes between an instance and its Near Hit. After exhausting all instances in the sample, it chooses all features having weight greater than or equal to a threshold.

Several researchers have explored the possibility of using a particular learning algorithm as a pre-processor to discover useful feature subsets for a primary learning algorithm. Cardie [7] describes the application of decision tree algorithms to the task of selecting feature subsets for use by instance-based learners. C4.5 is run over the training set and the features that appear in the pruned decision tree are selected. In a similar approach, Singh and Provan [45] use a greedy oblivious decision tree algorithm to select features from which to construct a Bayesian network. BDSFS (Boosted Decision Stump FS) uses boosted decision stumps as the pre-processor to discover the feature subset [9]. The number of features to be selected is a parameter, say k, to the FS algorithm. The boosting algorithm is run for k rounds, and at each round all features that have previously been selected are ignored. If a feature is selected in any round, it becomes part of the set that will be returned.

FS with neural nets can be thought of as a special case of architecture pruning, where input features are pruned rather than hidden neurons or weights. The neural-network feature selector (NNFS) is based on the elimination of input layer weights [44]. Weight-based feature saliency measures rely on the idea that weights connected to important features attain large absolute values, while weights connected to unimportant features would probably attain values somewhere near zero.
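The weight-based saliency idea above can be illustrated with a minimal sketch. It is not NNFS [44]: as a simplifying assumption, it trains a single-layer sigmoid network (equivalent to logistic regression) by batch gradient descent with a small weight-decay term, scores each input by the absolute value of its weight, and keeps the inputs whose score reaches a threshold. The function names, the synthetic data and the threshold of 0.5 are hypothetical choices made for illustration only.

```python
import numpy as np


def train_single_layer_net(X, y, lr=0.5, epochs=2000, decay=1e-3):
    """Fit a single-layer sigmoid network (i.e. logistic regression) with
    batch gradient descent and an L2 weight-decay penalty.

    Hypothetical helper for illustration; returns the input weights only.
    """
    n_samples, n_features = X.shape
    w = np.zeros(n_features)
    b = 0.0
    for _ in range(epochs):
        p = 1.0 / (1.0 + np.exp(-(X @ w + b)))  # predicted P(class = 1)
        err = p - y                              # d(log-loss)/d(logit)
        w -= lr * (X.T @ err / n_samples + decay * w)
        b -= lr * err.mean()
    return w


def weight_saliency(w):
    """Saliency of each input: absolute value of its connecting weight."""
    return np.abs(w)


def select_by_saliency(saliency, threshold):
    """Indices of inputs whose saliency is at least `threshold`."""
    return [i for i, s in enumerate(saliency) if s >= threshold]


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    n = 500
    X = rng.normal(size=(n, 4))
    # Synthetic labels that depend only on inputs 0 and 1 (plus label noise);
    # inputs 2 and 3 are pure noise.
    y = (X[:, 0] - 2.0 * X[:, 1] + rng.normal(size=n) > 0).astype(float)
    X = (X - X.mean(axis=0)) / X.std(axis=0)  # z-score so weights are comparable
    w = train_single_layer_net(X, y)
    saliency = weight_saliency(w)
    print("saliency:", np.round(saliency, 2))
    print("selected inputs:", select_by_saliency(saliency, threshold=0.5))
```

On this synthetic data one would expect the two informative inputs to receive much larger absolute weights than the two noise inputs. The inputs are z-scored first so that weight magnitudes are comparable across features; for a network with hidden layers, a natural extension would be to sum the absolute weights leaving each input unit.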


Some FS procedures are based on comparing the saliency of a candidate feature with the saliency of a noise feature [1].

LVF [31] is a consistency-driven method that can handle noisy domains if the approximate noise level is known a priori. LVF generates a random subset S from the feature subset space during each round of execution. If S contains fewer features than the current best subset, the inconsistency rate of the dimensionally reduced data described by S is compared with the inconsistency rate of the best subset. If S is at least as consistent as the best subset, S replaces the best subset. LVS [32] is a variant of LVF that can decrease the number of checks, especially in the case of large datasets.

In Hall [17], a correlation measure is applied to evaluate the goodness of feature subsets based on the hypothesis that a good feature subset is one that contains features highly correlated with the class, yet uncorrelated with each other. Yu and Liu [50] introduced a novel concept, predominant correlation, and proposed a fast filter method which can identify relevant features as well as redundancy among relevant features without pairwise correlation analysis.

Generally, an optimal subset is always relative to a certain evaluation function (i.e., an optimal subset chosen using one evaluation function may not be the same as that chosen using another evaluation function). Wrapper methods wrap the FS around the induction algorithm to be used, using cross-validation to predict the benefits of adding or removing a feature from the feature subset used. In forward stepwise selection, a feature subset is iteratively built up. Each of the unused variables is added to the model in turn, and the variable that most improves the model is selected. In backward stepwise selection, the algorithm starts by building a model that includes all available input variables (i.e., all bits are set). In each iteration, the algorithm locates the variable that, if removed, most improves the performance (or causes the least deterioration). A problem with forward selection is that it may fail to include variables that are interdependent, as it adds variables one at a time. However, it may locate small effective subsets quite rapidly, as the early evaluations, involving relatively few variables, are fast. In contrast, in backward selection interdependencies are well handled, but early evaluations are relatively expensive. Because the naive Bayes classifier assumes that, within each class, the probability distributions for features are independent of each other, Langley and Sage [29] note that its performance on domains with redundant features can be improved by removing such features. A forward search strategy is employed to select features for use with naïve Bayes, as opposed to the backward strategies that are used most often with decision tree algorithms and instance-based learners.

Sequential forward floating selection (SFFS) and sequential backward floating selection (SBFS) are characterized by the changing number of features included or eliminated at different stages of the procedure [46]. The adaptive floating search [47] is able to find a better solution, of course at the expense of significantly increased computational time.

The genetic algorithm is another well-known approach for FS [49]. In each iteration, a feature is chosen and raced between being in the subset or excluded from it. All combinations of unknown features are used with equal probability. Due to the probabilistic nature of the search, a feature that should be in the subset will win the race, even if it is dependent on another feature. An important aspect of the genetic algorithm is that it is explicitly designed to exploit epistasis (that is, interdependencies between bits in the string), and thus should be well suited for this problem domain. However, genetic algorithms typically require a large number of evaluations to reach a minimum.

Piramuthu [39] compared a number of FS techniques without finding a real winner. To combine the advantages of filter and wrapper models, algorithms in a hybrid model have recently been proposed to deal with high-dimensional data [25]. In these algorithms, first, a goodness measure of feature subsets based on data characteristics is used to choose the best subsets for a given cardinality, and then cross-validation is exploited to decide a final best subset across different cardinalities.

VII. FEATURE CONSTRUCTION

The problem of feature interaction can also be addressed by constructing new features from the basic feature set. This technique is called feature construction/transformation. The newly generated features may lead to the creation of more concise and accurate classifiers. In addition, the discovery of meaningful features contributes to better comprehensibility of the produced classifier, and better understanding of the learned concept.

Assuming the original set A of features consists of $a_1, a_2, \ldots, a_n$, some variants of feature transformation are defined below. The feature transformation process can augment the space of features by inferring or creating additional features. After feature construction, we may have m additional features $a_{n+1}, a_{n+2}, \ldots, a_{n+m}$. For example, a new feature $a_k$ ($n < k \le n + m$) could be constructed by performing a logical operation on $a_i$ and $a_j$ from the original set of features.

The GALA algorithm [19] performs feature construction throughout the course of building a decision tree classifier. New features are constructed at each created tree node by performing a branch and bound search in feature space. The search is performed by iteratively combining the feature having the highest InfoGain value with an original basic feature that meets a certain filter criterion. GALA constructs new binary features by using logical operators such as conjunction, negation, and disjunction. On the other hand, Zheng [51] creates at-least M-of-N features. For a given instance, the value of an at-least M-of-N representation is true if at least M of its conditions are true of the instance, and false otherwise.

The feature transformation process can also extract a set of new features from the original features through some functional mapping. After feature extraction, we have $b_1, b_2, \ldots, b_m$ ($m < n$), where $b_i = f_i(a_1, a_2, \ldots, a_n)$ and $f_i$ is a mapping function. For instance, for real-valued features $a_1$ and $a_2$, for every object x we can define $b_1(x) = c_1 a_1(x) + c_2 a_2(x)$, where $c_1$ and $c_2$ are constants. While FICUS [36] is similar in some aspects to some of the existing feature construction algorithms (such as GALA), its main strength and contribution are its generality


and flexibility. FICUS was designed to perform feature generation given any feature representation specification (mainly the set of constructor functions) using its general-purpose grammar.

The choice between FS and feature construction depends on the application domain and the specific training data that are available. FS leads to savings in measurement cost, since some of the features are discarded, and the selected features retain their original physical interpretation. In addition, the retained features may be important for understanding the physical process that generates the patterns. On the other hand, transformed features generated by feature construction may provide better discriminative ability than the best subset of the given features, but these new features may not have a clear physical meaning.

VIII. CONCLUSION

Machine learning algorithms automatically extract knowledge from machine-readable information. Unfortunately, their success usually depends on the quality of the data they operate on. If the data are inadequate, or contain extraneous and irrelevant information, machine learning algorithms may produce less accurate and less understandable results, or may fail to discover anything of use at all. Thus, data pre-processing is an important step in the machine learning process. The pre-processing step is necessary to resolve several types of problems, including noisy data, redundant data and missing data values. All inductive learning algorithms rely heavily on the product of this stage, which is the final training set.

By selecting relevant instances, experts can usually remove irrelevant ones as well as noisy and/or redundant data. High-quality data lead to high-quality results and reduced costs for data mining. In addition, when a data set is too large, it may not be possible to run an ML algorithm on it. In this case, instance selection reduces the data and enables an ML algorithm to work effectively with very large data sets.

In most cases, missing data should be pre-processed so as to allow the whole data set to be processed by a supervised ML algorithm. Moreover, most of the existing ML algorithms are designed to extract knowledge from data sets that store discrete features. If the features are continuous, these algorithms can be combined with a discretization algorithm that transforms them into discrete attributes. A number of studies [23], [20] comparing the effects of various discretization techniques (on common ML domains and algorithms) have found entropy-based methods to be superior overall.

Feature subset selection is the process of identifying and removing as much of the irrelevant and redundant information as possible. Feature wrappers often achieve better results than filters because they are tuned to the specific interaction between an induction algorithm and its training data. However, they are much slower than feature filters. Moreover, the problem of feature interaction can be addressed by constructing new features from the basic feature set (feature construction). Generally, transformed features generated by feature construction may provide better discriminative ability than the best subset of the given features, but these new features may not have a clear physical meaning.

It would be convenient if a single sequence of data pre-processing algorithms performed best on every data set, but this is not the case. Thus, we have presented the most well-known algorithms for each step of data pre-processing, so that one can choose the combination that achieves the best performance on a given data set.

REFERENCES

[1] Bauer, K.W., Alsing, S.G., Greene, K.A., 2000. Feature screening using signal-to-noise ratios. Neurocomputing 31, 29–44.
[2] M. Boulle. Khiops: A Statistical Discretization Method of Continuous Attributes. Machine Learning 55:1 (2004) 53-69.
[3] Breunig M. M., Kriegel H.-P., Ng R. T., Sander J.: 'LOF: Identifying Density-Based Local Outliers', Proc. ACM SIGMOD Int. Conf. On Management of Data (SIGMOD 2000), Dallas, TX, 2000, pp. 93-104.
[4] Brodley, C.E. and Friedl, M.A. (1999) "Identifying Mislabeled Training Data", JAIR, Volume 11, pages 131-167.
[5] I. Bruha and F. Franek: Comparison of various routines for unknown attribute value processing: covering paradigm. International Journal of Pattern Recognition and Artificial Intelligence, 10, 8 (1996), 939-955.
[6] J.R. Cano, F. Herrera, M. Lozano. Strategies for Scaling Up Evolutionary Instance Reduction Algorithms for Data Mining. In: L.C. Jain, A. Ghosh (Eds.) Evolutionary Computation in Data Mining, Springer, 2005, 21-39.
[7] C. Cardie. Using decision trees to improve case-based learning. In Proceedings of the First International Conference on Knowledge Discovery and Data Mining. AAAI Press, 1995.
[8] M. Dash, H. Liu, Feature Selection for Classification, Intelligent Data Analysis 1 (1997) 131–156.
[9] S. Das. Filters, wrappers and a boosting-based hybrid for feature selection. Proc. of the 18th International Conference on Machine Learning, 2001.
[10] T. Elomaa, J. Rousu. Efficient multisplitting revisited: Optima-preserving elimination of partition candidates. Data Mining and Knowledge Discovery 8:2 (2004) 97-126.
[11] Fayyad U., and Irani K. (1993). Multi-interval discretization of continuous-valued attributes for classification learning. In Proc. of the Thirteenth Int. Joint Conference on Artificial Intelligence, 1022-1027.
[12] Friedman, J.H. 1997. Data mining and statistics: What's the connection? Proceedings of the 29th Symposium on the Interface Between Computer Science and Statistics.
[13] Marek Grochowski, Norbert Jankowski: Comparison of Instance Selection Algorithms II. Results and Comments. ICAISC 2004a: 580-585.
[14] Jerzy W. Grzymala-Busse and Ming Hu, A Comparison of Several Approaches to Missing Attribute Values in Data Mining, LNAI 2005, pp. 378-385, 2001.
[15] Isabelle Guyon, André Elisseeff; An Introduction to Variable and Feature Selection, JMLR Special Issue on Variable and Feature Selection, 3(Mar):1157–1182, 2003.
[16] Hernandez, M.A.; Stolfo, S.J.: Real-World Data is Dirty: Data Cleansing and the Merge/Purge Problem. Data Mining and Knowledge Discovery 2(1):9-37, 1998.
[17] Hall, M. (2000). Correlation-based feature selection for discrete and numeric class machine learning. Proceedings of the Seventeenth International Conference on Machine Learning (pp. 359–366).
[18] K. M. Ho, and P. D. Scott. Reducing Decision Tree Fragmentation Through Attribute Value Grouping: A Comparative Study, in Intelligent Data Analysis Journal, 4(1), pp.1-20, 2000.
[19] Hu, Y.-J., & Kibler, D. (1996). Generation of attributes for learning algorithms. Proc. 13th International Conference on Machine Learning.
[20] J. Hua, Z. Xiong, J. Lowey, E. Suh, E.R. Dougherty. Optimal number of features as a function of sample size for various classification rules. Bioinformatics 21 (2005) 1509-1515.
[21] Norbert Jankowski, Marek Grochowski: Comparison of Instances Selection Algorithms I. Algorithms Survey. ICAISC 2004b: 598-603.
[22] Knorr E. M., Ng R. T.: 'A Unified Notion of Outliers: Properties and Computation', Proc. 4th Int. Conf. on Knowledge Discovery and Data Mining (KDD'97), Newport Beach, CA, 1997, pp. 219-222.


[23] R. Kohavi and M. Sahami. Error-based and entropy-based discretisation of continuous features. In Proceedings of the Second International Conference on Knowledge Discovery and Data Mining. AAAI Press, 1996.
[24] Kononenko, I., Simec, E., and Robnik-Sikonja, M. (1997). Overcoming the myopia of inductive learning algorithms with RELIEFF. Applied Intelligence, 7: 39–55.
[25] S. B. Kotsiantis, P. E. Pintelas (2004), Hybrid Feature Selection instead of Ensembles of Classifiers in Medical Decision Support, Proceedings of Information Processing and Management of Uncertainty in Knowledge-Based Systems, July 4-9, Perugia - Italy, pp. 269-276.
[26] Kubat, M. and Matwin, S., 'Addressing the Curse of Imbalanced Data Sets: One Sided Sampling', in the Proceedings of the Fourteenth International Conference on Machine Learning, pp. 179-186, 1997.
[27] Lakshminarayan K., S. Harp & T. Samad, Imputation of Missing Data in Industrial Databases, Applied Intelligence 11, 259–275 (1999).
[28] Langley, P., Selection of relevant features in machine learning. In: Proceedings of the AAAI Fall Symposium on Relevance, 1–5, 1994.
[29] P. Langley and S. Sage. Induction of selective Bayesian classifiers. In Proc. of 10th Conference on Uncertainty in Artificial Intelligence, Seattle, 1994.
[30] Ling, C. and Li, C., 'Data Mining for Direct Marketing: Problems and Solutions', Proceedings of KDD-98.
[31] Liu, H. and Setiono, R., A probabilistic approach to feature selection - a filter solution. Proc. of International Conference on ML, 319–327, 1996.
[32] H. Liu and R. Setiono. Some Issues on scalable feature selection. Expert Systems with Applications, 15 (1998) 333-339. Pergamon.
[33] Liu, H. and H. Motoda (Eds), Instance Selection and Construction for Data Mining, Kluwer, Boston, MA, 2001.
[34] H. Liu, F. Hussain, C. L. Tan, M. Dash. Discretization: An Enabling Technique. Data Mining and Knowledge Discovery 6:4 (2002) 393-423.
[35] Maass W. (1994). Efficient agnostic PAC-learning with simple hypotheses. Proc. of the 7th ACM Conf. on Computational Learning Theory, 67-75.
[36] Markovitch S. & Rosenstein D. (2002), Feature Generation Using General Constructor Functions, Machine Learning, 49, 59–98, 2002.
[37] Oates, T. and Jensen, D. 1997. The effects of training set size on decision tree complexity. In ML: Proc. of the 14th Intern. Conf., pp. 254–262.
[38] Pfahringer B. (1995). Compression-based discretization of continuous attributes. Proc. of the 12th International Conference on Machine Learning.
[39] S. Piramuthu. Evaluating feature selection methods for learning in data mining applications. European Journal of Operational Research 156:2 (2004) 483-494.
[40] Pyle, D., 1999. Data Preparation for Data Mining. Morgan Kaufmann Publishers, Los Altos, CA.
[41] Quinlan J.R. (1993), C4.5: Programs for Machine Learning, Morgan Kaufmann, Los Altos, California.
[42] Reinartz T., A Unifying View on Instance Selection, Data Mining and Knowledge Discovery, 6, 191–210, 2002, Kluwer Academic Publishers.
[43] Rocke, D. M. and Woodruff, D. L. (1996) "Identification of Outliers in Multivariate Data," Journal of the American Statistical Association, 91, 1047–1061.
[44] Setiono, R., Liu, H., 1997. Neural-network feature selector. IEEE Trans. Neural Networks 8 (3), 654–662.
[45] M. Singh and G. M. Provan. Efficient learning of selective Bayesian network classifiers. In Machine Learning: Proceedings of the Thirteenth International Conference on Machine Learning. Morgan Kaufmann, 1996.
[46] Somol, P., Pudil, P., Novovicova, J., Paclik, P., 1999. Adaptive floating search methods in feature selection. Pattern Recognition Lett. 20 (11/13), 1157–1163.
[47] P. Somol, P. Pudil. Feature Selection Toolbox. Pattern Recognition 35 (2002) 2749-2759.
[48] C. M. Teng. Correcting noisy data. In Proc. 16th International Conf. on Machine Learning, pages 239–248. San Francisco, 1999.
[49] Yang J, Honavar V. Feature subset selection using a genetic algorithm. IEEE Intelligent Systems and their Applications 1998; 13(2): 44–49.
[50] Yu and Liu (2003), Proceedings of the Twentieth International Conference on Machine Learning (ICML-2003), Washington DC.
[51] Zheng (2000), Constructing X-of-N Attributes for Decision Tree Learning, Machine Learning, 40, 35–75, 2000, Kluwer Academic Publishers.

S. B. Kotsiantis received a diploma in mathematics, a Master's and a Ph.D. degree in computer science from the University of Patras, Greece. His research interests are in the field of data mining and machine learning. He has more than 40 publications to his credit in international journals and conferences.

D. Kanellopoulos received a diploma in electrical engineering and a Ph.D. degree in electrical and computer engineering from the University of Patras, Greece. He has more than 40 publications to his credit in international journals and conferences.

P. E. Pintelas is a Professor in the Department of Mathematics, University of Patras, Greece. His research interests are in the field of educational software and machine learning. He has more than 100 publications in international journals and conferences.
