The F-test is a statistical hypothesis test that determines whether the variances of two populations, estimated from two samples, are equal. Under the null hypothesis, the test statistic follows an F-distribution. The test compares the two variances by dividing one by the other, which gives the F statistic. Depending on the question being asked, an F-test can be one-tailed or two-tailed. This article covers the F-test, the F statistic and its calculation, the critical value, and how to use them to test hypotheses.

F-distribution

The F-distribution, also known as the Fisher-Snedecor distribution, is used in statistical hypothesis testing, particularly when comparing variances or testing means across several populations. It is a positively skewed distribution defined by two degrees-of-freedom parameters, df1 (numerator) and df2 (denominator).

The F-test is built on the F-distribution: in analyses such as ANOVA or regression, it assesses the equality of variances or of group means. The rejection region is determined by critical values of the F-distribution, which depend on the degrees of freedom and the significance level. An F-distributed variable arises as the ratio of two independent chi-square variables, each divided by its own degrees of freedom.

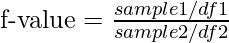

Formula for F-distribution:

$$F = \frac{S_1 / df_1}{S_2 / df_2}$$

(Equation 1)

- S1 and S2 are independent random variables, each with a chi-square distribution.

- df1 and df2 are the degrees of freedom of S1 and S2, respectively.

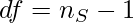
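To see the chi-square construction in action, the following minimal sketch draws F-distributed values using only Python's standard library. It relies on the standard identity that a chi-square variable with k degrees of freedom is a gamma variable with shape k/2 and scale 2. The function name `sample_f` and the degrees of freedom (5, 10) are illustrative choices, not part of the article.

```python
import random
import statistics

def sample_f(df1, df2, rng):
    """Draw one F(df1, df2) value as a ratio of scaled chi-square draws."""
    # Chi-square with k degrees of freedom == Gamma(shape=k/2, scale=2).
    chi2_num = rng.gammavariate(df1 / 2, 2)
    chi2_den = rng.gammavariate(df2 / 2, 2)
    return (chi2_num / df1) / (chi2_den / df2)

rng = random.Random(0)  # fixed seed so the run is repeatable
draws = [sample_f(5, 10, rng) for _ in range(100_000)]

# For df2 > 2 the theoretical mean is df2 / (df2 - 2), i.e. 1.25 here.
print("sample mean  :", round(statistics.fmean(draws), 3))
print("sample median:", round(statistics.median(draws), 3))
```

The sample mean sitting above the sample median is what the right skew of the distribution would lead us to expect.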

Degrees of Freedom

The degrees of freedom reflect the number of independent observations behind each chi-square variable in the ratio, and together they determine the shape of the F-distribution. The distribution is right-skewed, meaning it has a longer tail on the right side. As both degrees of freedom increase, it becomes more symmetric and approaches a bell shape.
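One rough way to watch the skew shrink is to compare the mode and the mean of the distribution as the degrees of freedom grow. The sketch below uses the standard closed-form expressions for the mean (valid for df2 > 2) and the mode (valid for df1 > 2) of an F-distribution. These formulas and the helper names `f_mean` and `f_mode` are brought in for illustration and do not come from the article.

```python
def f_mean(df2):
    """Mean of F(df1, df2); defined only for df2 > 2."""
    return df2 / (df2 - 2)

def f_mode(df1, df2):
    """Mode of F(df1, df2); this formula holds for df1 > 2."""
    return ((df1 - 2) / df1) * (df2 / (df2 + 2))

# The gap between mode and mean narrows as the degrees of freedom increase.
for df in (5, 20, 100, 1000):
    print(f"df1 = df2 = {df:>4}: mode = {f_mode(df, df):.3f}, mean = {f_mean(df):.3f}")
```

Both values move toward 1 as the degrees of freedom increase, which is consistent with the distribution becoming more symmetric.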

What is F-Test?

The F-test is a statistical technique that uses the F statistic to determine whether the variances of two populations are equal. The two samples must be independent and drawn from normally distributed populations; under the null hypothesis, the F statistic then follows an F-distribution. If the result of the test is statistically significant, the null hypothesis is rejected; otherwise, we fail to reject it.

We can use this test when:

- The populations are normally distributed.

- The samples are drawn at random and are independent of each other.

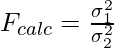

F-Test Formula using two variances

$$F_{calc} = \frac{\sigma_1^2}{\sigma_2^2}$$

Here,

- Fcalc = Calculated F-value (the test statistic).

- σ1² & σ2² = variances of the two samples.

$$df = n_S - 1$$

Here,

- df = Degrees of freedom of the sample.

- nS = Sample size.
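The variance ratio and the degrees of freedom described above can be computed from raw data in a few lines. This is a minimal sketch: the function name `f_statistic` and the two data lists are hypothetical, and the sample variance (n - 1 divisor) from Python's `statistics` module is assumed to be the intended estimator.

```python
import statistics

def f_statistic(sample_a, sample_b):
    """Return (F, df_numerator, df_denominator) with the larger variance on top."""
    var_a = statistics.variance(sample_a)  # sample variance (n - 1 divisor)
    var_b = statistics.variance(sample_b)
    if var_a >= var_b:
        return var_a / var_b, len(sample_a) - 1, len(sample_b) - 1
    return var_b / var_a, len(sample_b) - 1, len(sample_a) - 1

# Hypothetical measurements, made up purely for illustration.
group_1 = [12.0, 15.0, 11.0, 18.0, 14.0]
group_2 = [13.0, 14.0, 13.5, 14.5, 13.0, 14.0]

f_calc, df1, df2 = f_statistic(group_1, group_2)
print(f"F = {f_calc:.2f}, df1 = {df1}, df2 = {df2}")
```

Putting the larger variance in the numerator keeps F at or above 1, so only the upper tail of the F-distribution is needed when looking up the critical value.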

Hypothesis Testing Framework for F-test

The F-test is carried out within the hypothesis testing framework to check whether two variances are equal. The hypotheses and decision rule for each type of test are as follows:

Left Tailed Test:

Null Hypothesis: H0 : $\sigma_{1}^2 = \sigma_{2}^2$

Alternate Hypothesis: H1 : $\sigma_{1}^2 < \sigma_{2}^2$

Decision-Making Standard: Reject the null hypothesis if the F statistic is less than the F critical value.

Right Tailed Test:

Null Hypothesis: H0 : $\sigma_{1}^2 = \sigma_{2}^2$

Alternate Hypothesis: H1 : $\sigma_{1}^2 > \sigma_{2}^2$

Decision-Making Standard: Reject the null hypothesis if the F statistic is greater than the F critical value.

Two Tailed Test:

Null Hypothesis: H0 : $\sigma_{1}^2 = \sigma_{2}^2$

Alternate Hypothesis: H1 : $\sigma_{1}^2 \neq \sigma_{2}^2$

Decision-Making Standard: Reject the null hypothesis if the F statistic, computed with the larger variance in the numerator, is greater than the F critical value at α/2.
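The three decision rules above can be written as one small function. This is only a sketch: the name `reject_null` and the tail labels `"left"`, `"right"`, and `"two"` are made up for illustration, and the two-tailed branch assumes the larger variance was placed in the numerator and that the critical value was looked up at α/2.

```python
def reject_null(f_stat, f_crit, tail):
    """Apply the decision rule for a 'left', 'right', or 'two' tailed F-test.

    For 'two', f_stat is assumed to have the larger variance in the numerator
    and f_crit is assumed to be the upper critical value at alpha / 2.
    """
    if tail == "left":
        return f_stat < f_crit
    if tail in ("right", "two"):
        return f_stat > f_crit
    raise ValueError(f"unknown tail: {tail!r}")

# Values from the article's worked example: F = 1.66, critical value 2.287.
print(reject_null(1.66, 2.287, "two"))
```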

F Test Statistic Formula Assumptions

The F-test formula rests on several assumptions. The populations must be normally distributed, and the samples must be independent of each other. In addition, keep the following points in mind:

- Right-tailed tests are simpler to calculate. Placing the larger variance in the numerator forces the test to be right-tailed.

- In two-tailed tests, alpha is divided by two before the critical value is determined.

- A variance is the square of the corresponding standard deviation.

Steps to calculate F-Test

Step 1: If the variance (σ²) is not already given, compute it by squaring the standard deviation (σ).

Step 2: Determine the null and alternate hypothesis.

- H0: no difference in variances.

- H1: difference in variances.

Step 3: Find Fcalc, the ratio of the two variances (the F-value).

NOTE : While calculating Fcalc, divide the larger variance by the smaller variance, as it makes the calculations easier.

Step 4: Find the degrees of freedom of the two samples.

Step 5: Find the Ftable value from the F-distribution table using d1 and d2 obtained in Step 4. Take the significance level α = 0.05 (if not given).

Looking up the F-distribution table:

In the F-distribution table, use the table that corresponds to the value of α given in the question.

- d1 (across the columns) = df of the sample whose variance is in the numerator (the larger variance).

- d2 (down the rows) = df of the sample whose variance is in the denominator (the smaller variance).

Consider the excerpt of the F-distribution table given below for a one-tailed F-test.

GIVEN:

α = 0.05

d1 = 2

d2 = 3

| d2 \ d1 | 1 | 2 | . . |
|---|---|---|---|
| 1 | 161.4 | 199.5 | . . |
| 2 | 18.51 | 19.00 | . . |
| 3 | 10.13 | 9.55 | . . |
| : | : | : | . . |

Then, Ftable = 9.55
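When a printed table is not at hand, the critical value can also be computed. The sketch below is one possible approach, not the article's method: it evaluates the F cumulative distribution through the regularized incomplete beta function (using a textbook continued-fraction expansion) and then finds the critical value by bisection. All function names are hypothetical, and only the Python standard library is assumed.

```python
import math

def _beta_cf(a, b, x, max_iter=300, eps=1e-12):
    """Continued fraction for the incomplete beta function (modified Lentz)."""
    tiny = 1e-300
    c = 1.0
    d = 1.0 - (a + b) * x / (a + 1.0)
    d = 1.0 / (d if abs(d) > tiny else tiny)
    h = d
    for m in range(1, max_iter + 1):
        m2 = 2 * m
        # Even step of the recurrence.
        aa = m * (b - m) * x / ((a - 1.0 + m2) * (a + m2))
        d = 1.0 + aa * d
        d = 1.0 / (d if abs(d) > tiny else tiny)
        c = 1.0 + aa / c
        c = c if abs(c) > tiny else tiny
        h *= d * c
        # Odd step of the recurrence.
        aa = -(a + m) * (a + b + m) * x / ((a + m2) * (a + 1.0 + m2))
        d = 1.0 + aa * d
        d = 1.0 / (d if abs(d) > tiny else tiny)
        c = 1.0 + aa / c
        c = c if abs(c) > tiny else tiny
        delta = d * c
        h *= delta
        if abs(delta - 1.0) < eps:
            break
    return h

def beta_inc(a, b, x):
    """Regularized incomplete beta function I_x(a, b)."""
    if x <= 0.0:
        return 0.0
    if x >= 1.0:
        return 1.0
    log_front = (math.lgamma(a + b) - math.lgamma(a) - math.lgamma(b)
                 + a * math.log(x) + b * math.log1p(-x))
    front = math.exp(log_front)
    if x < (a + 1.0) / (a + b + 2.0):
        return front * _beta_cf(a, b, x) / a
    return 1.0 - front * _beta_cf(b, a, 1.0 - x) / b

def f_cdf(x, d1, d2):
    """P(F <= x) for an F-distribution with (d1, d2) degrees of freedom."""
    if x <= 0.0:
        return 0.0
    return beta_inc(d1 / 2, d2 / 2, d1 * x / (d1 * x + d2))

def f_critical(alpha, d1, d2):
    """Upper-tail critical value: the x with P(F > x) = alpha, by bisection."""
    target = 1.0 - alpha
    lo, hi = 0.0, 1.0
    while f_cdf(hi, d1, d2) < target:  # expand until the root is bracketed
        hi *= 2.0
    for _ in range(100):
        mid = (lo + hi) / 2
        if f_cdf(mid, d1, d2) < target:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2

print(round(f_critical(0.05, 2, 3), 2))
```

For α = 0.05, d1 = 2 and d2 = 3, the computed value should agree with the 9.55 read from the table, up to rounding.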

Step 6: Interpret the results using Fcalc and Ftable.

Interpreting the results:

If Fcalc < Ftable :

Cannot reject the null hypothesis.

∴ There is no significant evidence that the variances of the two populations differ.

If Fcalc > Ftable :

Reject the null hypothesis.

∴ The variances of the two populations are significantly different.

Example Problem for calculating F-Test

Consider the following example,

Conduct a two-tailed F-Test on the following samples:

| | Sample 1 | Sample 2 |
|---|---|---|
| Standard deviation (σ) | 10.47 | 8.12 |
| Sample size (n) | 41 | 21 |

Step 1: State the hypotheses:

- H0: no difference in variances.

- H1: difference in variances.

Step 2: Let’s calculate the variances for the numerator and denominator.

F-value = $\sigma_1^2 / \sigma_2^2$

- σ1² = (10.47)² = 109.62

- σ2² = (8.12)² = 65.93

Fcalc = (109.62 / 65.93) = 1.66

Step 3: Now, let’s calculate the degrees of freedom.

Degrees of freedom = sample size – 1

Sample 1 = n1 = 41

Sample 2 = n2 = 21

Degrees of freedom of sample 1 = d1 = (n1 – 1) = (41 – 1) = 40

Degrees of freedom of sample 2 = d2 = (n2 – 1) = (21 – 1) = 20

Step 4 – The question does not specify an alpha level, so the usual value of α = 0.05 is used. Since this is a two-tailed F-test, alpha is halved:

α = 0.05/2 = 0.025

Step 5 – Look up the critical F value in the F-distribution table for α = 0.025 with d1 = 40 and d2 = 20. The critical value for (40, 20) is 2.287.

Therefore, Ftable = 2.287

Step 6 – Since Fcalc < Ftable (1.66 < 2.287):

We cannot reject the null hypothesis.

∴ There is no significant evidence that the variances of the two populations differ.
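The arithmetic of this example can be re-checked with a short script. It is a sketch that takes the standard deviations, sample sizes, and the table value 2.287 directly from the example above; the variable names are arbitrary.

```python
# Summary statistics taken from the worked example.
s1, n1 = 10.47, 41   # standard deviation and size of sample 1
s2, n2 = 8.12, 21    # standard deviation and size of sample 2
f_table = 2.287      # critical value for (40, 20) at alpha/2 = 0.025, from the text

var1, var2 = s1 ** 2, s2 ** 2
# The larger variance goes in the numerator so the test is right-tailed.
if var1 >= var2:
    f_calc, d1, d2 = var1 / var2, n1 - 1, n2 - 1
else:
    f_calc, d1, d2 = var2 / var1, n2 - 1, n1 - 1

print(f"variances: {var1:.2f}, {var2:.2f}")
print(f"F_calc = {f_calc:.2f} with d1 = {d1}, d2 = {d2}")
print("reject H0" if f_calc > f_table else "cannot reject H0")
```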

The F-test is most often used to compare statistical models that have been fitted to a data set, in order to identify the model that best fits the population from which the data were sampled.

Frequently Asked Questions (FAQs)

1. What is the difference between the F-test and t-test?

The t-test is employed to assess whether the means of two groups are significantly distinct, providing a measure of the difference between them. On the other hand, the F-test is utilized to compare the variances of two or more groups, determining whether these variances are significantly different from one another.

2. Are ANOVA and the F-test the same?

ANOVA and the F-test are related but not the same: the F-test is a component of ANOVA. ANOVA (Analysis of Variance) is a statistical technique that relies on the F-test. Within ANOVA, the F-test compares the variance between groups with the variance within groups, which helps determine whether there are significant differences among the group means.

3. What is the p-value of the F-test?

The p-value of the F-test represents the probability of obtaining the observed variance ratio or a more extreme ratio, assuming the null hypothesis of equal variances is true. A smaller p-value indicates stronger evidence against the null hypothesis.

4. What is the F-test to compare variances?

The F-test to compare variances assesses whether the variances of two or more groups are statistically different. It involves comparing the ratio of variances, providing a test statistic that follows an F-distribution under the null hypothesis of equal variances.
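The p-value described in question 3 can be approximated numerically. The sketch below integrates the F density with the composite Simpson's rule, which is a simple stand-in for the exact distribution function that a statistics library would provide. It assumes d1 > 2, so that the density is zero at the origin. The function names are hypothetical, the inputs (F = 1.66, d1 = 40, d2 = 20) come from the article's worked example, and doubling the one-tailed value for a two-tailed test is a common convention assumed here.

```python
import math

def f_pdf(x, d1, d2):
    """Density of the F-distribution; returns 0 at x <= 0 (valid for d1 > 2)."""
    if x <= 0.0:
        return 0.0
    log_beta = math.lgamma(d1 / 2) + math.lgamma(d2 / 2) - math.lgamma((d1 + d2) / 2)
    log_pdf = (0.5 * d1 * math.log(d1) + 0.5 * d2 * math.log(d2)
               + (0.5 * d1 - 1.0) * math.log(x)
               - 0.5 * (d1 + d2) * math.log(d2 + d1 * x)
               - log_beta)
    return math.exp(log_pdf)

def f_upper_tail(f_value, d1, d2, n=2000):
    """P(F > f_value): 1 minus a Simpson's-rule integral over [0, f_value]."""
    h = f_value / n  # n must be even for Simpson's rule
    total = f_pdf(0.0, d1, d2) + f_pdf(f_value, d1, d2)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * f_pdf(i * h, d1, d2)
    return 1.0 - total * h / 3.0

p_one_tailed = f_upper_tail(1.66, 40, 20)
p_two_tailed = min(1.0, 2.0 * p_one_tailed)
print(f"one-tailed p = {p_one_tailed:.3f}, two-tailed p = {p_two_tailed:.3f}")
```

A two-tailed p-value above 0.05 leads to the same conclusion as comparing Fcalc with Ftable: the null hypothesis of equal variances is not rejected.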
