Autoregressive large language models (LLMs) like ChatGPT have revolutionized natural language processing by demonstrating the ability to generate coherent, contextually relevant text. However, getting the most out of them requires a nuanced understanding of how to use prompts effectively.

In this article, we delve into strategies and techniques for achieving better results with autoregressive LLMs through the use of prompts.

Tips and Practices for Achieving Better Results Using Prompts With LLMs

Crafting effective prompts is key to harnessing the full potential of autoregressive LLMs like ChatGPT. By providing context and constraints, prompts let users steer the model's responses toward specific objectives. Effective prompts not only improve the quality of the generated text but also give finer control over the model's behavior.

Let's briefly discuss six tips and practices for achieving better results with prompts.

1. Be Specific and Detailed in Your Prompts

The precision of your prompt directly influences the accuracy and relevance of the model's response. Specificity narrows down the model's focus, guiding it to generate information that aligns closely with your query. This approach is particularly beneficial when dealing with complex subjects or when you're looking for detailed insights.

For example, consider the following prompt:

- Provide a detailed overview of the colonization prospects of Mars, including technological requirements and potential human challenges.

A vague prompt that names only a general topic lacks specificity and might lead to a broad, unfocused response. In contrast, this prompt explicitly outlines the subject (Mars colonization), the type of information needed (technological requirements and human challenges), and the depth of explanation expected (detailed overview). This level of specificity keeps the model from veering off into unrelated aspects of space and focuses its 'thought' process on generating a structured and informative response.
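The three elements named above (subject, type of information, and expected depth) can be made explicit with a small template, so none of them is forgotten when you write the next prompt. This is a minimal sketch: `build_specific_prompt` and `join_items` are hypothetical helpers, not part of any library, and the template wording is an assumption chosen to reproduce the Mars prompt.

```python
def join_items(items: list[str]) -> str:
    """Join items as 'a', 'a and b', or 'a, b, and c'."""
    if len(items) <= 2:
        return " and ".join(items)
    return ", ".join(items[:-1]) + ", and " + items[-1]


def build_specific_prompt(subject: str, aspects: list[str], depth: str = "detailed") -> str:
    """Spell out the subject, the information needed, and the expected depth."""
    if not aspects:
        raise ValueError("list at least one aspect so the model knows what to cover")
    return f"Provide a {depth} overview of {subject}, including {join_items(aspects)}."


prompt = build_specific_prompt(
    subject="the colonization prospects of Mars",
    aspects=["technological requirements", "potential human challenges"],
)
print(prompt)
```

Raising an error on an empty `aspects` list is a deliberate choice in this sketch: a prompt with no stated aspects falls back to the broad, unfocused kind this tip warns against.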

2. Use Clear and Structured Language

Clarity and structure in your prompts are essential for effective communication with LLMs. A well-structured prompt helps the model understand the sequence and importance of the information requested, leading to more coherent and logically organized responses. Avoid ambiguity and complexity that could confuse the model or dilute the focus of its output.

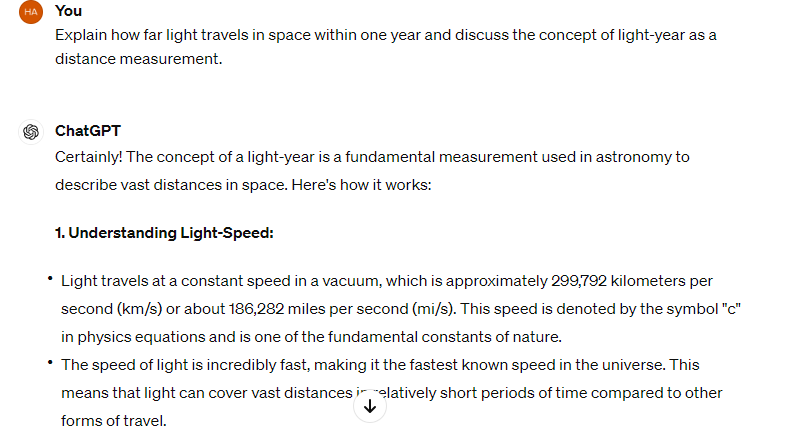

Take, for instance, the difference in clarity between these two prompts:

- Space, how far, light speed?

- Explain how far light travels in space within one year and discuss the concept of light-year as a distance measurement.

The first prompt lacks clarity and structure, potentially confusing the model and resulting in an unclear response. The second prompt uses clear, structured language to delineate the request into two parts: an explanation of light travel in space and a discussion on the concept of a light-year. This clarity ensures that the model's response is both comprehensive and focused.
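One way to keep a multi-part request clearly structured, especially once it grows beyond two parts, is to number each sub-request so the model sees their order and boundaries. A minimal sketch, assuming numbered lines are the structuring convention you want; `build_structured_prompt` and its opening instruction line are hypothetical.

```python
def build_structured_prompt(parts: list[str]) -> str:
    """Turn a list of sub-requests into a numbered, one-request-per-line prompt."""
    if not parts:
        raise ValueError("a prompt needs at least one request")
    lines = ["Please answer each part in order:"]
    for number, part in enumerate(parts, start=1):
        lines.append(f"{number}. {part}")
    return "\n".join(lines)


prompt = build_structured_prompt([
    "Explain how far light travels in space within one year.",
    "Discuss the concept of a light-year as a distance measurement.",
])
print(prompt)
```

Numbering is only one option; headings or bullet points serve the same purpose of separating the parts of the request.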

3. Incorporate Contextual Cues When Necessary

Contextual cues are pivotal in directing the model's response towards the intended interpretation of your prompt, especially for topics with multiple meanings or recent developments. Providing context helps the model apply the most relevant knowledge base, enhancing the accuracy of its outputs.

Consider a prompt without context versus one that adds a specific year and focus areas:

- Discuss the latest trends in AI

- Discuss the latest trends in AI in 2023, focusing on advancements in natural language processing and generative models.

The first prompt lacks context, leaving the model to interpret the term "latest trends in AI" without specific guidance. In contrast, the second prompt provides context by specifying the year (2023) and the focus areas (advancements in natural language processing and generative models). This contextual information ensures that the model's response is relevant and aligned with the user's interests.
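If you reuse the same base task with different contexts, the temporal and thematic cues can be appended programmatically. A minimal sketch: `add_context` and `join_items` are hypothetical helpers, and the phrase "focusing on advancements in" is an assumption copied from the example above.

```python
from typing import Optional


def join_items(items: list[str]) -> str:
    """Join items as 'a', 'a and b', or 'a, b, and c'."""
    if len(items) <= 2:
        return " and ".join(items)
    return ", ".join(items[:-1]) + ", and " + items[-1]


def add_context(task: str, year: Optional[int] = None,
                focus_areas: Optional[list[str]] = None) -> str:
    """Append temporal (year) and thematic (focus areas) context to a bare task."""
    prompt = task.rstrip(".")
    if year is not None:
        prompt += f" in {year}"
    if focus_areas:
        prompt += f", focusing on advancements in {join_items(focus_areas)}"
    return prompt + "."


bare = add_context("Discuss the latest trends in AI")
contextual = add_context(
    "Discuss the latest trends in AI",
    year=2023,
    focus_areas=["natural language processing", "generative models"],
)
print(contextual)
```

Naming a year tells the model which period you mean; it cannot supply knowledge the model did not see in its training data.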

4. Specify the Desired Output Format

Specifying the desired output format in your prompt can significantly influence the utility and readability of the model's response. Whether you need a concise summary, a detailed analysis, or a list of bullet points, making this clear in your prompt ensures that the model's output meets your expectations.

For example, the first prompt below can produce responses that vary widely in length and detail, while the refined version explicitly asks for a concise table, a format that may be more useful for quick reference or study:

- List the advantages and disadvantages of renewable energy sources.

- List the advantages and disadvantages of renewable energy sources in a tabular format, with three points under each category

The second prompt specifies the desired output format: a table of the advantages and disadvantages of renewable energy sources. By setting the number of points under each category (three), it directs the model to organize the information in a structured, concise manner. This format lets the user quickly compare the pros and cons of renewable energy sources for decision-making or study.
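A side benefit of requesting a precise format is that the reply becomes easy to check. The sketch below builds the format-constrained prompt and then counts the body rows of a Markdown pipe table in a reply. Both functions are hypothetical helpers, it assumes the model answers with a Markdown table (worth stating explicitly in a real prompt), and `sample_reply` is a made-up string used only to exercise the checker, not actual model output.

```python
NUMBER_WORDS = {1: "one", 2: "two", 3: "three", 4: "four", 5: "five"}


def with_output_format(task: str, fmt: str, points_per_category: int) -> str:
    """Append an explicit output format and size limit to a task."""
    count = NUMBER_WORDS.get(points_per_category, str(points_per_category))
    return f"{task.rstrip('.')} in a {fmt} format, with {count} points under each category."


def table_body_rows(reply: str) -> list[list[str]]:
    """Return the body rows of a Markdown pipe table, skipping header and separator."""
    table_lines = [ln.strip() for ln in reply.splitlines() if ln.strip().startswith("|")]
    return [
        [cell.strip() for cell in ln.strip("|").split("|")]
        for ln in table_lines[2:]  # [0] is the header row, [1] the |---| separator
    ]


prompt = with_output_format(
    "List the advantages and disadvantages of renewable energy sources", "tabular", 3
)

# Made-up reply, used only to exercise the checker.
sample_reply = """| Advantages | Disadvantages |
|---|---|
| Low emissions | Intermittent supply |
| Low running costs | High upfront cost |
| Renewable supply | Land use |"""

rows = table_body_rows(sample_reply)
print(len(rows) == 3 and all(len(r) == 2 for r in rows))  # True: three rows, two columns
```

The parser is deliberately simple; it does not handle escaped pipes inside cells, which is acceptable for short comparison tables like this one.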

5. Iteratively Refine Your Prompts Based on Responses

The process of prompt engineering is iterative. Initial prompts may not elicit the perfect response on the first try. Based on the model's output, you can refine your prompt to clarify, expand, or redirect the focus of your query. This iterative refinement helps you home in on the exact information or style of response you're seeking.

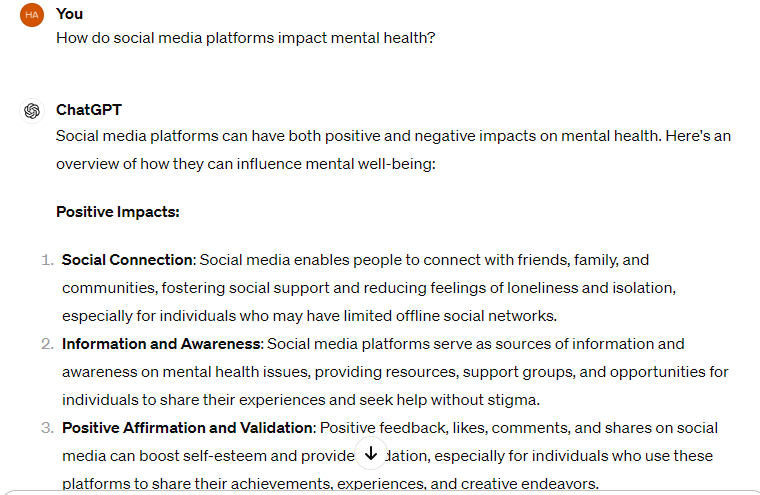

Starting with a broad prompt like the first one below might yield a general response. If the initial output isn't what you were looking for, you can refine it into something more specific, such as the second one, which is more likely to produce a targeted and useful response.

- How do social media platforms impact mental health?

- Discuss the psychological effects of prolonged social media use on adolescents, focusing on aspects such as self-esteem, body image, and interpersonal relationships. Provide insights from recent studies and include potential strategies for mitigating negative impacts. Additionally, explore any cultural or demographic factors that may influence these effects.

The initial prompt addresses a broad topic, but the response may lack depth or specificity. After receiving the initial response, the user can refine the prompt to provide clearer guidance and additional details on what they're seeking. The refined prompt specifies the target demographic (adolescents) and key aspects of mental health impacted by social media use. It also requests insights from recent studies and potential mitigation strategies, as well as exploration of cultural or demographic factors influencing these effects. This iterative refinement process helps to elicit a more targeted and informative response from the model.
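The refine-and-retry cycle described above can be sketched as a loop over successively refined prompts that stops once a response is acceptable. In practice the "acceptable" judgment is usually you reading the output; here it is modeled as a predicate for illustration. `refine_until_satisfied` is hypothetical, and `fake_ask` is a stand-in for whatever function sends a prompt to your model; no real API is called. The second prompt is shortened for brevity.

```python
from typing import Callable, Sequence, Tuple


def refine_until_satisfied(
    prompts: Sequence[str],
    ask: Callable[[str], str],
    is_satisfactory: Callable[[str], bool],
) -> Tuple[str, str, int]:
    """Try successively refined prompts until a response passes the check.

    Returns the last prompt sent, its response, and how many prompts were tried.
    """
    if not prompts:
        raise ValueError("need at least one prompt")
    result = ("", "", 0)
    for attempt, prompt in enumerate(prompts, start=1):
        response = ask(prompt)
        result = (prompt, response, attempt)
        if is_satisfactory(response):
            break
    return result


def fake_ask(prompt: str) -> str:
    # Stand-in for a real model call; replace with your own client code.
    if "adolescents" in prompt:
        return "Focused discussion of self-esteem, body image, and relationships in adolescents."
    return "General overview of social media and mental health."


prompts = [
    "How do social media platforms impact mental health?",
    "Discuss the psychological effects of prolonged social media use on adolescents, "
    "focusing on aspects such as self-esteem, body image, and interpersonal relationships.",
]
final_prompt, reply, tries = refine_until_satisfied(
    prompts, fake_ask, is_satisfactory=lambda r: "adolescents" in r
)
print(tries)  # 2
```

If no prompt passes the check, the function still returns the last attempt, so you can inspect it and write the next refinement by hand.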

6. Leverage Keywords That Signal Intent

The use of specific keywords can signal your intent to the model, helping it discern whether you're seeking a factual answer, a creative piece, or a technical explanation. This clarity helps the model align its response with your expectations, improving the relevance and quality of the output.

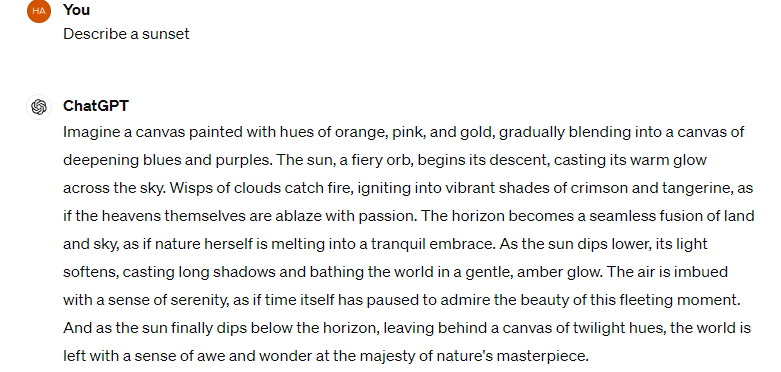

For instance, the prompt "Describe a sunset" can be interpreted in numerous ways, while a refined version adds keywords that make the requester's intent explicit:

- Describe a sunset

- Write a poetic description of a sunset over the ocean, focusing on the colors and emotions it evokes.

The first prompt is open-ended and may result in various interpretations. In contrast, the second prompt incorporates keywords like "poetic," "colors," and "emotions," signaling the desired tone and focus of the response. This guidance helps the model generate a response that aligns with the requester's intent, producing a descriptive and evocative portrayal of the sunset.
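If you often ask about the same subject with different intents, the intent-signalling keywords can live in a small lookup table. A minimal sketch: `INTENT_OPENERS` and `prompt_with_intent` are hypothetical, and the opener phrases other than the poetic one are assumptions chosen only for illustration.

```python
# Hypothetical mapping from intent to an opening instruction; the wording is an assumption.
INTENT_OPENERS = {
    "creative": "Write a poetic description of",
    "technical": "Explain the physics behind",
    "factual": "Give a short factual description of",
}


def prompt_with_intent(intent: str, subject: str, focus: str = "") -> str:
    """Prefix the subject with intent-signalling keywords and add an optional focus."""
    try:
        opener = INTENT_OPENERS[intent]
    except KeyError:
        raise ValueError(
            f"unknown intent {intent!r}; expected one of {sorted(INTENT_OPENERS)}"
        ) from None
    prompt = f"{opener} {subject}"
    if focus:
        prompt += f", focusing on {focus}"
    return prompt + "."


print(prompt_with_intent("creative", "a sunset over the ocean", "the colors and emotions it evokes"))
print(prompt_with_intent("technical", "a sunset over the ocean"))
```

Keeping the openers in one table makes the intent keywords easy to review and adjust, rather than scattering them across ad hoc prompts.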

Conclusion

Effective use of prompts is key to unlocking the full potential of autoregressive LLMs like ChatGPT. By applying strategies such as clarity, contextual relevance, and iterative refinement, users can guide these models to produce high-quality, contextually appropriate text. With carefully crafted prompts and thoughtful experimentation, users can achieve better results and apply these models to diverse natural language processing tasks.

In each example, the quality of the model's response improves as the prompt becomes more detailed, contextual, and aligned with specific goals.